Oculus’ latest feature prototype VR Headset, code-named Crescent Bay, was out in force at this year’s CES, blowing minds left, right and centre. But whilst debate rages over precisely what optics and display it contains, Crescent Bay’s audio has been somewhat sidelined. Here’s why Oculus’ work on building a dedicated audio pipeline with high-end hardware matters.

‘VR Audio’ – Oculus’ Latest Initiative to Improve Immersion

Oculus’ objectives at CES 2015 were fairly clear: 1) get the new Oculus Rift ‘Crescent Bay’ onto as many people’s heads as possible and 2) talk up VR Audio, the company’s term for its 3D positional audio pipeline. It seems to have been ‘mission accomplished’ on both counts, judging by the sheer amount of mainstream press the company has received this year – all talking about 3D positional audio and their incredible experiences with Oculus’ latest hardware.

But whilst Crescent Bay’s newly integrated headphones have drawn some superficial attention from the media and community at large, it may not be immediately obvious just how seriously Oculus is taking what you, the player, hear whilst in virtual reality. Also, if you’ve yet to experience truly effective positional audio in VR (or in any gaming experience for that matter), you may not realise just how heavily attaining ‘presence’, the industry term for psychological immersion, can rely upon it.

The Hardware

Oculus’ public mission to provide top-notch VR Audio began with its announcement at Oculus Connect that the company was to license University of Maryland startup VisiSonics’ RealSpace3D audio engine for inclusion in its SDK. This means that every developer creating experiences for the Oculus Rift will have immediate access to a set of APIs that allow them to take advantage of 3D positional audio without the need to seek out proprietary solutions. In theory, this lowers the barrier to entry for great 3D sound in games.

The Crescent Bay demos given at CES this year were updated to feature this new positional audio. VR Audio itself will probably make an appearance in a beta SDK release within the next few months – with a full release appearing before the consumer Rift ships. VR demos including RealSpace3D audio are, however, out there to try right now (see link below).

See Also: A Preview of Oculus’ Newly Licensed Audio Tech Reveals Stunning 3D Sound

Oculus’ newest publicly announced feature prototype, Crescent Bay, also announced at Oculus Connect in September, embodies the VR Audio mission by including a pair of integrated headphones – a design that initially looked like a slightly incongruous afterthought. As it turns out, however, the headphones are the end of a chain of steps Oculus has taken to provide the purest and most accurate audio possible.

Feeding those custom-designed, integrated headphone drivers is a series of stages designed to cut electrical noise and keep the audio as clean as possible. Soundcards are bypassed with an external, inline DAC (Digital to Analogue Converter) taking pure digital audio information from the PC and converting it into analogue signals ready for amplification. The analogue audio is then sent through a dedicated amplifier stage, producing the signals that are finally reproduced by the HMD’s integrated, custom-tailored headphones.

The above methods are well known to those seeking a cut above the standard in sound presentation from electronic devices. Far from representing high-end audiophile snake oil, bypassing the on-board DACs and amplifiers found in everyday consumer electronics, which are generally designed and built purely with cost and not fidelity in mind, can yield some really impressive improvements in quality.

The aim here, Oculus tells us, is to provide the cleanest, flattest response possible from the audio hardware pipeline, so that the headset’s audio hardware becomes a blank canvas on which to convey a developer’s chosen audio design. At the same time, the audio path is predictable, so algorithms used to calculate and position audio cues in virtual space should also behave as expected. Oculus has essentially produced a reference design, a standard target against which developers can create.

The pipeline also allows Oculus to bypass any headaches caused by individual soundcard drivers, many of which include troublesome default equalisation (‘enhanced’ bass or treble), not to mention the unwanted DSP (Digital Signal Processing) effects, such as added echo, often found lurking in the audio stack courtesy of less discerning soundcard manufacturers. In many ways, this approach is similar to ‘direct mode’, Oculus’ attempt to cut out the middle-man in the Windows GPU driver stack to deliver the lowest latency possible to the HMD’s display.

All this means that, in theory, should Oculus follow this design through with their eventual consumer product, every user that buys an Oculus Rift at retail has the best possible chance at top quality and effective 3D positional audio right out of the box.

A Few Words on HRTFs

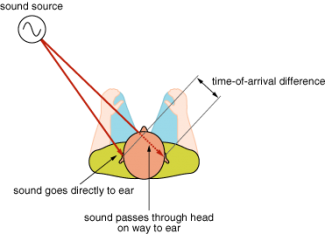

As with most of the incredible things our brains do for us, the way we perceive the world through sound is taken for granted. But the subtle detection of the reflections and distortion that sound suffers on its way to our ears provides us with critical spatial information.
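To make the idea concrete, here is a minimal Python sketch of how a positional audio engine can apply such cues: a mono sound is convolved with one impulse response per ear (the time-domain form of a head-related transfer function, or HRTF). The function names and the toy impulse responses are hypothetical and chosen purely for illustration; this is not the RealSpace3D or Oculus SDK API, and real responses are measured and far longer.

```python
def convolve(signal, impulse_response):
    """Direct-form discrete convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, sample in enumerate(signal):
        for j, tap in enumerate(impulse_response):
            out[i + j] += sample * tap
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal with a per-ear impulse response pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Hypothetical toy responses for a source on the listener's left:
# the left ear hears the sound immediately at full level, while the
# right ear hears it three samples later and at half the level.
HRIR_LEFT = [1.0, 0.0, 0.0, 0.0]
HRIR_RIGHT = [0.0, 0.0, 0.0, 0.5]

click = [1.0, 0.0]  # a single-sample click
left, right = render_binaural(click, HRIR_LEFT, HRIR_RIGHT)
print(left)   # click arrives at sample 0
print(right)  # quieter click arrives at sample 3
```

The delay and level difference between the two output channels are the kind of cues the brain uses to place the click to the left. A real engine would swap in a different response pair as the source or the listener's head moves.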

No HRTF (head-related transfer function, the filter describing how your head and ears shape a sound before it reaches your eardrums) is created equal, however. As everyone’s head shape is subtly different, your brain is attuned specifically to your own. This raises interesting questions for VR Audio’s implementation. Will there be a calibration step that allows you to provide a 3D model of your head to ensure that audio reflections and occlusion are calculated accurately? I suspect not, but it’s likely that calibrating HRTFs for each user in virtual reality will be important in the future, as every other aspect of VR becomes more and more realistic.
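As a rough illustration of why head shape matters, the sketch below uses Woodworth's classic spherical-head approximation for interaural time difference (the gap between a sound reaching one ear and the other). It is a textbook simplification, not anything Oculus or VisiSonics has described, and the head radii are illustrative values rather than measurements.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at room temperature


def woodworth_itd(head_radius_m, azimuth_deg):
    """Interaural time difference in seconds for a rigid spherical head.

    Woodworth's approximation: ITD = (r / c) * (theta + sin(theta)),
    valid for a distant source at azimuth 0 (straight ahead) to 90 degrees.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


# Two illustrative head radii, with the source directly to one side.
for radius in (0.080, 0.095):
    itd_us = woodworth_itd(radius, 90.0) * 1e6
    print(f"radius {radius * 100:.1f} cm -> ITD {itd_us:.0f} microseconds")
```

Even in this crude model, a difference of a centimetre or so in head radius shifts the timing cue by tens of microseconds, which hints at why a one-size-fits-all HRTF cannot be a perfect match for every listener.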

From my own personal experience, I’ve come closer to achieving presence in VR demos with spatialised 3D audio than in any others, so I’m heartened and impressed by the efforts being made by Oculus to ensure its use is not only supported but positively encouraged in future virtual reality content.

Combining high quality, custom components at every stage in Crescent Bay’s audio pipeline, Oculus has provided a way to ensure its vision for compelling and ultimately presence-enhancing 3D positional audio can be delivered to the consumer. Assuming these measures make their way into the consumer version, the Oculus Rift could wind up being the best sounding device in the household.