Q: The current setup requires a lot of space for the base stations. Do you intend to reduce that? What would be the minimum distance between the base stations? How many base stations are needed, and if there is more than one, how are their positions calibrated? In existing ultrasonic tracking systems, the end user has to measure the relative positions of the base stations, ideally with sub-millimeter accuracy. Does your system calibrate automatically, say by having the base stations ping each other, or does it not require calibration at all? If so, how?

A: The locating method uses a minimum of four sensors. More sensors can be added to reduce occlusions but four is the minimum. The spacing between the sensors depends on the application. If the sensors are farther apart you get higher accuracy at a longer range. Move them closer together and the total size of the sensor array is smaller at the cost of accuracy.
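A simplified two-sensor sketch shows why spacing matters (an illustration under stated assumptions, not the author's analysis). Put two sensors a baseline `b` apart, put the marker straight ahead of their midpoint at range `R`, and suppose the measured range difference is off by `σ`. The range difference changes by `b / sqrt(R² + b²/4)` per unit of sideways motion, so the sideways error is about `σ·sqrt(R² + b²/4) / b`. At a fixed range, doubling the baseline roughly halves the error. The function name and the demo numbers are hypothetical.

```python
import math


def lateral_error(baseline: float, distance: float, range_diff_error: float) -> float:
    """Sideways position error for a marker straight ahead of two sensors.

    Sensors sit at x = -baseline/2 and x = +baseline/2; the marker is at
    x = 0, `distance` away from their midpoint. At x = 0 the range difference
    (d_left - d_right) changes by baseline / sqrt(distance**2 + baseline**2 / 4)
    per unit of sideways motion, so dividing the range-difference error by
    that sensitivity gives the resulting sideways error.
    """
    sensitivity = baseline / math.sqrt(distance**2 + baseline**2 / 4)
    return range_diff_error / sensitivity


if __name__ == "__main__":
    # Hypothetical numbers: 0.01 inch range-difference error, player 100 inches away.
    for baseline in (10.0, 20.0, 40.0):
        err = lateral_error(baseline, distance=100.0, range_diff_error=0.01)
        print(f"baseline {baseline:5.1f} in -> lateral error {err:.3f} in")
```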

For a console controller, the flatscreen makes a convenient home for the sensor array. I chose a sensor arrangement that sits flat against the wall so that you can set this up in your living room without seeing hardware all over the place.

Two sensors are positioned at the bottom corners of the screen. One is positioned at the center of the bottom edge and the other at the center of the top edge. The system needs two measurements to account for the television size. The first is from the bottom/center sensor to the top/center. The second is from the bottom/center to either of the outside sensors. That’s all the calibration that’s necessary. When I set up a system I “calibrate” it with a tape measure and off I go.
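The two tape measurements are enough because they fix the coordinates of all four sensors, and those coordinates are what a position solver needs. The sketch below is a generic multilateration under stated assumptions, not the author's solver (he doesn't describe it): the marker's distance to each sensor is already known, the corner sensors are level with and symmetric about the bottom/center sensor, and the coordinate frame is chosen for convenience. Because all four sensors lie in the wall plane, the linearized equations only pin down x and y; z then comes from one sensor's distance, taking the solution in front of the wall. `sensor_layout` and `locate` are hypothetical names.

```python
import math

Point = tuple[float, float, float]


def sensor_layout(center_to_top: float, center_to_corner: float) -> list[Point]:
    """Sensor coordinates from the two tape measurements.

    Assumed frame: origin at the bottom/center sensor, x to the right along
    the bottom edge, y up the wall, z out of the wall toward the player.
    The corner sensors are assumed level with the bottom/center sensor.
    """
    return [
        (0.0, 0.0, 0.0),                # bottom/center
        (-center_to_corner, 0.0, 0.0),  # bottom/left corner
        (center_to_corner, 0.0, 0.0),   # bottom/right corner
        (0.0, center_to_top, 0.0),      # top/center
    ]


def locate(sensors: list[Point], dists: list[float]) -> Point:
    """Marker position from its distance to each of the coplanar sensors.

    Subtracting sensor 0's sphere equation from sensor i's gives a linear
    equation in (x, y); z drops out because every sensor has z = 0. Those
    equations are solved by least squares, then z is recovered from sensor 0's
    sphere, keeping the root in front of the wall (z >= 0).
    """
    x0, y0, _ = sensors[0]
    d0 = dists[0]
    # Accumulate the 2x2 normal equations A^T A [x, y] = A^T b.
    s_xx = s_xy = s_yy = b_x = b_y = 0.0
    for (xi, yi, _), di in zip(sensors[1:], dists[1:]):
        a_x, a_y = 2 * (xi - x0), 2 * (yi - y0)
        rhs = (xi**2 + yi**2) - (x0**2 + y0**2) - di**2 + d0**2
        s_xx += a_x * a_x
        s_xy += a_x * a_y
        s_yy += a_y * a_y
        b_x += a_x * rhs
        b_y += a_y * rhs
    det = s_xx * s_yy - s_xy**2
    x = (s_yy * b_x - s_xy * b_y) / det
    y = (s_xx * b_y - s_xy * b_x) / det
    # Clamp at zero so small measurement noise cannot break the square root.
    z = math.sqrt(max(d0**2 - (x - x0) ** 2 - (y - y0) ** 2, 0.0))
    return (x, y, z)
```

For example, with `sensors = sensor_layout(30.0, 25.0)`, passing `locate` the exact distances from each sensor to a marker at (5, 12, 90) returns that point up to floating-point rounding. The z estimate leans on a single sensor here; a fuller solver would refine all three coordinates together, for example with a few Gauss-Newton iterations.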

In practice I’ve found you have to be misaligned pretty badly before you notice it in gameplay. It’s surprisingly forgiving. If your measurements are accurate to within ¼”, you’re unlikely to notice.

I do have some calibration routines that take the two initial measurements and tweak them based on the incoming tracking data. I usually don’t bother. It’s not that critical, and I want to keep the setup simple. I’ll probably include the calibration as an optional step in case you want to fine-tune things.
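The author doesn't describe his calibration routines. As one illustration of refining a measurement from tracking data (an assumption-laden sketch, not his method): with the three bottom sensors equally spaced on one line, every marker sample satisfies `d_left² + d_right² − 2·d_center² = 2w²`, where `w` is the bottom/center-to-corner distance, wherever the marker is. That gives a per-sample estimate of `w`, and a median over many samples shrugs off occasional bad readings. This covers only the width measurement, and the function names are hypothetical.

```python
import math
import statistics


def corner_spacing_from_sample(d_center: float, d_left: float, d_right: float) -> float:
    """Estimate the bottom/center-to-corner distance from one marker sample.

    For three equally spaced collinear sensors at x = -w, 0, +w and any marker
    position, d_left**2 + d_right**2 - 2 * d_center**2 == 2 * w**2 exactly,
    because the marker's offset perpendicular to the sensor line cancels out.
    """
    return math.sqrt(max((d_left**2 + d_right**2 - 2 * d_center**2) / 2, 0.0))


def refine_corner_spacing(samples: list[tuple[float, float, float]]) -> float:
    """Median of the per-sample estimates, to resist occasional bad readings.

    Each sample is (d_center, d_left, d_right) for one marker position.
    """
    return statistics.median(corner_spacing_from_sample(*s) for s in samples)
```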

Everything in this system was optimized for console gaming but there are many other configurations of the markers and sensors that also work. Each configuration has pluses and minuses. For a different application I might arrange things differently.

Q: It seems the update rate is 50 Hz. Do you intend to improve that for the consumer release? What would be the maximum supported frequency?

A: Building this first system has been an incredible learning experience. I now know how to do so much more than when I started. I know way more about ultrasonic sound than I ever wanted to know! I have a whole list of modifications that improve the sampling rate. I’ve tested each mod individually, but I’ve maxed out the processors I’m using, so I can’t implement them all together on the current prototype. Argh.

The first step after the Kickstarter is to port to faster processors. A lot of the work I’m doing in software could be better handled in hardware. Putting these functions in an FPGA will dramatically improve performance.

I don’t know what the maximum sampling frequency will be. I have some mods that can increase the sampling rate significantly, but I need to get to better hardware to implement them. I won’t know what the upper limit is until I start building things.
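For a rough sense of one physical limit (not a figure from the author): if the markers take turns pinging, and each ping has to reach the farthest sensor before the next one starts, the sound's travel time caps the update rate. This sketch ignores echo settling unless you pass it in, and it doesn't apply as-is to schemes where coded or frequency-separated signals overlap. The speed of sound is taken as about 343 m/s (dry air near 20 °C); the function name and ranges are hypothetical.

```python
# About 343 m/s (dry air, roughly 20 °C) expressed in inches per second.
SPEED_OF_SOUND_IN_PER_S = 13_504.0


def max_update_rate_hz(max_range_in: float, markers: int = 1, settle_s: float = 0.0) -> float:
    """Upper bound on full position updates per second for time-shared pings.

    Assumes each marker pings in its own time slot, and each slot lasts at
    least as long as sound takes to cross `max_range_in`, plus an optional
    settling time for echoes to die down.
    """
    slot_s = max_range_in / SPEED_OF_SOUND_IN_PER_S + settle_s
    return 1.0 / (slot_s * markers)
```

With a 120-inch (10 ft) maximum range, `max_update_rate_hz(120.0)` comes out a little over 112 Hz for one marker, and half that when two controller halves share the air.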

Q: What sound technology are you tracking with? Is it subject to being occluded like the Power Glove? Is it tethered to stationary stereo speakers or microphones?

A: The system uses ultrasonic sound. The signals go through a lot of software to pull quality data out of what is a fuzzy, difficult, unreliable, sloppy signal.
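The author doesn't say what the software does to the signal. A standard way to get a clean arrival time out of a noisy signal is a matched filter: cross-correlate the received samples with the pattern the transmitter is known to send and take the peak. In this sketch the 13-chip Barker pattern, the noise level, and the sample indices are assumptions chosen for illustration; dividing the returned index by the sample rate would give the arrival time.

```python
import random

# Hypothetical transmit pattern: a 13-chip Barker code, chosen because its
# autocorrelation has a sharp peak and side lobes no larger than 1.
BARKER_13 = [1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1]


def arrival_index(received: list[float], template: list[float]) -> int:
    """Sample index where `template` best matches `received` (matched filter).

    Slides the template across the received samples, computes the dot product
    at every full-overlap offset, and returns the offset with the largest score.
    """
    n = len(template)
    best_index, best_score = 0, float("-inf")
    for start in range(len(received) - n + 1):
        score = sum(t * r for t, r in zip(template, received[start:start + n]))
        if score > best_score:
            best_index, best_score = start, score
    return best_index


if __name__ == "__main__":
    rng = random.Random(1)
    # Uniform noise everywhere, with the pattern buried starting at sample 37.
    signal = [rng.uniform(-0.3, 0.3) for _ in range(200)]
    for i, chip in enumerate(BARKER_13):
        signal[37 + i] += chip
    print(arrival_index(signal, BARKER_13))  # prints 37
```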

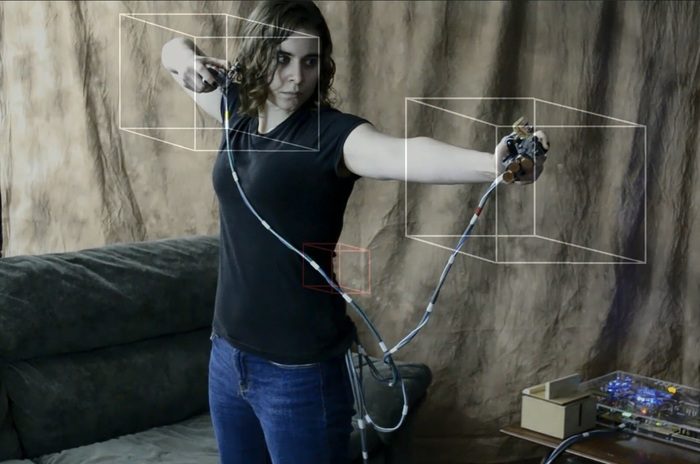

Each controller half has an ultrasonic transmitter that is used as a tracking marker. Four sensors are attached to the outside frame of the television.
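Assuming the system measures one-way time of flight from a marker to each sensor (the author doesn't spell this out), each distance is travel time multiplied by the speed of sound. The sketch uses the common dry-air approximation c ≈ 331.3 + 0.606·T m/s, with T in °C, and converts to inches to match the rest of this Q&A; the function names are hypothetical.

```python
METRES_PER_INCH = 0.0254


def speed_of_sound_in_per_s(temp_c: float) -> float:
    """Approximate speed of sound in dry air, in inches per second.

    Uses the linear approximation c = 331.3 + 0.606 * T m/s, T in degrees C.
    """
    metres_per_s = 331.3 + 0.606 * temp_c
    return metres_per_s / METRES_PER_INCH


def distance_in(time_of_flight_s: float, temp_c: float = 20.0) -> float:
    """Transmitter-to-sensor distance in inches for a one-way time of flight."""
    return time_of_flight_s * speed_of_sound_in_per_s(temp_c)
```

At 20 °C that is roughly 13,520 inches per second, so resolving 0.01 inch means resolving about 0.74 µs of travel time, and a 1 °C change shifts a 100-inch distance by roughly 0.18 inch. Air temperature therefore matters at hundredth-of-an-inch accuracy.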

Q: How did you measure the 1/100 inch accuracy?

A: I put the controller in a vice and moved the vice, measuring with a micrometer.

In order to have an “accurate” controller location I also looked at what a player needed for it to “feel” accurate. This is what I came up with for using the system with console gaming:

1. If the controller isn’t moving, the (x,y,z) measurement shouldn’t move. It can’t bounce around. If I’m aiming at a tiny target and the aim bounces on its own, it will just be frustrating.

2. If the controller moves a very small amount, the measurement should reflect that small movement. The difference between a miss and a headshot may only be a few pixels. If I don’t have enough control to move a few pixels, I can’t aim.

3. If there’s a large movement, that movement has to feel in proportion to small movements.

4. The response has to be immediate. Averaging a bunch of samples can improve accuracy, but the lag in tracking would make it feel inaccurate. So, no sample averaging.
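To put a number on the lag in #4: a trailing N-sample moving average, the simplest kind of sample averaging, delays a steadily moving signal by (N − 1)/2 samples. At the 50 Hz update rate mentioned in the questions, averaging five samples would add about 40 ms before the game even sees the motion. The window size is an arbitrary example and the function names are hypothetical.

```python
def moving_average(samples: list[float], window: int) -> list[float]:
    """Trailing moving average; output i averages samples[i : i + window]."""
    return [
        sum(samples[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(samples))
    ]


def averaging_lag_ms(window: int, sample_rate_hz: float) -> float:
    """Delay a trailing moving average adds to steady motion: (window - 1) / 2 samples."""
    return (window - 1) / 2 / sample_rate_hz * 1000.0
```

Feeding a ramp (a controller moving at constant speed) through `moving_average` shows the effect directly: each output equals the input from two samples earlier when the window is 5.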

I have an LCD monitor for debugging that displays the real-time (x,y,z) coordinates of a marker in hundredths of an inch. If I put the controller in a vice and, say, watch the (x) coordinate over time, the most change I see is the hundredths digit flipping back and forth between two values: “10.45” inches to “10.46” inches and then maybe back again. That satisfies #1.
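If the debug readout were logged instead of watched on the LCD (an assumption), the stationary test for #1 could be scripted: convert readings to whole hundredths of an inch and check that the peak-to-peak spread is at most one count, which matches the “10.45”/“10.46” flicker described above. The function names are hypothetical.

```python
def jitter_counts(readings_in: list[float]) -> int:
    """Peak-to-peak spread of readings, in whole hundredths of an inch.

    Readings are converted to integer hundredths first, so floating-point
    error (10.46 - 10.45 is not exactly 0.01) does not distort the count.
    """
    counts = [round(r * 100) for r in readings_in]
    return max(counts) - min(counts)


def passes_stationary_test(readings_in: list[float]) -> bool:
    """True if a stationary marker's readout flickers by at most one hundredth."""
    return jitter_counts(readings_in) <= 1
```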

If I move it a tiny bit with my vice and micrometer, I can see the coordinate change immediately, and it matches what I expect. That satisfies #2 and #4.

I measured #3 as well, but it’s much easier to judge during gameplay. If the response isn’t proportional, the aiming just doesn’t feel right.
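For a bench version of #3, one option (a sketch, not the author's procedure) is to fit a straight line to measured displacement against micrometer displacement over a mix of tiny and large moves. Proportional tracking shows up as a slope near 1 with small residuals at both ends of the range. The function names are hypothetical.

```python
def fit_line(commanded: list[float], measured: list[float]) -> tuple[float, float]:
    """Ordinary least-squares slope and intercept of measured vs commanded."""
    n = len(commanded)
    mean_c = sum(commanded) / n
    mean_m = sum(measured) / n
    cov = sum((c - mean_c) * (m - mean_m) for c, m in zip(commanded, measured))
    var = sum((c - mean_c) ** 2 for c in commanded)
    slope = cov / var
    return slope, mean_m - slope * mean_c


def worst_deviation(commanded: list[float], measured: list[float]) -> float:
    """Largest residual of any measured point from the fitted line."""
    slope, intercept = fit_line(commanded, measured)
    return max(abs(m - (slope * c + intercept)) for c, m in zip(commanded, measured))
```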

In console gaming, #4 is a difficult one. The player perceives lag as the delay between doing something and seeing it on the screen. The game adds some lag while it does its processing. The flatscreen can add a lot of lag, depending on the model. Putting it in “Game Mode” helps, but there’s still a delay before it displays the video that the console is sending.

I’ve actually managed to eat into this lag a bit. There are some really fun things in the software that reduce this lag, even though it’s not caused by the controller. No, I won’t tell you how I did it.

Q: Is it 3DOF (positional) or 6DOF (positional and rotational) tracking? Is the technology used ultrasonic or inertial-ultrasonic hybrid?

A: The prototype in the video is only using the 3D positional data but there’s nothing to stop me from experimenting with additional devices in the future.

The prototype was the result of a lot of “I wonder if this will work” thinking. “I wonder how it will work if I use absolute positioning instead of inertial?” “Can I do different things with true absolute positioning data that inertial devices can’t do?” It was an experiment. To control my variables I built it only with the absolute positioning system.

I wanted it to be clear that I was doing things using a non-inertial method. If I included any other tracking methods I knew that I would spend the rest of my life trying to explain what was due to the absolute positioning system and what was due to the tracking methods that everyone else was using.

Furthermore, demonstrating inertial sensors adds nothing to the demo. They’re everywhere and in everything. We all know how they work. I’m trying to demonstrate something new, not what you already know about.