Achieving a wide field of view in an AR headset is a challenge in itself, but so too is fixing the so-called vergence-accommodation conflict which presently plagues most VR and AR headsets, making them less comfortable and less in sync with the way our vision works in the real world. Researchers have set out to try to tackle both issues using varifocal membrane mirrors.

Researchers from UNC, MPI Informatik, NVIDIA, and MMCI have demonstrated a novel see-through near-eye display for augmented reality that uses membrane mirrors to achieve varifocal optics while maintaining a wide 100-degree field of view.

Vergence-Accommodation Conflict

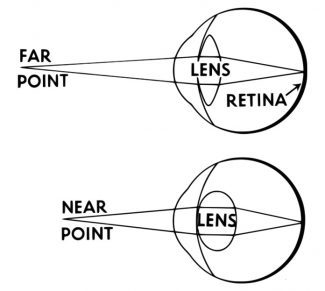

In the real world, to focus on a near object, the lens of your eye bends to focus the light from that object onto your retina, giving you a sharp view of the object. For an object that’s farther away, the light enters your eye at different angles, and the lens again must bend to keep that light focused on your retina. This is why, if you close one eye and focus on your finger a few inches from your face, the world behind your finger is blurry. Conversely, if you focus on the world behind your finger, your finger becomes blurry. This is called accommodation.

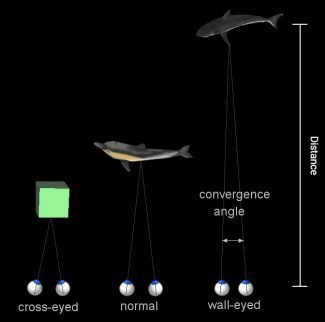

Then there’s vergence, which is when each of your eyes rotates inward to ‘converge’ the separate views from each eye into one overlapping image. For very distant objects, your lines of sight are nearly parallel, because the distance between your eyes is so small in comparison to the distance of the object (meaning each eye sees a nearly identical portion of the object). For very near objects, your eyes must rotate sharply inward to converge the image. You can see this with the same finger trick: this time, using both eyes, hold your finger a few inches from your face and look at it. Notice that you see double images of objects far behind your finger. When you then look at those objects behind your finger, you see a double image of your finger instead.

With precise enough instruments, you could use either vergence or accommodation to determine exactly how far away a person’s point of focus is (remember this, it’ll be important later). But the thing is, both accommodation and vergence happen together, automatically. And they don’t just happen at the same time; there’s a direct correlation between vergence and accommodation, such that for any given measurement of vergence, there’s a directly corresponding level of accommodation (and vice versa). Since you were a little baby, your brain and eyes have formed muscle memory to make these two things happen together, without thinking, any time you look at anything.
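To put rough numbers on that correlation, here’s a small Python sketch (not from the paper) that computes both cues for a point straight ahead of the viewer. It assumes symmetric fixation and an interpupillary distance of about 63 mm, a typical adult value; the function names are made up for illustration.

```python
import math

# Assumed interpupillary distance (IPD); real values vary from person to person.
ASSUMED_IPD_M = 0.063


def vergence_angle_deg(distance_m: float, ipd_m: float = ASSUMED_IPD_M) -> float:
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m (symmetric fixation assumed)."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def accommodation_diopters(distance_m: float) -> float:
    """Focusing demand on the eye's lens, in diopters (1 / distance in meters)."""
    return 1.0 / distance_m


# Both cues shrink together as the object moves away.
for d in (0.1, 0.5, 2.0, 10.0):
    print(f"{d:5.1f} m: vergence {vergence_angle_deg(d):6.2f} deg, "
          f"accommodation {accommodation_diopters(d):5.2f} D")
```

Because each distance maps to exactly one vergence angle and one accommodation value, measuring either one tells you the other, which is the pairing your visual system has learned to expect.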

But when it comes to most of today’s AR and VR headsets, vergence and accommodation are out of sync due to inherent limitations of the optical design.

In a basic AR or VR headset, there’s a display (which is, let’s say, 3″ away from your eye) which makes up the virtual image, and a lens which focuses the light from the display onto your eye (just like the lens in your eye would normally focus the light from the world onto your retina). But since the display is a static distance from your eye, the light coming from all objects shown on that display is coming from the same distance. So even if there’s a virtual mountain five miles away and a coffee cup on a table five inches away, your eye has to focus at the same distance for both (which means your accommodation, the bending of the lens in your eye, never changes).
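A rough way to see why is the thin-lens equation, 1/f = 1/d_o + 1/d_i. The Python sketch below uses hypothetical numbers (an 8 cm focal length lens with the display 7.5 cm away) and ignores the gap between the headset lens and your eye; real headset optics are more complex. The point is that the virtual image distance depends only on f and d_o, and neither changes with what’s being rendered.

```python
import math


def virtual_image_distance_m(focal_length_m: float, display_distance_m: float) -> float:
    """Solve the thin-lens equation 1/f = 1/d_o + 1/d_i for d_i.

    With the display inside the focal length, d_i comes out negative: a virtual
    image on the display side of the lens. Its distance is returned as a positive value.
    """
    inv_di = 1.0 / focal_length_m - 1.0 / display_distance_m
    if inv_di == 0.0:
        return math.inf  # display exactly at the focal point: image at optical infinity
    if inv_di > 0.0:
        raise ValueError("display must be inside the focal length to form a virtual image")
    return -1.0 / inv_di


# Hypothetical fixed optics: neither value depends on scene content.
LENS_F_M = 0.08
DISPLAY_D_M = 0.075

focus_m = virtual_image_distance_m(LENS_F_M, DISPLAY_D_M)
for name, scene_depth_m in [("mountain (~5 miles)", 8047.0), ("coffee cup (~5 inches)", 0.127)]:
    print(f"{name}: rendered at {scene_depth_m} m, eye focuses at {focus_m:.2f} m")
```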

That conflicts with vergence, which in such headsets is variable because a different image can be shown to each eye. Being able to adjust the image independently for each eye, such that our eyes need to converge on objects at different depths, is essentially what gives today’s AR and VR headsets stereoscopy. But the most realistic (and arguably most comfortable) display we could create would eliminate the vergence-accommodation conflict and let the two work in sync, just like we’re used to in the real world.
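One common way to quantify the mismatch is in diopters: the difference between 1/(vergence distance) and 1/(focal distance). This Python sketch compares a fixed-focus headset (a hypothetical 1.5 m focal distance) with an idealized varifocal one whose focus always follows the vergence distance.

```python
def va_conflict_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Vergence-accommodation mismatch in diopters (0 means the two cues agree)."""
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)


FIXED_FOCUS_M = 1.5  # hypothetical focal distance of a fixed-focus headset

for depth_m in (0.3, 0.5, 1.5, 10.0):
    fixed = va_conflict_diopters(depth_m, FIXED_FOCUS_M)
    varifocal = va_conflict_diopters(depth_m, depth_m)  # idealized: focus tracks vergence
    print(f"object at {depth_m:4.1f} m: fixed-focus {fixed:.2f} D, varifocal {varifocal:.2f} D")
```

In the fixed-focus case the conflict is zero only for content that happens to sit at the headset’s focal distance; everything nearer or farther introduces a mismatch.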

Eliminating the Conflict

To make that happen, there needs to be a way to adjust the focal power of the lens in the headset. With traditional glass or plastic optics, the focal power is static and determined by the curvature of the lens. But if you could adjust the curvature of a lens on-demand, you could change the focal power whenever you wanted. That’s where membrane mirrors and eye-tracking come in.
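For a curved mirror such as a membrane mirror, the same idea applies: in the paraxial approximation a spherical mirror’s focal length is half its radius of curvature (f = R/2), and it obeys the same 1/f = 1/d_o + 1/d_i relation as a thin lens. The Python sketch below assumes a hypothetical display 5 cm from the mirror and solves for the focal power and curvature needed to put the virtual image at a chosen depth. It’s a textbook simplification, not the paper’s optical model.

```python
import math

DISPLAY_DISTANCE_M = 0.05  # hypothetical display-to-mirror distance


def required_power_diopters(target_depth_m: float,
                            display_distance_m: float = DISPLAY_DISTANCE_M) -> float:
    """Focal power (1/f) that places a virtual image at target_depth_m.

    Mirror equation 1/f = 1/d_o + 1/d_i with d_i = -target_depth_m
    (negative because the image is virtual). math.inf means optical infinity.
    """
    if target_depth_m <= display_distance_m:
        raise ValueError("target depth must be beyond the display distance")
    return 1.0 / display_distance_m - 1.0 / target_depth_m


def radius_of_curvature_m(power_diopters: float) -> float:
    """Paraxial spherical mirror: f = R / 2, so R = 2 / power."""
    return 2.0 / power_diopters


for depth_m in (math.inf, 1.0, 0.2):
    p = required_power_diopters(depth_m)
    print(f"image at {depth_m} m: power {p:.1f} D, radius {radius_of_curvature_m(p) * 100:.1f} cm")
```

In this simplified model, pulling the image in from infinity to 20 cm means going from 20 D to 15 D, i.e. making the mirror slightly flatter, so a modest change in curvature sweeps a large range of depths.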

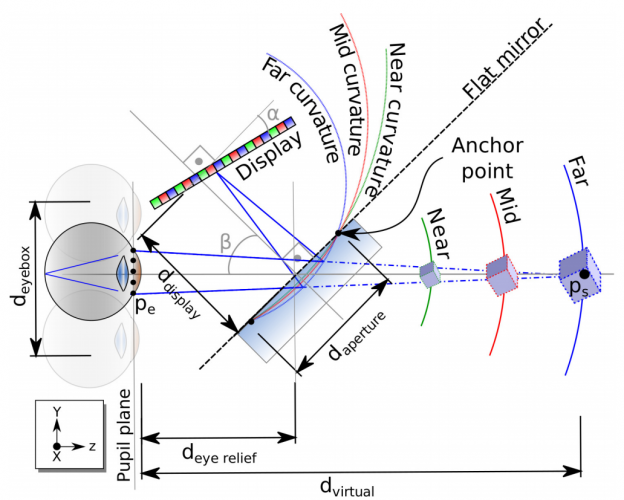

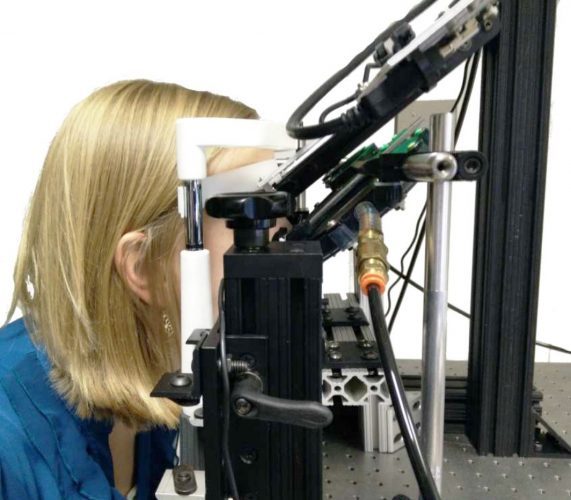

In a soon-to-be-published paper titled Wide Field Of View Varifocal Near-Eye Display Using See-Through Deformable Membrane Mirrors, researchers demonstrated how they could use mirrors made of deformable membranes inside vacuum chambers to create a pair of varifocal see-through optical elements, forming the foundation of an AR display.

The mirrors are able to set the accommodation depth of virtual objects anywhere from 20 cm to (optical) infinity. According to the paper, the mirrors take 300 ms to move between that minimum and maximum focal power, with smaller changes in focal power happening faster.
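Focus range is commonly expressed in diopters (1 / meters), which makes the reported range easy to reason about: 20 cm is 5 D and optical infinity is 0 D. The sketch below converts depths to diopters and clamps a requested focus depth to that range; the 20 cm limit comes from the paper, while the function names are hypothetical.

```python
import math

NEAR_LIMIT_M = 0.20  # nearest accommodation depth reported for the prototype


def to_diopters(distance_m: float) -> float:
    """Convert a distance in meters to diopters; optical infinity is 0 D."""
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


def clamp_focus_depth_m(requested_m: float) -> float:
    """Clamp a requested accommodation depth to the supported 20 cm to infinity range."""
    return max(requested_m, NEAR_LIMIT_M)


full_range_d = to_diopters(NEAR_LIMIT_M) - to_diopters(math.inf)
print(f"full focus range: {full_range_d:.1f} D")  # the min-to-max span behind the 300 ms figure
print(clamp_focus_depth_m(0.1), clamp_focus_depth_m(2.0))
```

Thinking in diopters also shows why many real-world refocusing moves are small: going from 2 m to infinity is only 0.5 D, a tenth of the full range.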

But how do you know where to set the accommodation depth so that it’s perfectly in sync with the vergence depth? Thanks to integrated eye-tracking, the apparatus can rapidly measure the vergence of the user’s eyes, and that vergence angle can be used to estimate the depth of whatever the user is looking at. With that data in hand, matching the accommodation depth is a matter of adjusting the focal power of the mirrors.
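Here’s a minimal sketch of that depth estimate, assuming an eye tracker that reports each eye’s horizontal gaze angle (yaw) and an assumed interpupillary distance of 63 mm. It’s a simple top-down triangulation written for illustration, not the paper’s eye-tracking pipeline; real systems also have to handle tracker noise and calibration.

```python
import math


def fixation_depth_m(left_yaw_deg: float, right_yaw_deg: float, ipd_m: float = 0.063) -> float:
    """Estimate fixation depth by intersecting the two gaze rays (top-down 2D model).

    Eyes sit at x = -ipd/2 (left) and x = +ipd/2 (right), looking along +y.
    Yaw is measured from straight ahead, positive toward the user's right (+x).
    Each gaze ray is x = x_eye + y * tan(yaw); equating the two gives
    y = ipd / (tan(left_yaw) - tan(right_yaw)).
    """
    spread = math.tan(math.radians(left_yaw_deg)) - math.tan(math.radians(right_yaw_deg))
    if spread <= 0.0:
        return math.inf  # parallel or diverging gaze: treat as optical infinity
    return ipd_m / spread


# Eyes converging symmetrically on a point 0.5 m straight ahead.
half_angle_deg = math.degrees(math.atan(0.0315 / 0.5))
print(fixation_depth_m(half_angle_deg, -half_angle_deg))
```

Because the vergence angle changes very little for distant objects, small angular errors become large depth errors far away; conveniently, those are also the depths where focus changes least in diopter terms.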

Those of you following along closely will probably see a potential limitation to this approach: the accommodation depth can only be set to one depth at a time, so it can match only one virtual object (or objects at the same depth). The researchers thought about this too, and proposed a solution to be tested at a later date:

Our display is capable of displaying only a single depth at a time, which leads to incorrect views for virtual content [spanning] different depths. A simple solution to this would be to apply a defocus kernel approximating the eye’s point spread function to the virtual image according to the depth of the virtual objects. Due to the potential of rendered blur not being equivalent to optical blur, we have not implemented this solution. Future work must evaluate the effectiveness of using rendered blur in place of optical blur.
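The authors didn’t implement this, so the following is only an illustration of what a depth-dependent defocus kernel could look like. It uses the common geometric-optics approximation that the angular diameter of the blur circle is roughly the pupil diameter times the defocus in diopters, and a uniform disc (pillbox) as the kernel. The 4 mm pupil and 20 pixels-per-degree values are assumptions, and, as the authors caution, rendered blur like this may not match real optical blur.

```python
import math


def defocus_blur_px(object_depth_m: float, focus_depth_m: float,
                    pupil_m: float = 0.004, px_per_deg: float = 20.0) -> float:
    """Approximate blur-circle diameter in pixels for an object off the focal depth.

    Geometric small-angle approximation: blur angle (rad) ~ pupil diameter (m)
    * defocus (diopters). Pupil size and pixel density are assumed values.
    """
    defocus_d = abs(1.0 / object_depth_m - 1.0 / focus_depth_m)
    return math.degrees(pupil_m * defocus_d) * px_per_deg


def pillbox_kernel(diameter_px: float) -> list[list[float]]:
    """Uniform disc kernel normalized to sum to 1 (a crude defocus PSF)."""
    r = max(diameter_px / 2.0, 0.5)
    n = math.ceil(r)
    weights = [[1.0 if x * x + y * y <= r * r else 0.0 for x in range(-n, n + 1)]
               for y in range(-n, n + 1)]
    total = sum(sum(row) for row in weights)
    return [[w / total for w in row] for row in weights]


# Mirrors focused at 0.5 m; a virtual object sits at 2 m.
blur = defocus_blur_px(object_depth_m=2.0, focus_depth_m=0.5)
kernel = pillbox_kernel(blur)
print(f"blur diameter ~{blur:.1f} px, kernel size {len(kernel)}x{len(kernel)}")
```

Each virtual object would be convolved with the kernel for its own depth, so content at the mirrors’ current focal depth stays sharp while everything else is softened in proportion to its dioptric distance.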

Other limitations of the system (and possible solutions) are detailed in section 6 of the paper, including varifocal response time, form-factor, latency, consistency of focal profiles, and more.

Retaining a Wide Field of View & High Resolution

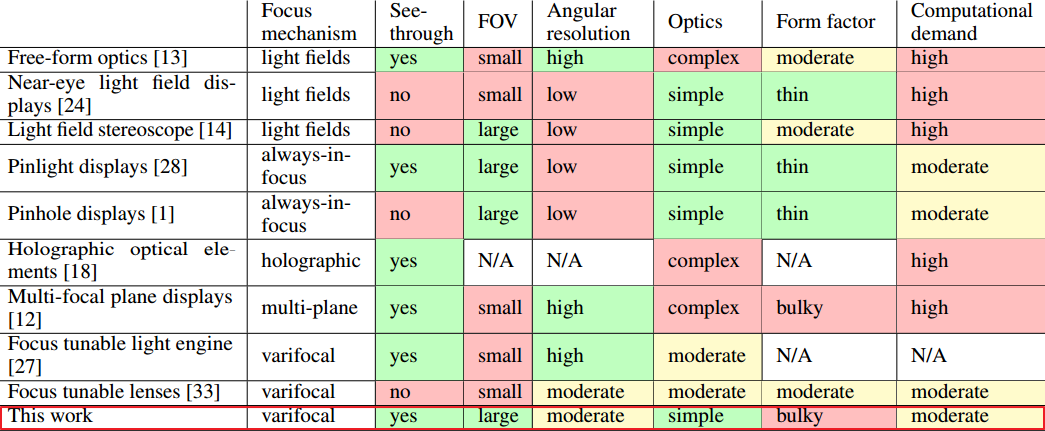

But this isn’t the first time someone has demonstrated a varifocal display system. The researchers identified several other varifocal display approaches, including free-form optics, light field displays, pinlight displays, pinhole displays, multi-focal plane displays, and more. But, according to the paper’s authors, all of these approaches make significant tradeoffs in other important areas like field of view and resolution.

And that’s what makes this novel membrane mirror approach so interesting: according to the authors, it not only tackles the vergence-accommodation conflict, but does so while allowing a wide 100-degree field of view and retaining relatively high resolution. As the chart above shows, among the varifocal approaches the researchers identified, every large-FOV approach comes with low angular resolution (and vice versa), except for their own.
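The tradeoff in that chart comes down to simple arithmetic: spreading the same number of pixels over a wider field of view leaves fewer pixels per degree. The Python sketch below uses a hypothetical 1,920-pixel-wide panel (not the paper’s hardware) and compares it with roughly 60 pixels per degree, the density at which one pixel spans about one arcminute, a common benchmark for 20/20 acuity.

```python
def avg_pixels_per_degree(pixels_across: int, fov_deg: float) -> float:
    """Average angular resolution across the field of view (ignores lens distortion)."""
    return pixels_across / fov_deg


PANEL_PX = 1920    # hypothetical horizontal pixel count
ACUITY_PPD = 60.0  # ~1 arcminute per pixel

for fov in (40.0, 100.0):
    ppd = avg_pixels_per_degree(PANEL_PX, fov)
    print(f"{fov:.0f} deg FOV: {ppd:.1f} px/deg ({60.0 / ppd:.2f} arcmin per pixel, "
          f"{ppd / ACUITY_PPD:.0%} of the ~60 px/deg benchmark)")
```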

– – — – –

This technology is obviously at a very preliminary stage, but it has effectively demonstrated a way to address several key challenges facing AR and VR headset designs. And with that, I’ll leave the parting thoughts to the paper’s authors (D. Dunn, C. Tippets, K. Torell, P. Kellnhofer, K. Akşit, P. Didyk, K. Myszkowski, D. Luebke, and H. Fuchs):

Despite few limitations of our system, we believe that providing correct focus cues as well as wide field of view are most crucial features of head-mounted displays that try to provide seamless integration of the virtual and the real world. Our screen not only provides basis for new, improved designs, but it can be directly used in perceptual experiments that aim at determining requirements for future systems. We, therefore, argue that our work will significantly facilitate the development of augmented reality technology and contribute to our understanding of how it influences user experience.