At Nvidia’s special Editors Day event in Austin, Texas, the company unveiled an enhancement to its VRWorks APIs, which now include what it says is the first “Physically Based Acoustic Simulator Engine”, accelerated by Nvidia GPUs.

Nvidia and AMD’s battle to claim the high ground in virtual reality has been under way for some time now, but VR visuals and latency have largely received the lion’s share of the attention.

At Nvidia’s special Editors Day event today, Nvidia CEO Jen-Hsun Huang announced that VRWorks (formerly GameWorks VR), the company’s collection of virtual-reality-focused rendering APIs, is to receive a new, physically based audio engine capable of performing the calculations needed to model how sound interacts with virtual spaces entirely on the GPU.

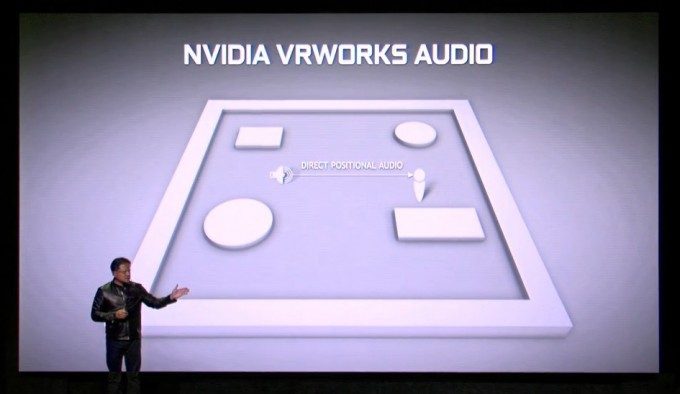

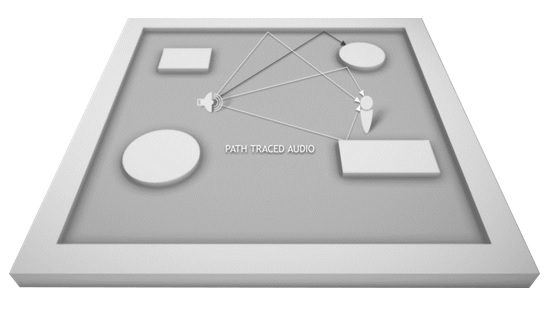

Physically based spatial or 3D audio is the process by which sounds generated within a virtual scene are affected by the path they take before reaching the player’s virtual ears. The resulting effects range from muffling, as walls and doors occlude sound, to reverberation and echoes caused by sound bouncing off many physical surfaces.

Nvidia states, in a recently released video demonstrating the new VR Audio engine, that it is approaching audio modelling and rendering much like ray tracing. Ray tracing is a processor-intensive but incredibly accurate way to render graphics, calculating the path of individual rays of light from source to destination in a scene. Similarly, albeit presumably much more cheaply in computational terms, Nvidia claims to trace the paths sound waves travel through a virtual scene, applying ‘physical’ attributes and dynamically rendering audio based on the resulting distortion. To put it simply, it works out how sound bounces off stuff and makes it sound real. In fact, VR Audio uses Nvidia’s pre-existing ray-tracing engine OptiX to “simulate the movement, or propagation, of sound within an environment, changing the sound in real time based on the size, shape and material properties of your virtual world — just as you’d experience in real life.”
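To make the geometric idea concrete, here is a minimal Python sketch of the image-source method, a simpler relative of acoustic ray tracing that finds the direct path plus the six first-order wall reflections in an empty rectangular (“shoebox”) room. It is not Nvidia’s OptiX-based approach; the room model, the single absorption coefficient shared by every wall, and all names below are assumptions chosen purely for illustration.

```python
import math
from dataclasses import dataclass

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C

@dataclass
class Path:
    delay_s: float  # arrival time at the listener, in seconds
    gain: float     # pressure amplitude relative to 1 m from the source

def first_order_paths(room, src, lis, absorption):
    """Direct path plus the six first-order wall reflections in a shoebox room.

    room: (Lx, Ly, Lz) in metres, with walls at 0 and L on each axis.
    src, lis: (x, y, z) source and listener positions inside the room.
    absorption: energy absorption coefficient (0..1), assumed equal for every wall.
    """
    refl = math.sqrt(1.0 - absorption)  # pressure reflection coefficient
    images = [(tuple(src), 1.0)]        # the direct path acts as the "zeroth" image
    for axis in range(3):
        for wall in (0.0, room[axis]):
            img = list(src)
            img[axis] = 2.0 * wall - src[axis]  # mirror the source across this wall
            images.append((tuple(img), refl))
    paths = []
    for pos, r in images:
        d = math.dist(pos, lis)  # unfolded length of source -> wall -> listener
        # 1/d spherical spreading, times the loss at the reflecting wall
        paths.append(Path(delay_s=d / SPEED_OF_SOUND, gain=r / max(d, 1e-9)))
    return sorted(paths, key=lambda p: p.delay_s)

def impulse_response(paths, fs):
    """Place each path as a scaled, delayed impulse at sample rate fs."""
    ir = [0.0] * (round(max(p.delay_s for p in paths) * fs) + 1)
    for p in paths:
        ir[round(p.delay_s * fs)] += p.gain
    return ir

# Usage: a 10 m cube, source and listener 6 m apart on the same line.
paths = first_order_paths((10.0, 10.0, 10.0), (2.0, 5.0, 5.0), (8.0, 5.0, 5.0), absorption=0.3)
for p in paths:
    print(f"{p.delay_s * 1000:6.2f} ms  gain {p.gain:.4f}")
```

Convolving a dry sound with the resulting impulse response gives a crudely reverberant version of it. A production engine would also need higher-order reflections, frequency-dependent materials, diffraction and occlusion, which is the kind of workload Nvidia proposes to offload to the GPU.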

Spatial audio and the use of HRTFs (Head Related Transfer Functions) in virtual reality are already common, with in-SDK options for both Oculus and SteamVR development, not to mention various third-party options such as Realspace audio and 3DCeption. I cannot say with any degree of authority how computationally accurate the physical modelling in any of those options already is, although I have heard how they sound. So whilst the promise of such high levels of accuracy from Nvidia is certainly appealing (and, judging by the video, pretty convincing), perhaps it’s the GPU offloading that marks the idea out as a possible winner. You can hear the results in the embedded video at the very top of this page.
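HRTF-based spatialisation is usually applied by convolving a mono source with a pair of head-related impulse responses (HRIRs), one per ear. The Python sketch below shows only that mechanism. In place of measured HRIRs it uses hypothetical placeholder filters: a pure delay taken from Woodworth’s interaural-time-difference approximation plus a crude level drop at the far ear. It therefore captures timing and loudness cues but none of the spectral filtering that real HRTFs encode, and the head radius and gain curve are assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s
HEAD_RADIUS = 0.0875    # metres; an assumed average head radius

def convolve(x, h):
    """Direct-form linear convolution: len(x) + len(h) - 1 output samples."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

def toy_hrirs(azimuth_rad, fs):
    """Hypothetical placeholder HRIRs (left, right): a delay and a gain per ear.

    azimuth_rad is in [-pi, pi]: 0 = straight ahead, positive = to the right.
    Uses Woodworth's ITD approximation (a / c) * (theta + sin(theta)).
    """
    theta = abs(azimuth_rad)
    if theta > math.pi / 2:
        theta = math.pi - theta  # fold rear sources to the front; no front/back cues here
    itd = HEAD_RADIUS / SPEED_OF_SOUND * (theta + math.sin(theta))
    lag = round(itd * fs)                         # ITD in whole samples
    far_gain = 1.0 - 0.5 * math.sin(theta)        # crude level difference (assumed curve)
    near = [1.0] + [0.0] * lag                    # near ear: no delay, full level
    far = [0.0] * lag + [far_gain]                # far ear: delayed and quieter
    return (far, near) if azimuth_rad >= 0 else (near, far)

def render_binaural(mono, azimuth_rad, fs):
    """Return (left, right) signals for a mono source at the given azimuth."""
    h_left, h_right = toy_hrirs(azimuth_rad, fs)
    return convolve(mono, h_left), convolve(mono, h_right)

# Usage: a single click placed hard right at 48 kHz.
left, right = render_binaural([1.0], math.pi / 2, 48000)
```

Swapping `toy_hrirs` for filters loaded from a measured HRTF dataset would turn this into conventional binaural rendering; the per-ear convolution stays the same.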

However, as with most of the VRWorks suite of APIs and technologies, whatever benefits the company’s GPU-accelerated VR Audio brings will be limited to those with Nvidia GPUs. What’s more, developers will also have to target these APIs specifically in their code. The arguments for doing so, however, may well be attractive enough for developers to do just that, and as an audio enthusiast, I can’t deny that the idea of such potential accuracy in VR soundscapes is pretty appealing to me. We’ll see how it stacks up once it arrives.

In the meantime, if you’re a developer who’s interested in getting an early peek at VRWorks Audio, head over to this sign-up page.