Meta announced it’s shipping out Project Aria Gen 2 to third-party researchers next year, which the company hopes will accelerate development of machine perception and AI technologies needed for future AR glasses and personal AI assistants.

The News

Meta debuted Project Aria Gen 1 back in 2020. The company used the sensor-packed research glasses internally to train various AR-focused perception systems, and in 2024 released them to third-party researchers across 300 labs in 27 countries.

Then, in February, the company announced Aria Gen 2, which Meta says includes improvements in sensing, comfort, interactivity, and on-device computation. Notably, neither generation contains a display of any type, unlike the company’s recently launched Meta Ray-Ban Display smart glasses.

Now the company is taking applications for researchers looking to use the device, which is said to ship to qualified applicants sometime in Q2 2026. That also means applications for Aria Gen 1 are now closed, with remaining requests still to be processed.

Ahead of what Meta calls a “broad” rollout next year, the company is releasing two major resources: the Aria Gen 2 Device Whitepaper and the Aria Gen 2 Pilot Dataset.

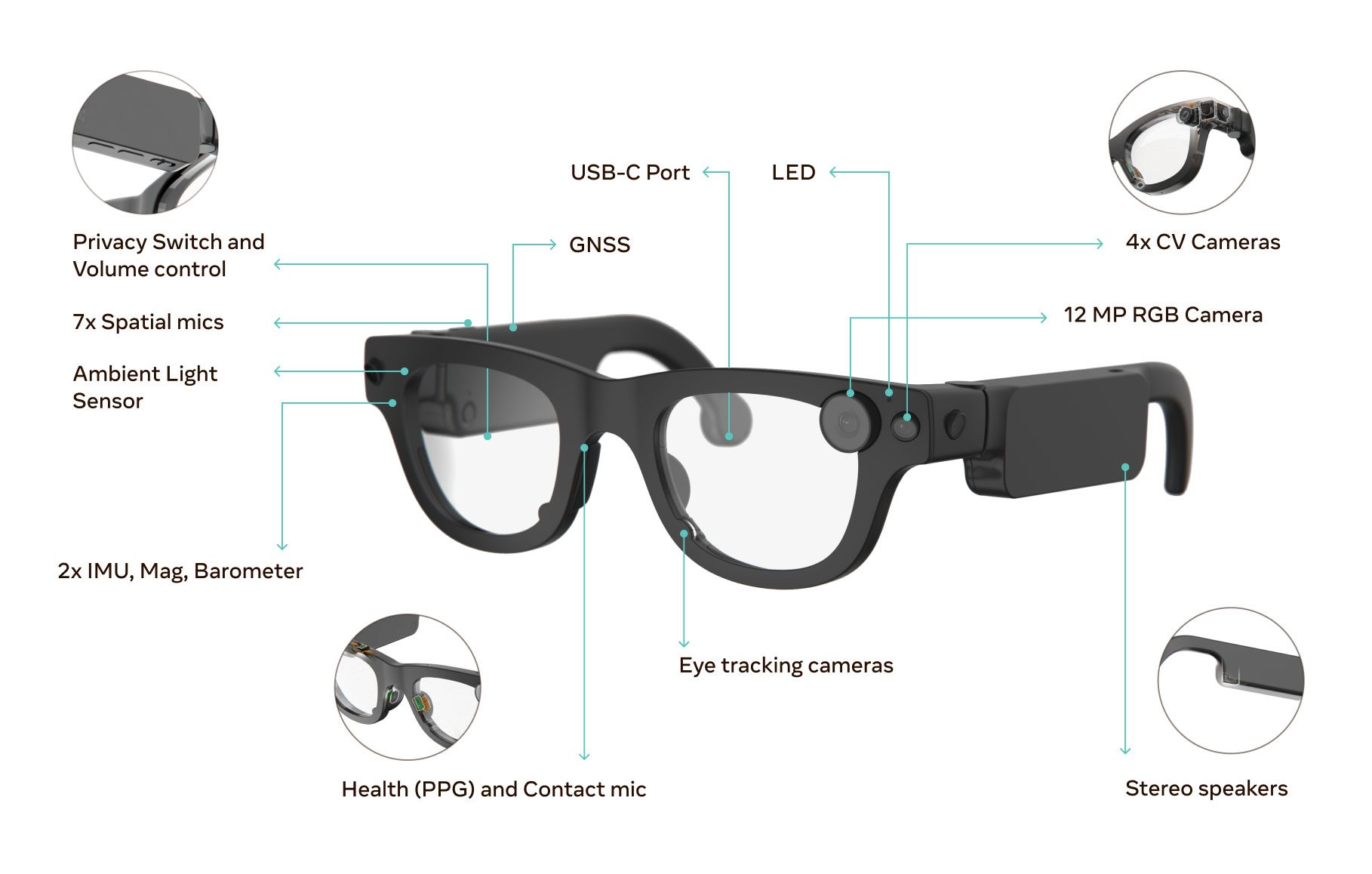

The whitepaper details the device’s ergonomic design, expanded sensor suite, and Meta’s custom low-power co-processor for real-time perception, and compares the abilities of Gen 1 and Gen 2.

Meanwhile, the pilot dataset provides examples of data captured by Aria Gen 2, showing its capabilities in hand and eye tracking, sensor fusion, and environmental mapping. The dataset also includes example outputs from Meta’s own algorithms, such as hand-object interaction and 3D bounding box detection, as well as NVIDIA’s FoundationStereo for depth estimation.

Meta is accepting applications from both academic and corporate researchers for Aria Gen 2.

My Take

Meta doesn’t call Project Aria ‘AI glasses’ like it does with its various generations of Ray-Ban Meta or Meta Ray-Ban Display, or even ‘smart glasses’ like you might expect—even if they’re substantively similar on the face of things. They’re squarely considered ‘research glasses’ by the company.

Cool, but why? Why does the company that already makes smart glasses with and without displays, and cool prototype AR glasses, need to put out what’s substantively the skeleton of a future device?

What Meta is attempting to do with Project Aria is actually pretty smart for a few reasons. Sure, it’s putting out a framework that research teams will build on, but it’s also doing it at a lower cost than outright hiring teams to directly build out future use cases, whatever those might be.

While the company characterizes its future Aria Gen 2 rollout as “broad”, Meta is still filtering for projects based on merit. That gives it a chance to guide research without really having to interface with what will likely be substantially more than 300 teams. All of them will use the glasses to solve problems in how humans can more fluidly interact with an AI system that can see, hear, and know a heck of a lot more about your surroundings than you might at any given moment.

AI is also growing faster than supply chains can keep up, which I think more than necessitates an artisanal pair of smart glasses so teams can get to grips with what will drive the future of AR glasses—the real crux of Meta’s next big move.

Building out an AR platform that may one day supplant the smartphone is no small task, and its iterative steps have the potential to give Meta the sort of market share the company dreamt of way back in 2013 when it co-released the HTC First, which at the time was colloquially called the ‘Facebook phone’.

The device was a flop, partly because the hardware was lackluster but mostly, and I think I’m not alone in saying so, because people didn’t want a Facebook phone in their pockets at any price when the ecosystem had so many other (clearly better) choices.

Looking back at the early smartphones, Apple teaches us that you don’t have to be first to be best, but it does help to have so many patents and underlying research projects that your position in the market is mostly assured. And Meta has that in spades.