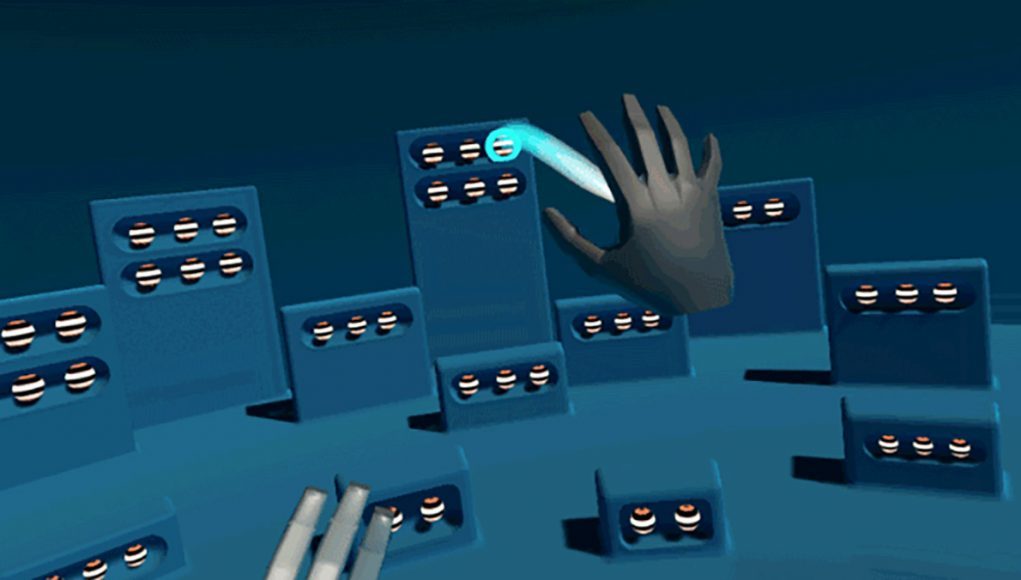

Experiment #2: Telekinetic Powers

While the first experiment handled summoning and dismissing one static object along a predetermined path, we also wanted to explore summoning dynamic physics-enabled objects. What if we could launch the object towards the user, having it land either in their hand or simply within their direct manipulation range? This execution drew inspiration from Force-pulling, Magneto yanking guns out of his enemies’ hands, wizards disarming each other, and many other fantasy powers seen in modern media.

In this experiment, the summonable objects were physics-enabled. This means that instead of sitting up at eye level, like on a shelf, they were most likely resting on the ground. To make selecting them a lower-effort task, we changed the selection-mode hand pose from an overhanded, open, palm-facing-the-target pose to a more relaxed, open, palm-facing-up pose with the fingers pointed toward the target.

To allow for quicker, more dynamic summoning, we decided to condense hovering and selecting into one action. We kept the same underlying raycast selection method and simply removed the need to make a selection gesture. Because the finger-curling summon gesture also stayed the same, the user could quickly select and summon an object by pointing toward it with an open, upward-facing palm, then curling the fingers.
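The condensed interaction can be sketched as a small per-frame state machine. This is only an illustration, not the code behind the experiment: the `Summoner` class, the sphere-shaped objects, the normalized `curl` value, and the `CURL_THRESHOLD` of 0.7 are all assumptions made for the example.

```python
import math


def ray_hits_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to a sphere's surface, or None on a miss."""
    to_center = tuple(c - o for c, o in zip(center, origin))
    along = sum(t * d for t, d in zip(to_center, direction))
    if along < 0:
        return None  # the sphere is behind the hand
    closest_sq = sum(t * t for t in to_center) - along * along
    if closest_sq > radius * radius:
        return None  # the ray passes beside the sphere
    return along - math.sqrt(radius * radius - closest_sq)


def pick_target(origin, direction, objects):
    """Name of the nearest object hit by the ray, or None if nothing is hit."""
    best_name, best_dist = None, math.inf
    for name, (center, radius) in objects.items():
        dist = ray_hits_sphere(origin, direction, center, radius)
        if dist is not None and dist < best_dist:
            best_name, best_dist = name, dist
    return best_name


class Summoner:
    CURL_THRESHOLD = 0.7  # assumed scale: 0 = fingers straight, 1 = fist

    def __init__(self):
        self.target = None

    def update(self, palm_up, curl, origin, direction, objects):
        """Process one frame; return the name of an object to summon, or None."""
        if not palm_up:
            self.target = None  # leaving the pose cancels the selection
            return None
        if curl < self.CURL_THRESHOLD:
            # Open hand: pointing alone selects, with no separate gesture.
            self.target = pick_target(origin, direction, objects)
            return None
        # Fingers curled: summon whatever was being pointed at, once.
        summoned, self.target = self.target, None
        return summoned
```

Clearing `target` after a summon means a held curl fires only once, so the hand has to open again before the next object can be selected.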

Originally, we used the hand as the target for the ballistics calculation that launched a summoned object towards the user. This felt interesting, but having the object always land perfectly on one’s hand felt less physics-based and more like the animated summon. To counter this, we changed the target to an offset in front of the user and applied a slight random torque to the object to simulate an explosive launch. Adding a small shockwave and a point light at the launch point, as well as having each object’s current speed drive its emission, completed the explosive effect.
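One common way to do a ballistics calculation like this is to fix the flight time and solve for the initial velocity. The sketch below assumes that approach; the post does not say how the launch was actually solved. The function names, the y-up gravity vector, the 0.4 m offset, and the torque range are all illustrative assumptions.

```python
import random

GRAVITY = (0.0, -9.81, 0.0)  # assumed: y-up world, metres and seconds


def landing_target(user_position, user_forward, offset=0.4):
    """Point a fixed distance in front of the user (offset is an assumed value)."""
    return tuple(p + f * offset for p, f in zip(user_position, user_forward))


def launch_velocity(start, target, flight_time, gravity=GRAVITY):
    """Initial velocity that carries an object from start to target in flight_time."""
    if flight_time <= 0:
        raise ValueError("flight_time must be positive")
    # From target = start + v*T + 0.5*g*T^2, solved for v on each axis.
    return tuple(
        (t - s) / flight_time - 0.5 * g * flight_time
        for s, t, g in zip(start, target, gravity)
    )


def random_torque(max_magnitude=0.5, rng=random):
    """Small random torque, each component within +/- max_magnitude."""
    return tuple(rng.uniform(-max_magnitude, max_magnitude) for _ in range(3))


def position_at(start, velocity, t, gravity=GRAVITY):
    """Drag-free position after t seconds, used here to check the solve."""
    return tuple(
        s + v * t + 0.5 * g * t * t for s, v, g in zip(start, velocity, gravity)
    )
```

Because the solve only needs a start position, the same function would also work for an object that is already in mid-air: passing its current position as `start` and replacing its velocity would redirect it toward the user.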

Since the interaction had been condensed so much, it was possible to summon one object after another in quick succession before the first had even landed.

Users could even summon objects again, in mid-air, while they were already flying towards the user.

This experiment succeeded in feeling far more dynamic and physics-based than the animated summon. Condensing the stages of interaction made it feel more casual, and the added variation provided by enabling physics made it more playful and fun. One byproduct of this variation was that objects would occasionally land out of reach, but simply summoning them again would bring them close enough. Although we were still using a gesture to summon, this method felt much more physically based than the previous one.