NVIDIA’s latest ‘RTX’ cards are not just an incremental step in performance; they represent a significant new direction for NVIDIA’s approach to real-time rendering. Built on the company’s ‘Turing’ architecture, the RTX cards pave the way for graphics infused with accelerated ray-tracing and artificial intelligence, and also bring VR-specific enhancements over NVIDIA’s prior 10-series GPUs, which are based on the ‘Pascal’ architecture.

The Turing architecture introduces two new types of ‘cores’ (processors designed to quickly handle specific tasks) that are not found in prior GeForce cards: RT Cores and Tensor Cores. RT Cores are designed to accelerate ray-tracing operations, the math that simulates how light bounces around a scene and interacts with objects. Tensor Cores are designed to accelerate tensor operations, which are useful for the kind of AI inferencing done by neural networks and deep learning.

This means that in addition to the usual CUDA-based rendering, RTX cards also have the ability to bring accelerated ray-tracing and AI processes into the rendering mix, which can make for some impressive real-time reflections, among other things. But beyond the potential for better graphics thanks to more realistic lighting, what do RTX cards bring to the table for VR? NVIDIA recently broke down some of the highlights.

Multi-view Rendering for Ultra-wide FOV Headsets

Over the last few years, so-called ‘single-pass stereo’ rendering has become an important part of rendering stereoscopic scenery for VR headsets, given that each eye needs to see a slightly different view of the scene to create an accurate 3D view. Single-pass stereo allows the geometry of the scene to be rendered for both eyes with a single rendering pass, instead of one pass for each eye.

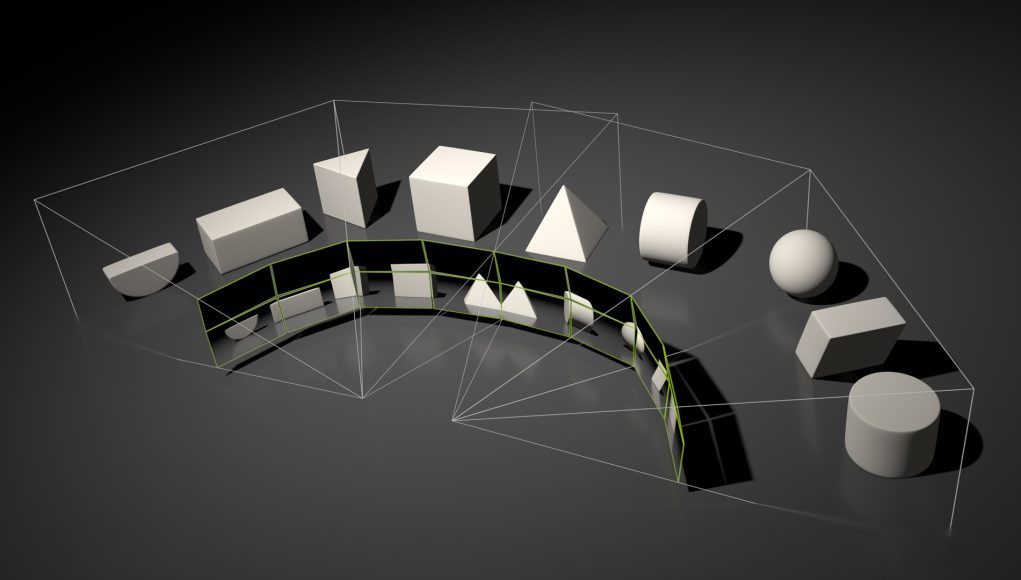

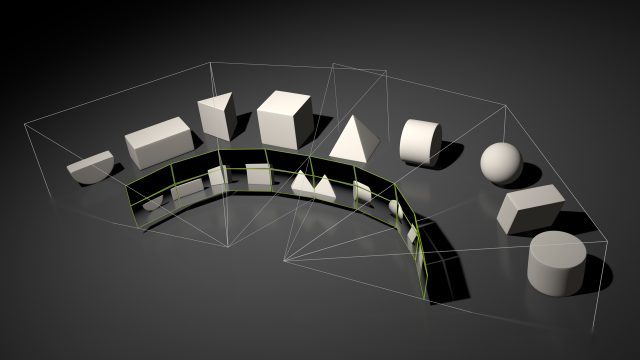

Upcoming ultra-wide FOV headsets like StarVR One typically use displays which are angled relative to one another to achieve their wide view. Rendering such a wide field without distortion is not a trivial task, especially when the content of each eye’s view is quite different given the expanded FOV and the angle of each display.

RTX GPUs are now capable of Multi-view Rendering, which NVIDIA says is like a next-generation version of Single-pass Stereo. Multi-view Rendering bumps the number of possible geometry projections which can be achieved with a single pass from two to four, allowing ultra-wide fields of view to be rendered in a single pass for headsets with angled displays. The company also says that all four projections are now position-independent and able to shift along any axis, which should allow for even more complex display layouts in future headsets.

It appears that each perspective from Multi-view Rendering should also be capable of being segmented for Lens Matched Shading via Simultaneous Multi-projection (a feature introduced with Pascal cards).
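
To make the idea of "four projections from one pass" more concrete, here is a rough CPU-side sketch in Python. It is not NVIDIA's API or implementation: the `VIEWS` layout, the yaw angles, the eye offsets, and the `project` and `render_single_pass` helpers are all hypothetical, chosen only to illustrate walking the scene geometry once and fanning each vertex out to several independently positioned and angled views.

```python
import math

# Hypothetical layout: two angled views per eye. The yaw angles (degrees) and
# eye offsets (meters) are illustrative and not taken from any real headset.
VIEWS = [
    {"name": "left-outer", "yaw_deg": -30.0, "offset_x": -0.032},
    {"name": "left-inner", "yaw_deg": 0.0, "offset_x": -0.032},
    {"name": "right-inner", "yaw_deg": 0.0, "offset_x": 0.032},
    {"name": "right-outer", "yaw_deg": 30.0, "offset_x": 0.032},
]


def project(point, view, half_fov_deg=45.0):
    """Project a world-space point (x, y, z) into one view.

    The view looks down +z when its yaw is zero. Returns normalized (x, y)
    coordinates, or None if the point is behind the view.
    """
    x, y, z = point
    x -= view["offset_x"]                    # each view has its own position
    yaw = math.radians(view["yaw_deg"])
    c, s = math.cos(yaw), math.sin(yaw)
    vx = c * x - s * z                       # component along the view's right axis
    vz = s * x + c * z                       # component along the view's forward axis
    if vz <= 0.0:
        return None
    scale = vz * math.tan(math.radians(half_fov_deg))
    return (vx / scale, y / scale)


def render_single_pass(points, views=VIEWS):
    """Walk the geometry once, emitting every vertex into every view."""
    out = {v["name"]: [] for v in views}
    for p in points:                         # geometry is traversed only once
        for v in views:                      # and fanned out to all projections
            out[v["name"]].append(project(p, v))
    return out
```

On real hardware the fan-out happens inside the GPU's geometry pipeline rather than in a loop like this; the sketch only shows why a per-view position and angle matter for displays that do not share a single plane.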

Variable-rate Shading for Foveated Rendering

Foveated rendering—reducing detail in your peripheral view where you don’t notice it for more efficient rendering—has been talked about for years now, but practical implementation relies heavily on eye-tracking technology which isn’t available in many current-gen headsets. But with eye-tracking expected in a range of next-generation headsets, the need for an efficient method of foveated rendering is increasingly important.

NVIDIA says that their new RTX cards support a feature called Variable-rate Shading which allows for dynamic adjustments to how much shading is done in one part of the scene vs. another. It isn’t clear just yet exactly how this feature works, but it sounds like the next step up from the previous Multi-res Shading feature which worked like a static foveated rendering solution.
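
Since NVIDIA hasn't detailed how the feature works, the following Python sketch is only a guess at the general idea, not a description of Variable-rate Shading itself. It assumes the screen is split into tiles and that each tile gets a coarser shading rate the farther it sits from a gaze point; the function names, the radii, and the 1/2/4 rate steps are all made up for illustration.

```python
import math


def shading_rate_map(tiles_x, tiles_y, gaze, inner=0.15, outer=0.35):
    """Build a per-tile shading rate grid around a gaze point.

    `gaze` is (x, y) in normalized 0..1 screen coordinates. A rate of n means
    one shading sample is shared by an n x n block of pixels. The radii and
    rate steps are hypothetical.
    """
    rates = []
    for ty in range(tiles_y):
        row = []
        for tx in range(tiles_x):
            cx = (tx + 0.5) / tiles_x        # tile center, normalized
            cy = (ty + 0.5) / tiles_y
            d = math.hypot(cx - gaze[0], cy - gaze[1])
            if d < inner:
                row.append(1)                # full detail where the eye is looking
            elif d < outer:
                row.append(2)
            else:
                row.append(4)                # coarsest shading in the periphery
        rates.append(row)
    return rates


def relative_shading_cost(rates):
    """Fraction of shading work compared with shading every pixel."""
    total = sum(1.0 / (r * r) for row in rates for r in row)
    return total / (len(rates) * len(rates[0]))
```

With eye-tracking, `gaze` would be updated every frame; passing a fixed center point instead behaves like the static approach of Multi-res Shading.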

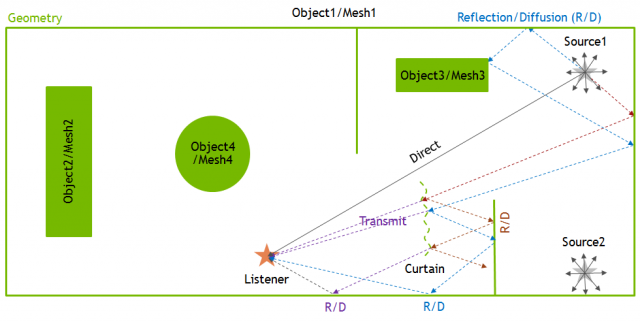

Accelerated Ray-traced Sound Simulation

As it turns out, ray-tracing doesn’t just have to involve light. Ray-tracing can also be used for simulating the complex interactions of sound waves as they bounce around an environment. As NVIDIA points out, many spatial audio implementations for VR today provide accurate positional sound, but generally don’t account for how that sound would interact with the geometry of the environment around the user, which can have a major impact on the accuracy and immersiveness of the scene.

NVIDIA revealed their VRWorks Audio solution—which simulates sound in real-time on the GPU—back in 2016. With the RT Cores in the new RTX cards, the company says that VRWorks Audio implementations are accelerated up to 6x compared to the prior generation of GPUs. As VRWorks Audio demands a share of a GPU’s processing power, the newly accelerated capability might be more attractive to developers as they can retain more of the GPU’s horsepower for graphical tasks than before.
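
As a simplified illustration of why geometry matters for sound, the Python sketch below compares a direct path with a single reflection off one wall, using the classic trick of mirroring the source across the wall. This is not the VRWorks Audio API; the helper names, the single-wall scene, and the inverse-distance gain model are assumptions made for the example.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second in air at roughly room temperature


def direct_path(source, listener):
    """Length of the straight-line path from source to listener."""
    return math.dist(source, listener)


def reflected_path(source, listener, wall_x):
    """Length of a one-bounce path off a wall lying in the plane x = wall_x.

    Mirroring the source across the wall turns the bounce into a straight line.
    """
    mirrored = (2.0 * wall_x - source[0], source[1], source[2])
    return math.dist(mirrored, listener)


def arrival(path_length, absorption=0.0):
    """Return (delay in seconds, relative gain) for a path.

    Gain uses a simple inverse-distance falloff; `absorption` is the fraction
    of energy assumed lost at the wall (hypothetical material value).
    """
    delay = path_length / SPEED_OF_SOUND
    gain = (1.0 - absorption) / max(path_length, 1e-6)
    return delay, gain
```

A real scene has many surfaces and many bounces per source, which is where tracing large numbers of rays (and hardware that accelerates it) comes in.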

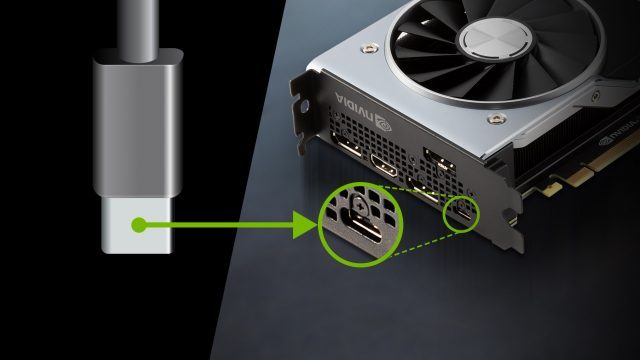

VirtualLink

Then of course there’s VirtualLink, a USB-C based connection standard being pushed by VR’s biggest players. The VirtualLink connector offers four high-speed HBR3 DisplayPort lanes (which are “scalable for future needs”), a USB3.1 data channel for on-board cameras, and up to 27 watts of power, all in a single cable. The standard is said to be “purpose-built for VR,” being optimized for latency and the demands of next-generation headsets.
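
For a rough sense of what four HBR3 lanes can carry, here is some back-of-the-envelope arithmetic in Python. The per-lane rate (8.1 Gbit/s) and the 8b/10b encoding overhead come from the DisplayPort specification rather than from NVIDIA's breakdown, and the headset resolution in the usage note is hypothetical. The estimate ignores blanking intervals and compression, so treat it as a ballpark only.

```python
HBR3_GBPS_PER_LANE = 8.1       # raw DisplayPort HBR3 link rate per lane
ENCODING_EFFICIENCY = 8 / 10   # 8b/10b encoding: 8 payload bits per 10 sent


def link_payload_gbps(lanes=4):
    """Usable video bandwidth of the link in Gbit/s (about 25.92 for 4 lanes)."""
    return lanes * HBR3_GBPS_PER_LANE * ENCODING_EFFICIENCY


def video_gbps(width, height, refresh_hz, bits_per_pixel=24, displays=2):
    """Uncompressed video data rate in Gbit/s, ignoring blanking overhead."""
    return width * height * refresh_hz * bits_per_pixel * displays / 1e9


def fits(width, height, refresh_hz, lanes=4):
    """True if the uncompressed stream fits within the link's payload rate."""
    return video_gbps(width, height, refresh_hz) <= link_payload_gbps(lanes)
```

For example, `fits(2160, 2160, 90)` checks a made-up headset with two 2160 × 2160 panels at 90Hz against the four-lane budget.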

All Turing cards technically support VirtualLink (including Quadro cards), though ports could vary from card to card depending upon the manufacturer. For NVIDIA’s part, the company’s own ‘Founders Edition’ versions of the 2080 Ti, 2080, and 2070 are confirmed to include it.

– – — – –

All of the features mentioned above (except for VirtualLink) are part of NVIDIA’s VRWorks package, and must be specifically implemented by developers or game engine creators. The company says that Variable-rate Shading, Multi-view Rendering and VRWorks Audio SDKs will be made available to developers through a VRWorks update in September.