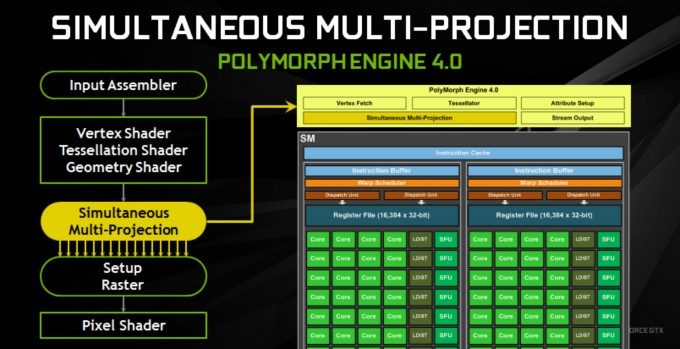

The graphics pipeline in Nvidia’s new ‘Pascal’ GPUs has been rearchitected with features that can significantly enhance VR rendering performance. Here the company explains how Simultaneous Multi-projection and Lens Matched Shading work together to increase VR rendering efficiency.

As the name implies, ‘Simultaneous Multi-projection’ (hereafter ‘SMP’) allows Pascal-based GPUs to render multiple views from the same origin point with just one geometry pass. Rendering multiple views this way would previously have required a pass for each projection, but with SMP up to 16 views can be rendered in a single pass (or up to 32 projections when rendering from two viewpoints for VR).
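The counts quoted above can be checked with a quick sketch. It assumes, following the article's description, that a conventional pipeline needs one geometry pass per projection while SMP needs a single pass. The function names are hypothetical and are not part of any Nvidia API.

```python
# Illustrative arithmetic only; names are hypothetical, not an Nvidia API.
MAX_VIEWS_PER_VIEWPOINT = 16  # per-viewpoint limit quoted in the article


def max_projections(viewpoints: int) -> int:
    """Upper bound on projections for one (mono) or two (VR) viewpoints."""
    if viewpoints not in (1, 2):
        # The article only states limits for one and two viewpoints.
        raise ValueError("only 1 or 2 viewpoints are described")
    return viewpoints * MAX_VIEWS_PER_VIEWPOINT


def geometry_passes(projections: int, use_smp: bool) -> int:
    """Geometry passes needed: one per projection without SMP, one with it."""
    if projections < 1:
        raise ValueError("need at least one projection")
    return 1 if use_smp else projections


if __name__ == "__main__":
    vr = max_projections(2)
    print(vr, geometry_passes(vr, use_smp=False), geometry_passes(vr, use_smp=True))
```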

SMP can be used specifically for VR to achieve what Nvidia calls ‘Lens Matched Shading’ (hereafter ‘LMS’). The goal of LMS is to avoid rendering pixels that are discarded during the distortion process and so never reach the final view sent to the VR headset’s display.

As the company explains, traditionally a single planar viewpoint is rendered and then distorted to counteract the opposite distortion imposed by the headset’s lenses. The distortion process, however, ends up cutting out a significant number of pixels from the initial render, wasting rendering power that could instead be used elsewhere.

LMS aims to avoid rendering excess pixels in the first place by using SMP to break up the view for each eye into four quadrants that better approximate the final distorted view from the start. The result is that the GPU renders fewer pixels that are destined to be discarded, freeing that horsepower for other work.
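To make the quadrant idea concrete, here is a minimal sketch. The article gives no formulas, so the warp below is an assumption chosen for illustration: each quadrant divides the planar coordinates by 1 + a|x| + b|y|, which leaves the center of the view untouched and squeezes the periphery. The coefficients `a` and `b` and all function names are hypothetical, and any ratio it produces is a property of this toy model, not a figure from Nvidia.

```python
# Toy model of a per-quadrant projection; not Nvidia's implementation.

def lms_warp(x, y, a, b):
    """Map planar coordinates in [-1, 1] to the squeezed per-quadrant view.

    Using |x| and |y| gives each of the four quadrants its own linear term,
    so the divisor grows toward the edges of the view.
    """
    w = 1.0 + a * abs(x) + b * abs(y)
    return x / w, y / w


def polygon_area(points):
    """Shoelace formula for the area of a simple polygon."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:] + points[:1]):
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0


def rendered_area_ratio(a, b):
    """Warped area relative to the planar view, at equal center pixel density."""
    # Inside one quadrant the warp is projective, so straight edges stay
    # straight and the four corners fully describe the warped quadrant.
    corners = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    quadrant = [lms_warp(x, y, a, b) for x, y in corners]
    return polygon_area(quadrant)  # the planar quadrant has area 1.0


if __name__ == "__main__":
    for coeff in (0.0, 0.25, 0.5):  # hypothetical coefficient values
        print(coeff, round(rendered_area_ratio(coeff, coeff), 3))
```

With `a = b = 0` the function returns 1.0, the unmodified planar view. Larger coefficients shrink the rendered area, which is the effect LMS is after: fewer pixels shaded in regions the lens distortion would throw away.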

Nvidia says that LMS can result in up to a 50% increase in throughput available for pixel shading. However, these features don’t happen automatically simply by using a Pascal-based GPU; in order to take advantage of SMP and LMS, applications need to be built against Nvidia’s VRWorks SDK.
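One rough way to read the 50% figure is to assume that pixel shading cost scales linearly with the number of pixels shaded. That is an assumption of this sketch, not a statement from Nvidia, and the function name is hypothetical.

```python
def pixel_fraction_for_gain(throughput_gain: float) -> float:
    """Fraction of the original pixels shaded for a given throughput gain.

    Assumes shading cost is proportional to pixel count, so a gain of g
    means the same budget covers (1 + g) times as much useful work.
    """
    if throughput_gain < 0:
        raise ValueError("gain must be non-negative")
    return 1.0 / (1.0 + throughput_gain)


if __name__ == "__main__":
    print(round(pixel_fraction_for_gain(0.5), 3))
```

Under that assumption, a 50% gain corresponds to shading about two-thirds as many pixels as the planar render.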

Disclosure: Nvidia paid for travel and accommodation for one Road to VR correspondent to attend an event where information for this article was gathered. Nvidia also provided Road to VR with a GTX 1080 GPU for review.