Real-time Object Classification

Apple’s ARKit and Google’s ARCore let you do some pretty nifty and novel AR-like things on your smartphone, but for the most part these systems are limited to understanding flat surfaces like floors and walls. That’s why the vast majority of the AR apps and demos out there right now for iOS take place on a floor or table.

Why floors and walls? Because they’re easy to classify. One flat floor or wall is geometrically identical to any other, and it can reliably be assumed to extend in all directions until it intersects another plane.
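To make ‘easy to classify’ concrete: a flat surface is fully described by a unit normal vector and an offset. Once three tracked 3D points agree on a plane, every other point can be tested against it with a single dot product, and the plane is treated as extending without limit. The sketch below is a minimal RANSAC plane fit in plain Python. It assumes the tracking system has already produced a sparse cloud of 3D feature points in meters; the function names, the 1 cm `threshold`, and the iteration count are hypothetical choices, and this is not how ARKit or ARCore actually implement plane detection.

```python
import random
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit normal n, offset d) such that n·x + d = 0


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def plane_from_points(p: Vec3, q: Vec3, r: Vec3) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    n = cross(sub(q, p), sub(r, p))
    length = dot(n, n) ** 0.5
    if length < 1e-9:
        return None
    unit = (n[0] / length, n[1] / length, n[2] / length)
    return unit, -dot(unit, p)


def distance_to_plane(point: Vec3, plane: Plane) -> float:
    # The plane is unbounded: distance depends only on the normal direction,
    # which encodes the "continues in all directions" assumption.
    n, d = plane
    return abs(dot(n, point) + d)


def ransac_plane(points: List[Vec3], threshold: float = 0.01,
                 iterations: int = 200, seed: int = 0) -> Tuple[Optional[Plane], List[Vec3]]:
    """Return the plane supported by the most points within `threshold` meters."""
    rng = random.Random(seed)
    best_plane: Optional[Plane] = None
    best_inliers: List[Vec3] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue  # degenerate (collinear) sample, try again
        inliers = [p for p in points if distance_to_plane(p, plane) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


# Example: a 5 x 5 grid of floor points (10 cm apart) plus three points on an object.
floor = [(x * 0.1, y * 0.1, 0.0) for x in range(5) for y in range(5)]
clutter = [(0.2, 0.2, 0.1), (0.1, 0.3, 0.25), (0.4, 0.1, 0.05)]
plane, inliers = ransac_plane(floor + clutter)
print(plane, len(inliers))
```

The fit recovers the floor from its 25 grid points and rejects the three object points. Because the distance test never bounds the plane, a point several meters from the sampled patch still counts as ‘floor’ if it lies at the same height, which is exactly the assumption that makes floors and walls cheap to handle.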

Note that I used the word understand rather than sense or detect. That’s because, while these systems may be able to ‘see’ the shapes of objects other than floors and walls, they cannot presently understand them.

Take a cup, for instance. When you look at a cup, you see far more than just a shape. You already know a lot about the cup. How much? Let’s review:

- You know that a cup is an object that’s distinct from the surface upon which it sits

- You know, without actually looking into the top of the cup, that it contains an open volume that can hold liquids and other objects

- You know that the open volume inside the cup doesn’t extend below the surface on which it sits

- You know that people drink from cups

- You know that a cup is lightweight and can be easily knocked over, resulting in a spill of whatever is inside

I could go on… The point is that a computer knows none of this. It just sees a shape, not a cup. Unless it sweeps its view across the cup’s interior to map the shape in its entirety, the computer can’t even assume that there’s an open interior volume. Nor does it know that the cup is a separate object from the surface it sits on. But you know all of that, because it’s a cup.

Getting computer vision to understand ‘cup’, rather than just see a shape, is a non-trivial problem. This is why, for years and years, we’ve seen AR demos where people attach fiducial markers to objects to enable more nuanced tracking and interactions.

Why is it so hard? The first challenge here is classification. Cups come in thousands of shapes, sizes, colors, and textures. Some cups have special properties and are made for special purposes (like beakers), which means they are used for entirely different things in very different places and contexts.

Think about the challenge of writing an algorithm that could help a computer understand all of these concepts, just so it can recognize a cup when it sees one. Think about the challenge of writing code to explain to the computer the difference between a cup and a bowl from sight alone.

That’s a massive problem to solve for just one simple object out of perhaps thousands or hundreds of thousands of fairly common objects.
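As a toy illustration of how quickly hand-written rules run into trouble, the sketch below tries to tell cups from bowls using two measurements and a handle flag. The `Container` type, the `naive_is_cup` rule, and every dimension in it are hypothetical, made up purely for illustration; no real classifier works this way.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str
    height_cm: float
    rim_diameter_cm: float
    has_handle: bool


def naive_is_cup(obj: Container) -> bool:
    # Hypothetical hand-written rule: a cup is at least as tall as it is wide,
    # or it has a handle. Anything else is assumed to be a bowl.
    return obj.height_cm >= obj.rim_diameter_cm or obj.has_handle


# Made-up objects chosen to probe the rule.
mug = Container("mug", 10.0, 8.0, True)
cereal_bowl = Container("cereal bowl", 6.0, 15.0, False)
teacup = Container("handleless teacup", 5.0, 7.0, False)  # a cup the rule rejects
vase = Container("vase", 25.0, 8.0, False)                # not a cup, but accepted

for obj in (mug, cereal_bowl, teacup, vase):
    print(f"{obj.name}: {'cup' if naive_is_cup(obj) else 'not a cup'}")
```

The mug and cereal bowl come out right, but the handleless teacup is rejected and the vase is accepted. Each patch, say an upper height limit to exclude the vase, just moves the boundary until the next object (a beaker, a two-handled soup bowl) finds a new hole.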

Today’s smartphone-based AR happens within your environment, but hardly interacts with it. That’s why all the AR experiences you’re seeing on smartphones today are relegated to floors and walls—it’s impossible for these systems to convincingly interact with the world around us, because they see it, but they don’t understand it.

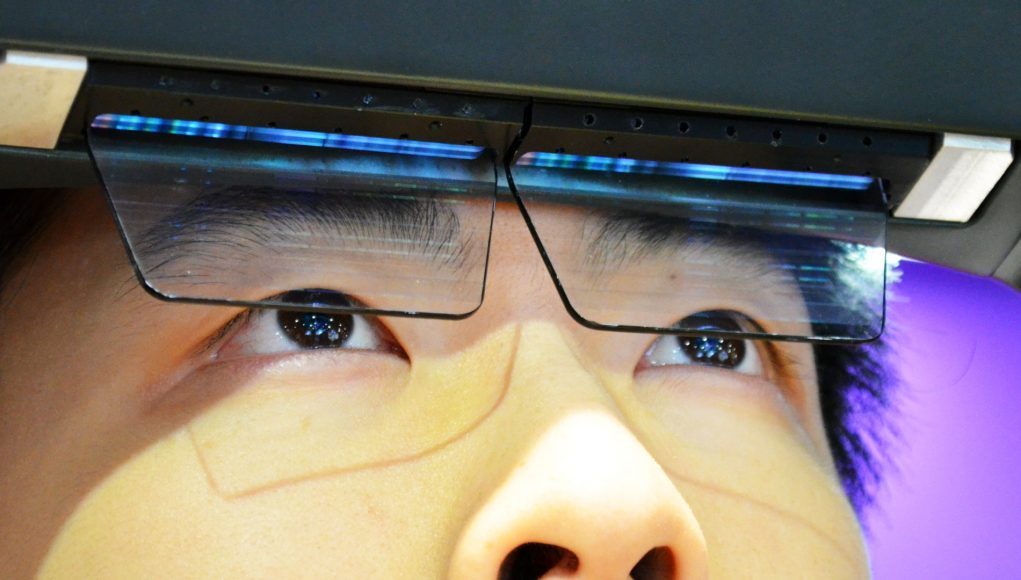

For the sci-fi AR that everyone imagines—where my AR glasses show me the temperature of the coffee in my mug and put a floating time-remaining clock above my microwave—we’ll need systems that understand way more about the world around us.

So how do we get there? The answer seems like it must involve so-called ‘deep learning’. Hand-writing classification algorithms for every object type, or even just the common ones, is a massively complex task. But we may be able to train neural networks, which learn by adjusting their internal parameters from large sets of labeled examples rather than by following hand-written rules, to reliably detect many common objects around us.
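The difference between hand-writing rules and training can be shown at the smallest possible scale: a single sigmoid neuron (equivalently, logistic regression) that learns a cup-versus-bowl boundary from labeled examples by gradient descent. This is a minimal sketch, not a real object detector; production systems use deep convolutional networks trained on large image datasets, whereas here the two input features (height-to-rim ratio and a handle flag), the training examples, and the learning-rate and epoch settings are all hypothetical.

```python
import math
from typing import List, Tuple

# Each example: ([height / rim diameter, has_handle], label) with 1 = cup, 0 = bowl.
Example = Tuple[List[float], int]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_neuron(data: List[Example], epochs: int = 2000,
                 lr: float = 0.5) -> Tuple[List[float], float]:
    """Fit weights and bias with stochastic gradient descent on log loss."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log loss w.r.t. the neuron's weighted input
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w: List[float], b: float, x: List[float]) -> int:
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0


# Hypothetical labeled examples: no thresholds are written by hand.
training: List[Example] = [
    ([1.2, 1.0], 1), ([1.0, 1.0], 1), ([1.5, 0.0], 1), ([1.1, 1.0], 1),
    ([0.4, 0.0], 0), ([0.5, 0.0], 0), ([0.3, 0.0], 0), ([0.45, 0.0], 0),
]
w, b = train_neuron(training)
print([predict(w, b, x) for x, _ in training])
```

The decision boundary comes from the data: adding new labeled examples and retraining adjusts it, instead of someone editing an `if` statement. Deep networks apply the same idea to raw pixels with millions of parameters.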

Some of this work is already underway and appears quite promising. Consider, for example, a video demonstration in which a computer somewhat reliably distinguishes between arbitrary people, umbrellas, traffic lights, and cars.

The next step is to vastly expand the library of possible classifications and then to fuse this image-based detection with real-time environmental mapping data gathered from AR tracking systems. Once AR systems begin to understand the world around us, we can start to tackle the challenge of adaptive design for AR experiences, which just so happens to be our next topic.
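One common way to fuse the two, sketched here under stated assumptions, is to take a 2D detection from an image classifier, look up the depth at the center of its bounding box from the AR system’s map, and back-project that pixel into 3D with the pinhole camera model so the label can be anchored in the world. The `Intrinsics` and `Detection` types, the `depth_at` lookup, and the camera-to-world pose (`r`, `t`) are hypothetical stand-ins rather than any ARKit or ARCore API, and the sketch uses the common computer-vision camera convention (x right, y down, z forward), which differs from some AR frameworks.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels


@dataclass
class Detection:
    label: str
    box: Tuple[float, float, float, float]  # (u_min, v_min, u_max, v_max) in pixels
    confidence: float


def backproject(u: float, v: float, depth: float, k: Intrinsics) -> Vec3:
    """Invert the pinhole projection u = fx * X / Z + cx (and likewise for v)."""
    return ((u - k.cx) * depth / k.fx, (v - k.cy) * depth / k.fy, depth)


def camera_to_world(p: Vec3, r: Sequence[Sequence[float]], t: Vec3) -> Vec3:
    """Apply a rigid transform: world = R @ p + t."""
    return tuple(sum(r[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def anchor_detection(det: Detection, depth_at: Callable[[float, float], float],
                     k: Intrinsics, r: Sequence[Sequence[float]],
                     t: Vec3) -> Tuple[str, Vec3]:
    """Place a 2D detection's label at a 3D world position."""
    u = (det.box[0] + det.box[2]) / 2
    v = (det.box[1] + det.box[3]) / 2
    # Hypothetical: depth_at stands in for a hit test or depth-map lookup.
    return det.label, camera_to_world(backproject(u, v, depth_at(u, v), k), r, t)


# Example: a cup detected right of image center, 2 m from the camera; the camera
# sits at (1.0, 0.0, 0.5) in the world with its axes aligned to the world axes.
k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
cup = Detection("cup", (380.0, 200.0, 460.0, 280.0), 0.9)
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
label, position = anchor_detection(cup, lambda u, v: 2.0, k, identity, (1.0, 0.0, 0.5))
print(label, position)
```

Using the box center is the simplest choice, but it can land on the background for thin or hollow objects (the open top of a cup, for instance), so a more careful version might take the median depth over the whole box.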