DeepMind, Google’s AI research lab, announced Genie 3 last August, showing off an AI system capable of generating interactive virtual environments in real time. Now, Google has released an experimental prototype that Google AI subscribers can try today. Granted, you can’t generate a VR world on the fly just yet, but we’re getting tantalizingly close.

The News

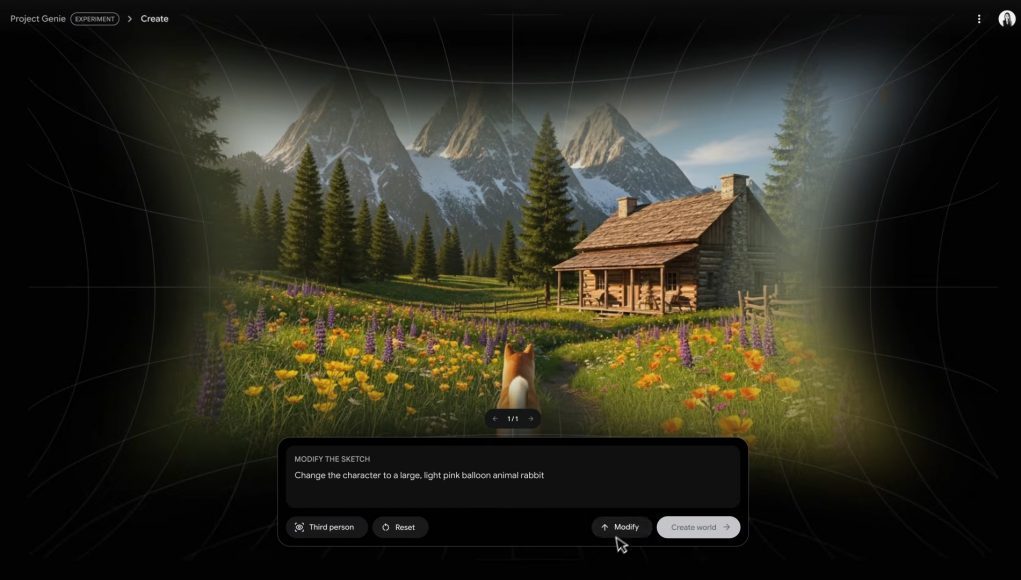

Project Genie is what Google calls an “experimental research prototype,” so it isn’t exactly the ‘AI game machine’ of your dreams just yet. Essentially, it lets users create, explore, and modify interactive virtual environments through a web interface.

Much like existing image and video generators, the system takes a text prompt and/or uploaded reference images as input, although Project Genie takes this a few steps further.

Instead of one, Project Genie has two main prompt boxes: one for the environment and one for the character. A third prompt box lets you modify the initial look before fully generating the environment (e.g., make the sword bigger or give the trees fall foliage).

As an early research system, Project Genie has limitations, Google says in a blog post. Generated environments may not closely match real-world physics or prompts, character control can be inconsistent, sessions are limited to 60 seconds, and some previously announced features are not yet included.

And for now, the only thing you can output is a video of the experience, although you can explore and remix other ‘worlds’ available in the gallery.

Project Genie is now rolling out to Google AI Ultra subscribers in the US aged 18 and over, with broader availability planned for a later date. You can find out more here.

My Take

There are a lot of hurdles to get over before we can see anything like Project Genie running on a VR headset.

One of the most important is undoubtedly cloud streaming. Cloud gaming does exist on VR headsets, but it isn’t great right now, since latency varies widely depending on how close you are to your service’s data center. On top of that, the big names in cloud gaming today (e.g. NVIDIA GeForce Now, Xbox Cloud Gaming) are generally geared towards flatscreen games. For those, the bar for render and input latency is much lower than it is for VR headsets, which generally require a motion-to-photon latency of 20ms or less to avoid user discomfort.

And that’s not even taking into account that Project Genie would somehow need to render the world with stereoscopy in mind. That may present its own problems, since the system would need to generate two distinct points of view that resolve into a single, solid 3D picture.

As far as I understand, world models created in Project Genie are probabilistic, i.e. objects can behave slightly differently each time. That is part of the reason Genie 3 can only support a few minutes of continuous interaction at most. Genie 3’s world generation also tends to drift from prompts, which can produce undesired results.

So while it’s unlikely we’ll see a VR version of this in the very near future, I’m excited to see the baby steps leading to where it could eventually go. The thought of being able to casually order up a world on the fly, Holodeck-style, that I can explore (be it past, present, or any fiction of my choosing) feels so much more interesting to me from a learning perspective. One of my most-used VR apps to date is Google Earth VR, and I can only imagine a more detailed and vibrant version of that to help me learn foreign languages, time travel, and tour the world virtually.

Before we even get that far, though, there’s a distinct possibility that the Internet will be overrun by ‘game slop’, which feels like asset flipping taken to the extreme. It will also likely expose game developers to the same struggles other digital artists are facing right now with AI sampling and recreating copyrighted works, albeit on a whole new level (GTA VI, anyone?).

That, and I can’t shake the feeling that the future is shaping up to be a very odd, but hopefully also a very interesting and not entirely terrible, place. I can imagine a future wherein photorealistic, AI-driven environments go hand-in-hand with brain-computer interfaces (BCI), two subjects Valve has been researching for years, to serve up the virtual reality I’m actually waiting for.