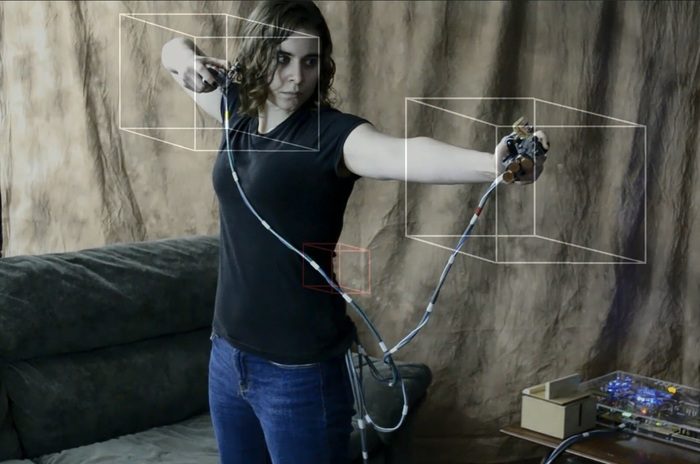

Q: I am curious about your “location zones” that you tout for setting up motion triggers like pulling back a bow. The video of this showed boxes that the controller needed to enter to trigger the event. It also showed an approximation of the user’s body, and the zones tracked this body. How are you sensing the user? Can the ultrasonic tech detect the general user “blob”?

A: I call the “location zones” Trigger Boxes. The key to Trigger Boxes is the controller splitting.

When the controller is together, motion is usually ignored. When you split the controller apart, its current location is recorded. I call it the “Origin.” This tells me where the controller was at the moment it was split, so I don’t need to know where the player is. Trigger Boxes are positioned relative to the Origin. If you split the controller over there, the Trigger Boxes are positioned over there as well. Split it over here and the Trigger Boxes go over here.

What if you’re in a “movement” mode, where you move around the room to move the character? As you move around the room, the Origin moves along with you. This, in turn, moves the Trigger Boxes.
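To make the Origin idea concrete, here is a minimal Python sketch under several assumptions: each Trigger Box is modeled as an axis-aligned box stored as an offset from the Origin, positions are plain (x, y, z) tuples in arbitrary units, and the names `TriggerBox`, `SplitTracker`, `on_split`, `shift_origin`, and `active_boxes` are hypothetical. It illustrates the behavior described above, not the controller’s actual firmware.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in arbitrary units (assumption)


@dataclass(frozen=True)
class TriggerBox:
    """Hypothetical axis-aligned box whose center is stored relative to the Origin."""
    name: str
    offset: Vec3     # box center relative to the Origin
    half_size: Vec3  # half-extent along x, y, z

    def contains(self, origin: Vec3, point: Vec3) -> bool:
        # Place the box in room space by adding the Origin, then test each axis.
        return all(
            abs(p - (o + c)) <= h
            for p, o, c, h in zip(point, origin, self.offset, self.half_size)
        )


class SplitTracker:
    """Records the Origin when the controller splits and hit-tests Trigger Boxes."""

    def __init__(self, boxes: List[TriggerBox]) -> None:
        self.boxes = boxes
        self.origin: Optional[Vec3] = None  # None while the halves are together

    def on_split(self, controller_pos: Vec3) -> None:
        # The Origin is wherever the controller happens to be when it is split apart.
        self.origin = controller_pos

    def on_rejoin(self) -> None:
        # In this sketch, motion is ignored again once the halves are rejoined.
        self.origin = None

    def shift_origin(self, delta: Vec3) -> None:
        # Movement mode: the Origin, and with it every box, travels with the player.
        if self.origin is not None:
            x, y, z = self.origin
            dx, dy, dz = delta
            self.origin = (x + dx, y + dy, z + dz)

    def active_boxes(self, half_pos: Vec3) -> List[str]:
        # Names of the boxes the tracked controller half is currently inside.
        if self.origin is None:
            return []
        return [b.name for b in self.boxes if b.contains(self.origin, half_pos)]


if __name__ == "__main__":
    tracker = SplitTracker([TriggerBox("pulled_back", (0.0, 0.0, 0.3), (0.1, 0.1, 0.1))])
    tracker.on_split((1.0, 1.2, 0.0))
    print(tracker.active_boxes((1.0, 1.2, 0.35)))  # prints ['pulled_back']
```

Because every box is stored relative to the Origin, splitting the controller somewhere else, or shifting the Origin in movement mode, relocates all of the boxes at once without the system ever needing to know where the player’s body is.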

Not everyone is the same height or has the same size arms. Players can also create a Player Profile that adjusts the locations of the Trigger Boxes, so the Trigger Boxes are positioned to match each player.
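A Player Profile could plug into the same kind of model. The sketch below assumes, purely for illustration, that a profile stores the player’s arm length and the reference arm length the boxes were laid out for, and that box offsets and sizes scale linearly with their ratio. `PlayerProfile`, `reach_scale`, and `fit_to_player` are hypothetical names, and the real Player Profile may adjust boxes in other ways (height, for example).

```python
from dataclasses import dataclass, replace
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in arbitrary units (assumption)


@dataclass(frozen=True)
class TriggerBox:
    name: str
    offset: Vec3     # box center relative to the Origin, sized for a reference player
    half_size: Vec3  # half-extent along x, y, z


@dataclass(frozen=True)
class PlayerProfile:
    """Hypothetical profile: only arm length is modeled here."""
    arm_length: float            # this player's arm length (any consistent unit)
    reference_arm_length: float  # arm length the boxes were originally laid out for

    @property
    def reach_scale(self) -> float:
        return self.arm_length / self.reference_arm_length


def fit_to_player(box: TriggerBox, profile: PlayerProfile) -> TriggerBox:
    """Return a copy of `box` scaled about the Origin to match this player's reach."""
    s = profile.reach_scale
    ox, oy, oz = box.offset
    hx, hy, hz = box.half_size
    return replace(
        box,
        offset=(ox * s, oy * s, oz * s),
        half_size=(hx * s, hy * s, hz * s),
    )


if __name__ == "__main__":
    draw = TriggerBox("pulled_back", offset=(0.0, 0.0, 0.5), half_size=(0.125, 0.125, 0.125))
    shorter_player = PlayerProfile(arm_length=0.5, reference_arm_length=1.0)
    print(fit_to_player(draw, shorter_player).offset)  # prints (0.0, 0.0, 0.25)
```

Scaling about the Origin, rather than about some fixed point in the room, keeps each adjusted box anchored to wherever the controller was split.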

There’s a chapter video about Trigger Boxes: Chapter 12, Boxes in Space.

Q: The Kickstarter goal seems unattainable. Have you considered cancelling the campaign and starting over with a lower goal, say $100k?

A: The Kickstarter goal is based on what it would take to get all of the setup, design, and tooling done and deliver a quality product. Some of the components I needed didn’t exist, so I had to invent them. These parts must be manufactured along with everything else.

I’m not just delivering a motion tracking dev kit that backers can own while they dream about the day some games become available for it. I’m delivering a system that takes this data and translates it into something that existing consoles and games can understand. There are already games out there for it, because it works with any game.

This means that there’s a lot more involved in the Mad Genius Controller. If a dev kit plugs into your PC, most of the processing is done in the PC. I’m plugging into a console without an API, so I have to provide my own processing power.

I look at a lot of Kickstarter projects, and I can’t imagine how some of them are going to deliver if they only reach their minimum. I’ve seen some that had to cut back on the quality of their final product in order to deliver anything at all. I don’t want to do that.

Most of the cost of producing something like this lies in the fixed project costs. There are custom components, setup costs for the plastics, the FPGA design, software development and on and on and on. I’m going to need more developers to do this right and they don’t work for free. I need somewhere to put everyone and that’s not free either. These costs have to be spread over the number of controllers we’re making. A smaller number means each controller has to carry a larger portion of this cost.

If you make millions of something, most of your cost is the components and assembly. If you make thousands, most of the cost will be those annoying fixed project costs.

If I reduce the goal to $100,000, what happens if the Kickstarter only hits that minimum? Now I’m producing even fewer units, but my fixed project costs are the same. The cost of each controller is now even higher, but I have even less funding. The only way to deliver is to increase the cost of each controller, and it quickly gets ridiculous.

The numbers weren’t where I’d like them to be, but they are what they are. It turns out that to deliver the controller at a reasonable price, I also need to make a reasonable quantity of them. The goal is set based on the quantity of controllers I need to build in order to keep the cost down.
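The cost argument above comes down to one relation: per-unit cost = variable cost + fixed costs / units. The Python sketch below uses made-up numbers (a hypothetical $400,000 in fixed project costs and $50 per controller in components and assembly), not the campaign’s actual figures, to show how a smaller run raises the share each controller carries, and how many units a target price implies.

```python
import math


def unit_cost(fixed_costs: float, variable_cost: float, units: int) -> float:
    """Cost each controller must carry when fixed costs are spread over `units`."""
    if units <= 0:
        raise ValueError("units must be positive")
    return variable_cost + fixed_costs / units


def min_units_for_price(fixed_costs: float, variable_cost: float, target_price: float) -> int:
    """Smallest production run whose per-unit cost does not exceed `target_price`."""
    margin = target_price - variable_cost  # what each unit contributes toward fixed costs
    if margin <= 0:
        raise ValueError("target price must exceed the per-unit variable cost")
    return math.ceil(fixed_costs / margin)


if __name__ == "__main__":
    FIXED = 400_000.0  # hypothetical: tooling, FPGA design, software, facilities
    VARIABLE = 50.0    # hypothetical: components and assembly per controller
    for n in (1_000_000, 10_000, 2_000):
        print(n, unit_cost(FIXED, VARIABLE, n))
    print(min_units_for_price(FIXED, VARIABLE, 90.0))
```

In this toy model, dropping from 10,000 units to 2,000 raises each controller’s cost from $90 to $250 even though nothing about the hardware changed, while at a million units the fixed costs almost vanish and components dominate.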

So, no. I can’t do another Kickstarter with a lower goal. If I could make this work with a lower goal I would have set the goal there to begin with.

Q: It’s the same price as STEM, which is cutting-edge tech, so why would I buy this for PC VR instead of STEM?

A: Ultrasonic locating methods typically have an accuracy in the range of ¼” to ½”. I found a way to get 1/100th of an inch. I think that’s cutting edge, but that’s just me.

I designed this system to add motion to console gaming without taking away traditional controls. Everything was optimized for that environment. I didn’t want to build one more dev kit that only programmers could use, so I added the motion translation and Game Profiles so that a gamer could use it right out of the box without writing any code.

So, what does this have to do with VR? Nothing. Well, at first. Just about every other YouTube comment said we should try this with the Oculus, so I got one.

Most people were using the Oculus seated to avoid breaking bones on the furniture. This kept the player facing in one direction much like the situation with console play. With the player seated the situation isn’t all that different from the console play I’d originally designed the system for.

The controller performed extremely well as you can see in the video. Surprisingly well! Well enough that with a little tweaking it would be a good option for a controller.

Is it ready to be a full-VR-it’s-like-I-have-a-holodeck-in-my-room controller where I’m walking around and turning in every direction? Not in its current form. But VR isn’t there yet, either.

Quite a few of the PC games written for head-mounted displays have been using an Xbox controller. The mouse and keyboard can be difficult when you can’t see them. An Xbox controller you can hang onto. The Mad Genius Controller can connect to anything by presenting itself as an Xbox controller. This means you can use it with a PC game without a software API. If the game works with a game controller it works with the Mad Genius Controller.

This means that all you need to do to add motion to a game is set up the Game Profile through our motion design app. This configures the Mad Genius Controller to match the game, and you can get an idea of how the motion will feel with very little time invested.
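A Game Profile can be thought of as a table from motion events to the gamepad inputs a game already understands. The sketch below is hypothetical: the `GameProfile` class, the Trigger Box names, and the plain-string input names are illustrations rather than the motion design app’s real format, and actually presenting as an Xbox controller to a console or PC is out of scope. It shows one design choice consistent with the answers above: physical button presses pass straight through, and motion only adds inputs on top, so traditional controls keep working.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Set

# Buttons and triggers of an Xbox-style gamepad (simplified: the analog
# triggers LT/RT are treated as simply pressed or released in this sketch).
XBOX_INPUTS = {"A", "B", "X", "Y", "LB", "RB", "LT", "RT"}


@dataclass
class GameProfile:
    """Hypothetical Game Profile: which Trigger Box drives which gamepad input."""
    game: str
    box_to_input: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject mappings to inputs the emulated gamepad does not have.
        unknown = set(self.box_to_input.values()) - XBOX_INPUTS
        if unknown:
            raise ValueError(f"unknown inputs: {sorted(unknown)}")

    def inputs_for(self, active_boxes: Iterable[str], physical: Iterable[str] = ()) -> Set[str]:
        """Combine physical presses with inputs triggered by active Trigger Boxes."""
        pressed = set(physical)  # traditional controls pass straight through
        pressed.update(
            self.box_to_input[box] for box in active_boxes if box in self.box_to_input
        )
        return pressed


if __name__ == "__main__":
    archery = GameProfile("archery_game", {"pulled_back": "RT", "raised": "LB"})
    print(sorted(archery.inputs_for(["pulled_back"], physical=["A"])))  # prints ['A', 'RT']
```

Since the output is just ordinary gamepad inputs, the game never needs to know that motion was involved, which is what lets a profile like this be tried without touching the game or writing API code.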

Does this give you 1:1 hand motions and things? No, of course not. If you want that you can go the dev kit route and get all of the tracking data that the controller provides. The motion design app can let you test the waters before you commit to a lot of coding for the API.

It’s really a question of what you want to do. Full VR is still quite a ways away. It has a lot of difficulties to overcome. I’m sure these difficulties will be overcome but I’d like to play some games in the meantime. I’d want to play lots of different games, including the favorites I already have, with the best motion control possible. That’s why I built this thing, because I’d like to have fun with this stuff now.

This is an important difference in what I’m trying to do. One of VR’s biggest hurdles is that few non-developers will want it until lots of games are available, and few developers are going to write these games until a large number of gamers already own the VR gear. I don’t know how this is going to work out, but I don’t want to wait until it’s all in place. I don’t need to have all aspects of VR in place to add to the gaming experience. I’m going to take whatever pieces are available, add them to the games that are already there, and have some fun.

Q: I would like to know if the controller has haptic feedback/rumble. If yes, is it in each side of the controller?

A: There are so many new devices out there for feedback. I’ve played with a few of them and the potential to pull you closer to the game is very exciting.

The prototype in the video doesn’t have any tactile feedback. It was designed for one thing: to test the absolute positioning system and see how it could work with console game play.

Kickstarter is not Amazon. You don’t click “Add to Cart” and I ship you a shrink-wrapped box. Kickstarter is for starting projects. My goal with Kickstarter is to get the resources to take that prototype and turn it into something even better. Everything I learned building it will go into the final prototype. Anything else that I can get my hands on will go into the final one as well.

I have a huge list of things that I want to add to this thing, and haptic feedback is right there at the top. I’d like to put the whole list into the final controller, but it’s a question of resources. So, given whatever resources I end up with, I’m going to add as many as I can.

Thanks to Don Rider for taking the time to answer the community’s questions!