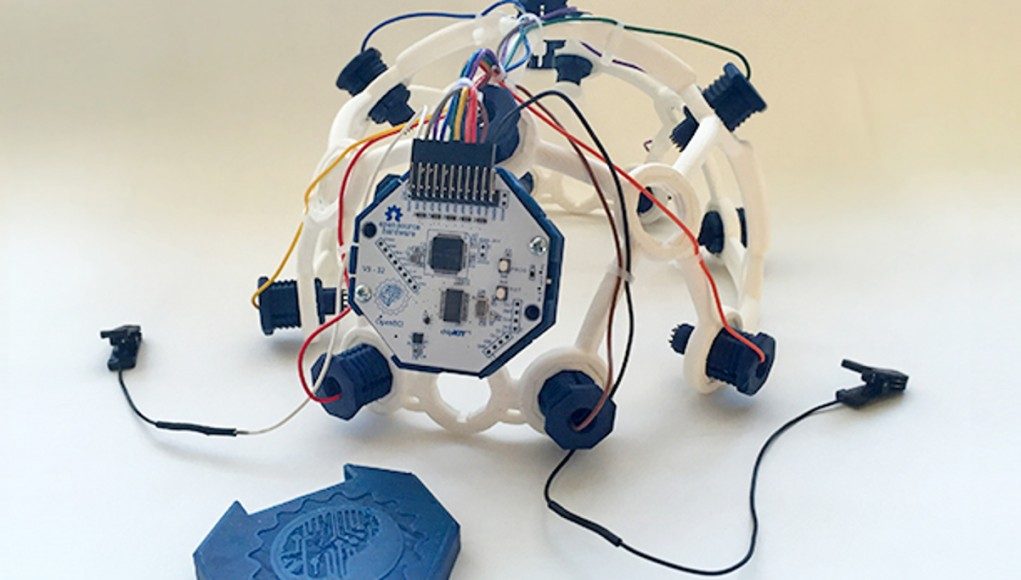

OpenBCI is an open source brain-computer interface that gathers EEG data. It was designed for makers and DIY neuroengineers, and has the potential to democratize neuroscience with a $100 price point. At the moment, neither OpenBCI nor other commercial off-the-shelf neural devices are compatible with any of the virtual reality HMDs, but there are VR headsets like MindMaze that have fully integrated neural inputs. I had the chance to talk with OpenBCI founder Conor Russomanno about the future of VR and neural input on the day before the Experiential Technology and Neurogaming Expo (also known as XTech). Once neural inputs are integrated into VR headsets, VR experiences will be able to detect and react to whatever catches your attention, your level of alertness, and your degree of cognitive load and frustration, as well as differentiate between emotional states.

LISTEN TO THE VOICES OF VR PODCAST

“Neurogaming” is undergoing a bit of a rebranding effort towards “Experiential Technology” to take some of the emphasis off of real-time interaction with brain waves. Right now the latency of EEG data is too high and the signal is not consistent enough to be reliable. One indication of this was that all of the experiential technology applications that I saw at XTech that integrated neural inputs were either medical or educational applications.

Conor says that electromyography (EMG) signals are more reliable and consistent, including micro expressions of the face, jaw clenches, tongue movements, and eye clenches. He expects developers to start using some of these cues to drive drones or to build medical applications for quadriplegics or people who have limited mobility from ALS.

There are a lot of privacy implications once you start to gather some of this EEG data, and Conor is particularly sensitive to this. He says that recent research indicates that EEG signals are unique to each person, and represent a digital signature that could be used to trace anonymously submitted data back to you. He says that companies of the future will need to adopt a strict privacy policy, and not use this data to exploit their users.

At the same time, there were a number of software-as-a-service companies at XTech who were taking EEG data and applying their own algorithms to extrapolate emotions and other higher-level insights. A lot of these algorithms use AI techniques like machine learning to capture the baseline signals of someone’s unique fingerprint and then train the AI to make sense of the data. AI that interprets and extrapolates meaning out of a vast sea of data from dozens of biometric sensors is going to be a big part of the business models for Experiential Technology.

Once this biometric data starts to become available to VR developers, we’ll be able to go into a VR experience and see visualizations of which contextual inputs were affecting our brain activity, and we’ll be able to start making decisions to optimize our lifestyle.

I could also imagine some pretty amazing social applications of these neural inputs. Imagine being able to see a visualization of someone’s physical state as you interact with them. This could have huge implications within the medical context, where mental health counselors could get additional insight into the physiological context that correlates with the content of a counseling session. Or I could see experiments in social interactions between people who trust each other enough to be that intimate with their innermost unconscious reactions. And I could also see how immersive theater actors could have very intimate interactions, or how entertainers could read the mood of the crowd as they’re giving a performance.

Finally, there are a lot of deep and important questions about how to protect users from losing control of how their data is used and how it’s kept private, since it may prove impossible to completely anonymize it. VR enthusiasts will have to wait for better hardware integrations, but the sky is the limit for what’s possible once all of the inputs are integrated and made available to VR developers.

Donate to the Voices of VR Podcast Patreon

Music: Fatality & Summer Trip