Domain-Specific Sensors

He also revealed that Reality Labs has already begun work to this end, and has even created a prototype camera sensor that’s specifically designed for the low-power, high-performance needs of AR glasses.

The sensor uses an array of so-called digital pixel sensors, which capture digital light values at every pixel at three different light levels simultaneously. Each pixel has its own memory to store the data, and can decide which of the three values to report (instead of sending all of the data to another chip to do that work).
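To make the per-pixel decision concrete, here is a minimal sketch in Python. The talk did not describe how a pixel picks among its three values, so the rule below (report the most sensitive reading that is not clipped) is an assumption, as are the names `select_reading`, `to_linear`, and `read_out`, the saturation threshold, and the gain ratios. It illustrates the idea only and is not Meta's design.

```python
# Hypothetical illustration only: the selection rule, threshold, and gains
# are assumptions, not details disclosed about the prototype sensor.
SATURATION = 1000            # assumed digital code at or above which a reading is clipped
GAINS = (16.0, 4.0, 1.0)     # assumed relative sensitivity of the three captures


def select_reading(readings):
    """Return (index, code) of the most sensitive reading that is not clipped.

    `readings` holds one pixel's three digital codes, ordered from the most
    sensitive capture to the least sensitive one.
    """
    for index, code in enumerate(readings):
        if code < SATURATION:
            return index, code
    # Every capture clipped: fall back to the least sensitive one.
    return len(readings) - 1, readings[-1]


def to_linear(index, code):
    """Rescale a reported code onto one common linear brightness scale."""
    return code / GAINS[index]


def read_out(frame):
    """Each pixel reports a single (index, code) pair instead of all three values."""
    return [[select_reading(pixel) for pixel in row] for row in frame]


if __name__ == "__main__":
    dim_pixel = (160, 40, 10)          # nothing clipped: keep the most sensitive capture
    bright_pixel = (1023, 1023, 800)   # two captures clipped: keep the least sensitive
    print(read_out([[dim_pixel, bright_pixel]]))
```

Because only one value per pixel leaves the array, the readout carries roughly a third of the data it would otherwise, which is one plausible reading of where the power saving comes from.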

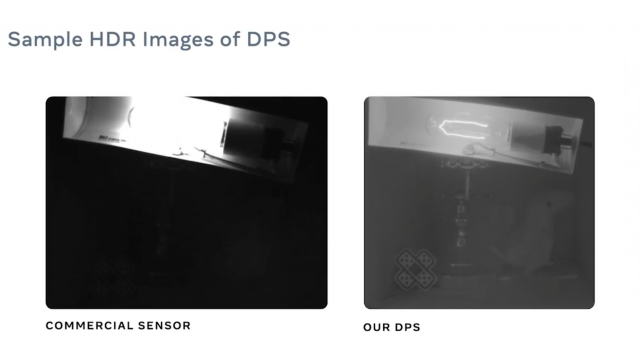

This doesn’t just reduce power, Abrash says, but also drastically increases the sensor’s dynamic range (its ability to capture dim and bright light levels in the same image). He shared a sample image captured with the company’s prototype sensor compared to a typical sensor to demonstrate the wide dynamic range.

In the image on the left, the bright bulb washes out the image, leaving the camera unable to capture much of the scene. The image on the right, on the other hand, not only shows the extreme brightness of the lightbulb’s filament in detail but also captures other parts of the scene.

This wide dynamic range is essential to sensors for future AR glasses, which will need to work just as well in low-light indoor conditions as on sunny days.

Even with the HDR benefits of Meta’s prototype sensor, Abrash says it’s significantly more power efficient, using just 5mW at 30 FPS (just under 25% of what a typical sensor would draw, he claims). And it scales well too; though it would take more power, he says the sensor can capture up to 480 frames per second.
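As a rough back-of-the-envelope check, the quoted figures imply an energy budget per frame and a lower bound on what the "typical" comparison sensor draws. The sketch below uses only the 5mW, 30 FPS, and "just under 25%" figures above; the variable names are illustrative, and treating 25% as an upper bound is an interpretation of the claim rather than a stated number.

```python
# Figures quoted in the talk.
SENSOR_POWER_MW = 5.0        # prototype sensor power draw
FRAME_RATE_FPS = 30.0        # frame rate at which that power was quoted

# "Just under 25%" is treated here as an upper bound on the ratio (assumption).
FRACTION_OF_TYPICAL = 0.25

# mW divided by frames per second gives millijoules per frame; x1000 gives microjoules.
energy_per_frame_uj = SENSOR_POWER_MW / FRAME_RATE_FPS * 1000.0

# If 5 mW is less than 25% of a typical sensor's draw, that sensor draws more than this.
typical_power_floor_mw = SENSOR_POWER_MW / FRACTION_OF_TYPICAL

print(f"about {energy_per_frame_uj:.0f} microjoules per frame")
print(f"typical sensor: more than {typical_power_floor_mw:.0f} mW")
```

No power figure was given for the 480 frames per second mode, so this arithmetic should not be extrapolated to it.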

But Meta wants to go even further, with even more complex compute happening right on the sensor.

“For example, a shallow portion of the deep neural networks—segmentation and classification for XR workloads such as eye-tracking and hand-tracking—can be implemented on-sensor.”

But that can’t happen, Abrash says, before more hardware innovation, like the development of ultra-dense, low-power memory that would be necessary for “true on-sensor ML computing.”

While the company is experimenting with these technologies, Abrash notes that the industry at large is going to need to come together to make it happen at scale. Specifically, he says “the development of MRAM technologies by [chip makers] is a critical element for developing AR glasses.”

“Combined together in an end-to-end system, our proposed distributed architecture, and the associated technology I’ve described, have potential for enormous improvements in power, area, and form-factor,” Abrash surmises. “Improvements that are necessary to become comfortable and functional enough to be a part of daily life for a billion people.”