It was nearly a year ago, back at E3 2015, that Oculus founder Palmer Luckey told us that the company had plans to open up an API for third parties to tap into the Constellation tracking system. He was clearly enthusiastic about the ecosystem of peripherals that would be enabled by such a move.

“[Oculus Touch is] never going to be better than truly optimized VR input for every game. For example, racing games: it’s always going to be a steering wheel. For a sword fighting game, you’re going to have some type of sword controller,” Luckey said. “…I think you’re going to see people making peripherals that are specifically made for particular types of games, like whether they’re steering wheels, flight sticks, or swords, or gun controllers in VR.”

Valve too was bullish about its Lighthouse tracking system; more than a year ago, when the company had just revealed the HTC Vive, Valve head Gabe Newell said that they wanted to give the tracking tech away for all to use.

“So we’re gonna just give [Lighthouse tech] away. What we want is for that to be like USB. It’s not some special secret sauce,” Newell told Engadget. “It’s like everybody in the PC community will benefit if there’s this useful technology out there. So if you want to build it into your mice, or build it into your monitors, or your TVs, anybody can do it.”

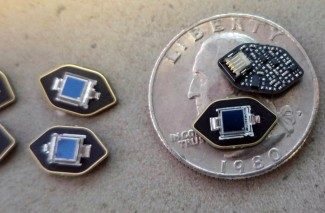

At one point, Valve engineers told us they envisioned one day releasing a ‘puck’—a small standalone tracker possibly about the size of a casino chip—which could be affixed to track any arbitrary object with Lighthouse. In the meantime, eager folks are not content to wait and have instead been taping the HTC Vive controllers to all manner of objects to emulate direct integration of the tracking technology.

Developer Stress Level Zero attached one to a dog (who was virtually represented as an alien) with quite hilarious results. The same studio attached the controller to a huge sword prop for a more realistic way to play Ninja Trainer (now called Zen Blade).

Yet another developer taped a controller to a pair of sandals to see what it would be like to have feet tracked in VR for a soccer game.

Valve’s Chet Faliszek, who has been closely involved with the company’s VR projects, recently tweeted a photo of a Vive controller which had been haphazardly hacked into a gun-shaped peripheral and teased about “lighthouse dev kits.”

While we need to get the lighthouse dev kits out, it is fun to see the craziness/resourcefulness in the meantime. pic.twitter.com/Mv4qhKLH9G

— Chet Faliszek (@chetfaliszek) April 30, 2016

It’s not entirely clear what Faliszek means by the tweet, but given the context it seems he could be talking about a development kit for a standalone Lighthouse tracking module.

Given that it could mean increased developer interest, I’m quite surprised neither company has been vying to be first and best when it comes to opening up their tracking systems to third parties. Surely both Oculus and Valve/HTC have been busy dealing with some not-so-smooth initial launches, but it’s clear that there’s demand from developers and companies to track more than just heads and hands in VR.