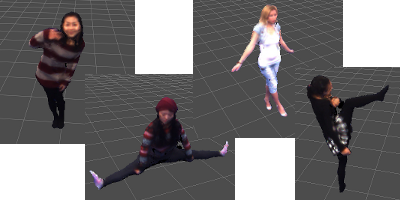

At the most recent Silicon Valley VR meetup, Albert Kim, CEO of DoubleMe, demonstrated his company’s technology for creating real-time 3D models from a synchronized collection of 2D images. Subjects step into a small studio consisting of blue-screen walls and eight inexpensive cameras. Capture computers take the synchronized video feeds and run them through a series of imaging algorithms to create a 3D model in real time. What differentiates DoubleMe from other solutions is that it captures motion, and it does so using cameras instead of motion-capture gear.

The results are pretty impressive, as Albert demonstrated for me.

DoubleMe will soon open a San Francisco studio offering free 3D modeling of you, your kids, or your pets. You can even create a 3D printout of yourself based on the model, presumably to use as an action figure. For more information, see DoubleMe.me.