At GDC last week, Oculus announced it is bringing a VR rendering feature called Asynchronous Timewarp to Windows with version 1.3 of the Oculus PC SDK. The feature (abbreviated ‘ATW’) smooths over the otherwise uncomfortable stuttering that can occur when the PC doesn’t render frames quite fast enough. Valve calls ATW an “ideal safety net,” but suggests a system to avoid relying on it too heavily.

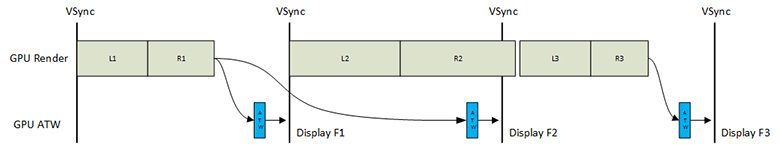

Asynchronous Timewarp is an improved version of Synchronous Timewarp, which Oculus has implemented since the Rift DK2. While Synchronous Timewarp was effective in reducing latency, it was tied to the frame-to-frame render loop.

ATW, as the name suggests, decouples Timewarp from the render loop, which gives it more flexibility in dealing with frames that aren’t finished rendering by the time they need to be sent to the VR headset. The company explains in more detail in a recent blog post:

> On Oculus, ATW is always running and it provides insurance against unpredictable application and multitasking operating system behavior. ATW can smooth over jerky rendering glitches like a suspension system in a car can smooth over the bumps. With ATW, we schedule timewarp at a fixed time relative to the frame, so we deliver a fixed, low orientation latency regardless of application performance.
>
> This consistently low orientation latency allows apps to render efficiently by supporting full parallelism between CPU and GPU. Using the PC resources as efficiently as possible, makes it easier for applications to maintain 90fps. Apps that need more time to render will have higher positional latency compared to more efficient programs, but in all cases orientation latency is kept low.
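
The scheduling idea in that passage can be illustrated with a toy model. The sketch below is not Oculus's implementation: it tracks only head yaw, assumes a steady 90-degrees-per-second head turn, and uses hypothetical names throughout (`head_yaw_at`, `simulate`). At every vsync a stand-in compositor takes the newest finished application frame and computes how far the head has rotated since that frame was rendered, which is the rotation a real timewarp would correct by reprojecting the image on the GPU.

```python
REFRESH_HZ = 90
VSYNC_US = 1_000_000 // REFRESH_HZ  # 11111 microseconds per refresh (integer math)


def head_yaw_at(time_us: int) -> float:
    """Hypothetical head motion: a steady 90 degrees-per-second turn."""
    return 90.0 * time_us / 1_000_000


def simulate(render_costs_us):
    """Return one (frame_index, reused, warp_degrees) tuple per vsync.

    Assumptions: the app samples the head pose when it starts a frame and
    starts each new frame on the first vsync at or after the previous finish.
    """
    frames = []  # (finish_time_us, frame_index, yaw_the_frame_was_rendered_for)
    start = 0
    for index, cost in enumerate(render_costs_us):
        finish = start + cost
        frames.append((finish, index, head_yaw_at(start)))
        start = -(-finish // VSYNC_US) * VSYNC_US  # round up to the next vsync

    total_vsyncs = -(-frames[-1][0] // VSYNC_US)
    shown = []
    previous_index = None
    for k in range(1, total_vsyncs + 1):
        now = k * VSYNC_US
        ready = [f for f in frames if f[0] <= now]
        if not ready:
            continue  # nothing has finished rendering yet
        _, index, render_yaw = ready[-1]
        # The warp runs on every vsync, whether the frame is new or reused.
        warp = head_yaw_at(now) - render_yaw
        shown.append((index, index == previous_index, warp))
        previous_index = index
    return shown


if __name__ == "__main__":
    # The third frame takes 15 ms and misses its vsync.
    for index, reused, warp in simulate([9000, 9000, 15000, 9000]):
        label = "reused" if reused else "new"
        print(f"frame {index} ({label}), warp {warp:.2f} degrees")
```

In this model the slow third frame causes the previous frame to be shown a second time, but with a larger orientation correction, so the view still tracks the head. Positional changes are not handled at all, which mirrors the blog post's point that slower apps see higher positional latency.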

Oculus seems quite proud of the feature and writes that “Getting [ATW] right took a lot of work.”

“The user experiences much smoother virtual reality with ATW. Early measurements of Rift launch titles running without ATW showed apps missing ~5% of their frames. ATW is able to fill in for the majority of these misses, resulting in judder reduction of 20-100x. This functionality comes at no performance cost to the application and requires no code changes,” the blog post continues.
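
To put those figures in perspective, here is a rough back-of-the-envelope calculation. It assumes, as a simplification not stated in the blog post, that "judder reduction of N times" means N times fewer visibly missed frames, and that the ~5% miss rate applies uniformly at a 90 Hz refresh rate.

```python
REFRESH_HZ = 90
MISS_RATE = 0.05  # the "~5%" figure quoted above


def missed_per_minute(miss_rate: float, refresh_hz: int = REFRESH_HZ) -> float:
    """Missed frames per minute at a given miss rate and refresh rate."""
    return miss_rate * refresh_hz * 60


if __name__ == "__main__":
    baseline = missed_per_minute(MISS_RATE)
    print(f"without ATW: about {baseline:.0f} missed frames per minute")
    # Assumption: an N-times judder reduction leaves 1/N of the visible misses.
    for factor in (20, 100):
        print(f"{factor}x reduction: about {baseline / factor:.1f} per minute")
```

Under those assumptions, roughly 270 missed frames per minute would drop to somewhere between about 13.5 and 2.7.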

The company maintains that ATW is “not a silver bullet,” and continues to recommend that developers target a consistent 90 FPS framerate in their VR apps for the best experience.

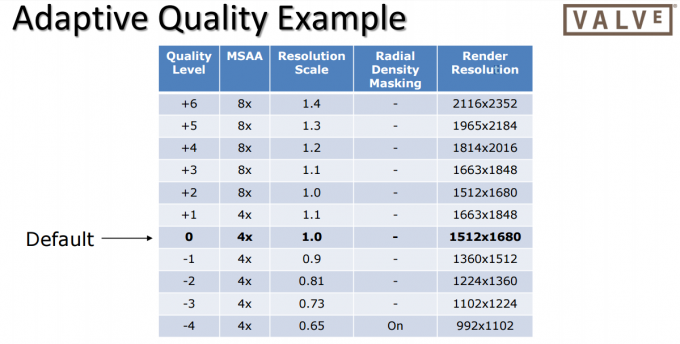

Valve agrees that ATW should only be used as a last resort for the occasional missed frame. Valve Senior Programmer Alex Vlachos called ATW an “ideal safety net” during his Advanced VR Rendering Performance talk at GDC last week, but suggested an ‘Adaptive Quality’ system which would favor reducing rendering quality over relying too heavily on ATW.

Vlachos said the Adaptive Quality system has two goals:

- Reduce the chances of dropping frames and [resorting to timewarp]

- Increase quality when there are idle GPU cycles

Using such a system opens the door to GPUs below the recommended VR specification being able to hit 90 FPS even on applications not expressly designed for that specification, while on the high end allowing VR experiences to look even better on systems that exceed the recommended spec.

He offered an example of settings which can be adjusted up or down automatically based on the measured framerate:

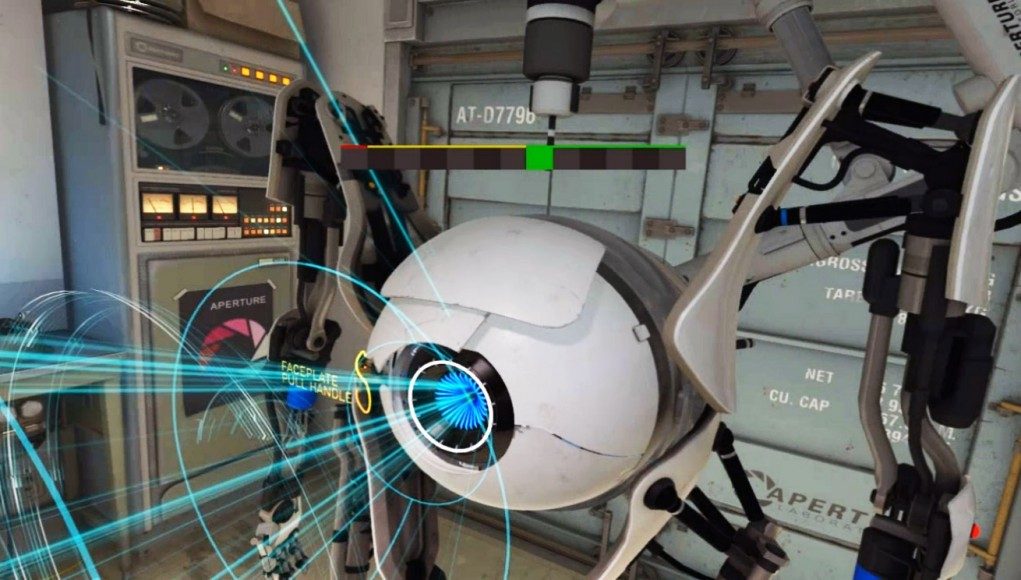

Using this system, Vlachos says Valve was able to get the Aperture Robot Repair demo to hit 90 FPS consistently on the Nvidia GTX 680, a four-year-old GPU, without relying on ATW.
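
The general shape of an adaptive quality controller can be sketched in a few lines. This is a minimal illustration, not Valve's implementation: the quality ladder, the 90% and 70% thresholds, and all names (`AdaptiveQuality`, `QUALITY_LEVELS`) are assumptions made for the example. The controller compares each measured GPU frame time against the 90 FPS budget of about 11.1 ms, steps quality down when the frame is close to missing, and steps it back up when there is clear headroom.

```python
from dataclasses import dataclass

FRAME_BUDGET_MS = 1000.0 / 90  # about 11.1 ms per frame at 90 FPS

# Hypothetical ladder of render-target scale factors, lowest quality first.
QUALITY_LEVELS = [0.65, 0.8, 1.0, 1.2, 1.4]


@dataclass
class AdaptiveQuality:
    level: int = 2          # start at scale 1.0
    lower_at: float = 0.90  # assumed threshold: drop quality above 90% of budget
    raise_at: float = 0.70  # assumed threshold: raise quality below 70% of budget

    def update(self, gpu_frame_ms: float) -> float:
        """Adjust the quality level from one measured frame; return the new scale."""
        load = gpu_frame_ms / FRAME_BUDGET_MS
        if load > self.lower_at and self.level > 0:
            self.level -= 1  # close to dropping a frame: reduce quality
        elif load < self.raise_at and self.level < len(QUALITY_LEVELS) - 1:
            self.level += 1  # idle GPU cycles: increase quality
        return QUALITY_LEVELS[self.level]


if __name__ == "__main__":
    controller = AdaptiveQuality()
    for frame_ms in (10.5, 10.5, 5.0, 9.0):
        scale = controller.update(frame_ms)
        print(f"{frame_ms:.1f} ms -> render scale {scale}")
```

The gap between the two thresholds acts as a dead band, so a frame time that sits between them leaves the quality level alone instead of oscillating every frame.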

Ultimately, these techniques allow for smoother VR performance when frames unexpectedly don’t render fast enough, whether it’s because the user’s view at a given moment is particularly render-intensive, or because background processes running on the user’s computer steal a little too much rendering power at the wrong time.