Tomáš “Frooxius” Mariančík, creator of the stunning VR experience SightLine: The Chair, is back! This time he’s demoing an educational prototype with an intuitive virtual reality user interface that lets you reach into VR and control the environment with your hands.

Reaching Into Virtual Spaces

Not content with developing one of the best virtual reality concepts at last year’s VR Jam event with SightLine, and then blowing my socks off (and everyone else’s) with his follow-up tech demo SightLine: The Chair, developer Tomáš “Frooxius” Mariančík has come up with what looks to be one of the most intuitive and responsive VR user experiences I’ve yet seen.

His new project, which is still a prototype, combines an Oculus Rift DK2 and its positional head tracking with a Rift-mounted Leap Motion controller. The Leap detects your real-life hands and fingers, allowing Tomáš to translate gestures and movements into equivalent actions in the virtual world. And to give your virtual hands something to do in virtual space, he has also conceptualised and built a series of menus and gesture commands that let the user navigate and control the world around them.

The current prototype uses a human skeleton, complete with organs, to show how natural interaction with our hands can be used to manipulate and inspect objects in 3D space. The Oculus Rift DK2 brings its positional tracking to the party, allowing the user to grab and hold the virtual object, then look around it in a natural way. The demo video shows an extraordinary degree of precision and grace, something that was difficult to achieve with earlier versions of the Leap SDK. But with Leap Motion’s skeletal tracking advancements, recently made available to developers in the V2 Beta of the SDK, it seems some incredible things are now possible.

The project is impressive on multiple counts, but it’s the precision of control that blew me away. Tomáš (featured in the video) taps delicately at menu sliders and scrolls through a test text box deftly and with apparent ease. I also particularly liked the clenched-fist gesture, which lets the user drag the world’s position around them (or drag themselves through the world, depending on how you look at it).

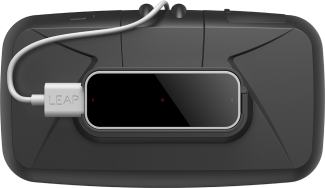

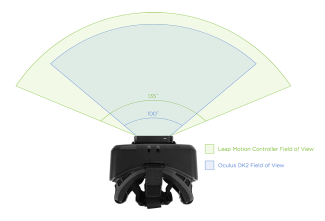

Mount Your Leap

Leap Motion used in conjunction with the DK2’s positional tracking seems to be a potent mix, and the company is doing everything it can to evangelise it. It now offers a special mount that lets you slot your Leap Motion sensor onto the front of your DK2 (cleverly avoiding most of the IR LEDs that cover the headset) and presto, you have a sensor capable of spotting your wavy arms within a horizontal FOV of 135 degrees (so the maker claims). It certainly makes a compelling case as an answer to naturalistic VR input, something that even Oculus hasn’t yet publicly addressed.

VR input, the means by which humans interface with these new digital worlds, lags behind rapidly advancing VR headset technology. Tomáš’ prototype gives us a glimpse of how we could be interacting with our digital worlds very soon indeed. What’s more, this seems to mark a new lease of life for the Leap Motion device, which had appeared to be searching for a fitting application.

We’ll be digging deeper into this project, along with some hands-on impressions and thoughts on the project from Tomáš himself. In the meantime, you can find more on SightLine here and on the Facebook page.