Ahead of an upcoming technical conference, researchers from Meta’s Reality Labs Research group published details on their work toward creating ultra-wide field-of-view VR & MR headsets that use novel optics to maintain a compact goggles-style form-factor.

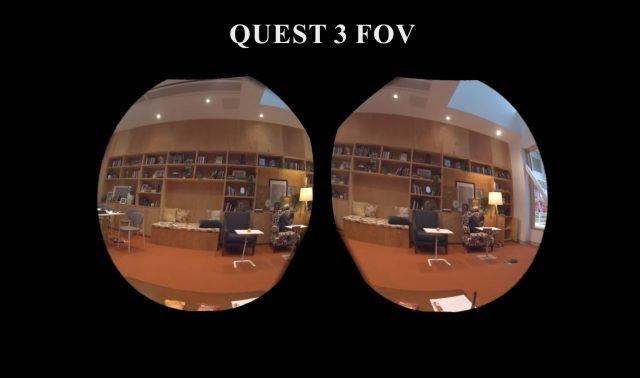

Published in advance of the ACM SIGGRAPH 2025 Emerging Technologies conference, the research article details two headsets, each achieving a horizontal field-of-view of 180 degrees. That's a huge jump over Meta’s existing headsets like Quest 3, which has a horizontal field-of-view of around 100 degrees.

The first headset is a pure VR headset which the researchers say uses “high-curvature reflective polarizers” to achieve the wide field of view in a compact form-factor.

The other is an MR headset, which uses the same underlying optics and head-mount but also incorporates four passthrough cameras to provide an ultra-wide passthrough field-of-view to match the headset’s field-of-view. The cameras total 80MP of resolution at 60 FPS.
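To put those camera figures in perspective, here is a rough back-of-the-envelope sketch of the raw pixel throughput they imply. It assumes the 80MP figure is the combined resolution of all four cameras, and the per-camera number further assumes the resolution is split evenly between them, which the researchers have not confirmed. The function names are purely illustrative.

```python
# Figures quoted in the article.
TOTAL_MEGAPIXELS = 80  # assumed to be the combined total across all cameras
FRAME_RATE_FPS = 60
CAMERA_COUNT = 4


def pixels_per_second(total_megapixels: float, fps: float) -> float:
    """Raw pixel throughput implied by a total resolution and frame rate."""
    return total_megapixels * 1_000_000 * fps


def megapixels_per_camera(total_megapixels: float, camera_count: int) -> float:
    """Per-camera resolution, ASSUMING an even split (not stated in the article)."""
    return total_megapixels / camera_count


throughput = pixels_per_second(TOTAL_MEGAPIXELS, FRAME_RATE_FPS)
per_camera = megapixels_per_camera(TOTAL_MEGAPIXELS, CAMERA_COUNT)

print(f"{throughput / 1e9:.1f} gigapixels per second")
print(f"{per_camera:.0f}MP per camera (if split evenly)")
```

Under those assumptions the passthrough pipeline would need to move roughly 4.8 billion pixels every second before any processing, which hints at why this remains a research prototype.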

The researchers compared the field-of-view of their experimental headsets to that of the current Quest 3. In the case of the MR headset, you can clearly see the advantages of the wider field-of-view: the user can easily see someone who is in a chair right next to them, and also has peripheral awareness of a snack in their lap.

Both experimental headsets appear to use something similar to the outside-in ‘Constellation’ tracking system that Meta used on its first consumer headset, the Oculus Rift CV1. We’ve seen Constellation pop up on a number of Reality Labs Research headsets over the years, likely because it’s easier to use for rapid iteration compared to inside-out tracking systems.

The researchers point out that similarly wide field-of-view headsets already exist in the consumer market (for instance, those from Pimax), but the field-of-view often comes at the cost of significant bulk.

The Reality Labs researchers claim that these experimental headsets have a “form-factor comparable to current consumer devices.”

“Together, our prototype headsets establish a new state-of-the-art in immersive virtual and mixed reality experiences, pointing to the user benefits of wider FOVs for entertainment and telepresence applications,” the researchers claim.

For those hoping these experimental headsets point to a future Quest headset with an ultra-wide field-of-view… it’s worth noting that Meta does lots of R&D and has shown off many research prototypes over the years featuring technologies that have yet to make it to market.

For instance, back in 2018, Meta (at the time still called Facebook) showed a research prototype headset with varifocal displays. Nearly 7 years later, the company still hasn’t shipped a headset with varifocal technology.

As the company itself will tell you, it all comes down to tradeoffs; Meta CTO Andrew ‘Boz’ Bosworth explained as recently as late 2024 why he thinks pursuing a wider field-of-view in consumer VR headsets brings too many downsides in terms of price, weight, battery life, etc. But there’s always the chance that this latest research causes him to change his mind.