It’s been a long-held assumption that the human eye can resolve at most 60 pixels per degree (PPD), a figure commonly called ‘retinal’ resolution. Any more than that, and you’d be wasting pixels. Now, a recent study from the University of Cambridge and Meta Reality Labs, published in Nature, suggests the real threshold is much higher than previously thought.

The News

As the University of Cambridge’s news site explains, the research team measured participants’ ability to detect specific display features across a variety of scenarios: in color and in greyscale, looking straight at images (aka ‘foveal vision’) and through peripheral vision, and from both close up and farther away.

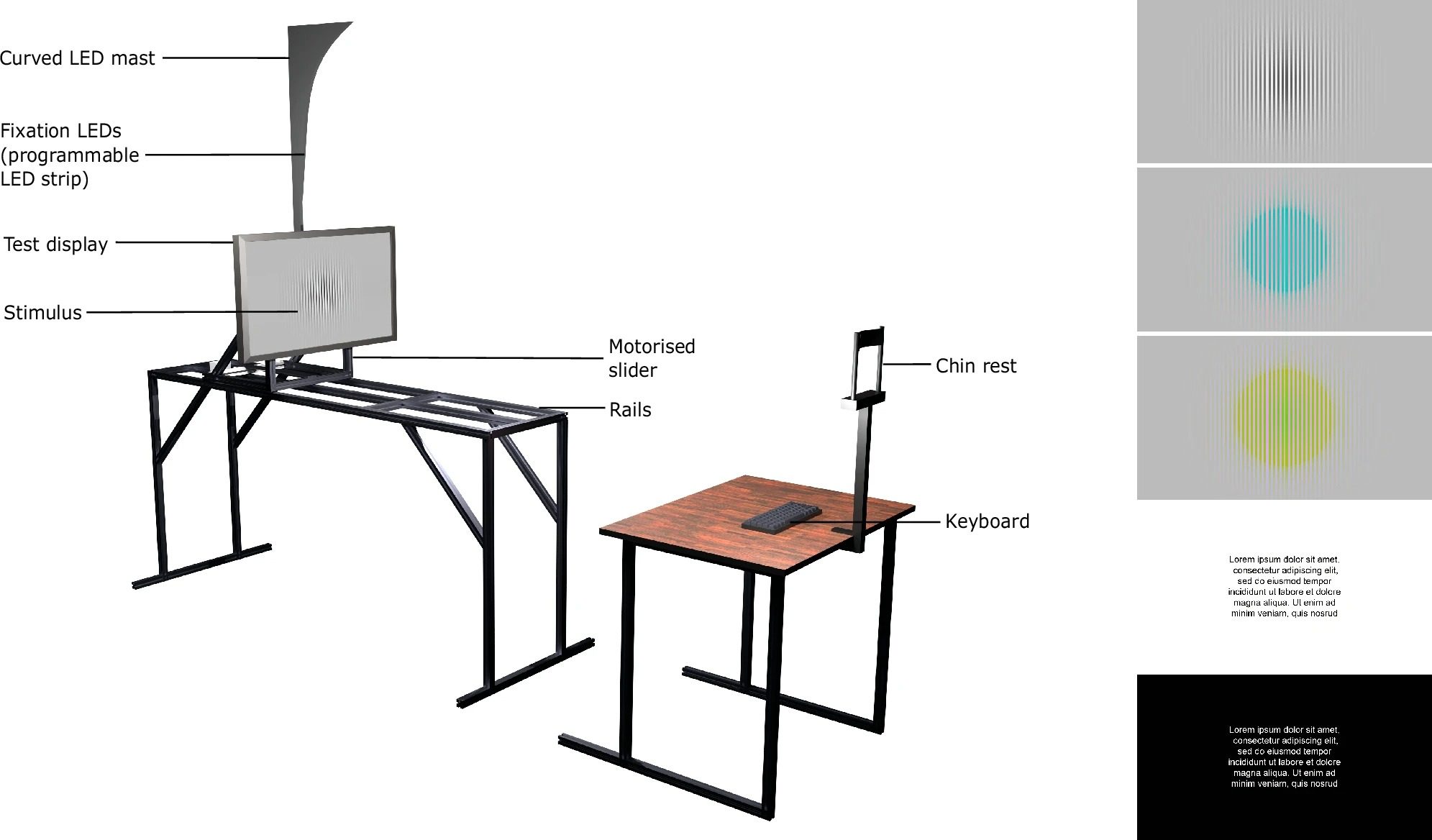

The team used a novel sliding-display device (pictured above) to precisely measure the resolution limits of the human eye, and the results appear to overturn the widely accepted 60 PPD benchmark for ‘retinal resolution’.

Essentially, PPD measures how many display pixels fall within one degree of a viewer’s visual field. It sometimes appears on XR headset spec sheets because it conveys what a given combination of field of view (FOV) and display resolution means for visual sharpness in practice.
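As a rough illustration of how FOV and resolution combine, here is a minimal Python sketch that computes average PPD by dividing horizontal pixel count by horizontal FOV. It assumes pixels are spread evenly across the field of view, which real headset lenses don’t do; that’s why spec sites distinguish peak PPD (at the lens center) from average PPD. The function name `average_ppd` is hypothetical, chosen for illustration.

```python
def average_ppd(horizontal_pixels: int, horizontal_fov_deg: float) -> float:
    """Approximate average pixels per degree across a display's horizontal FOV.

    Assumes pixels are distributed uniformly over the field of view. Real
    headset optics concentrate pixels unevenly, so peak PPD at the lens
    center is typically higher than this average.
    """
    if horizontal_fov_deg <= 0:
        raise ValueError("field of view must be positive")
    return horizontal_pixels / horizontal_fov_deg


# A hypothetical panel 3,000 pixels wide spread over a 100-degree FOV.
print(average_ppd(3000, 100))  # 30.0
```

Under the same uniform assumption, hitting 60 PPD across that 100-degree FOV would take 6,000 horizontal pixels per eye.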

According to the researchers, foveal vision can resolve well beyond 60 PPD for some patterns: up to 94 PPD for black-and-white patterns and 89 PPD for red-green, though only 53 PPD for yellow-violet. A few participants were notable outliers, able to perceive as much as 120 PPD, double the previously assumed retinal resolution limit.
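To get a sense of what these thresholds mean for hardware, the following back-of-envelope Python sketch computes how many pixels a display would need to hit a target PPD uniformly across a given FOV. The 100° × 100° field of view is a hypothetical round number, not a figure from the study, and the uniform-PPD assumption ignores lens distortion. The helper names are illustrative only.

```python
import math


def pixels_per_axis(target_ppd: float, fov_deg: float) -> int:
    """Pixels needed along one axis to reach target_ppd uniformly over fov_deg."""
    return math.ceil(target_ppd * fov_deg)


def pixels_per_eye(target_ppd: float, h_fov_deg: float, v_fov_deg: float) -> int:
    """Total pixels per eye for a uniform target PPD over a rectangular FOV."""
    return pixels_per_axis(target_ppd, h_fov_deg) * pixels_per_axis(target_ppd, v_fov_deg)


# Old benchmark, the study's black-and-white limit, and the outlier value.
for ppd in (60, 94, 120):
    print(ppd, pixels_per_axis(ppd, 100), pixels_per_eye(ppd, 100, 100))
```

Under these assumptions, 94 PPD over a 100° × 100° field works out to 9,400 pixels per axis, or roughly 88 megapixels per eye. Because pixel count grows with the square of PPD, doubling PPD from 60 to 120 quadruples the total.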

The study also has implications for foveated rendering, which uses eye tracking to reduce rendering quality in an XR headset user’s peripheral vision. Foveated rendering has traditionally been optimized around black-and-white (achromatic) vision; the study suggests it could cut bandwidth and computation further by lowering resolution even more for specific color channels.
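The per-channel idea can be illustrated with rough arithmetic. The Python sketch below is a hypothetical back-of-envelope calculation, not the paper’s method. It assumes an image is stored as three opponent channels (achromatic, red-green, yellow-violet), that the number of samples a channel needs over a fixed area scales with the square of its PPD, and that cost is proportional to sample count. It uses the foveal limits reported above; the peripheral savings the study points to are not modeled here.

```python
# Foveal limits reported in the article, in pixels per degree.
FOVEAL_LIMITS_PPD = {
    "achromatic": 94.0,
    "red-green": 89.0,
    "yellow-violet": 53.0,
}


def relative_sample_cost(limits: dict[str, float]) -> float:
    """Samples needed when each channel is stored at its own PPD limit,
    relative to storing every channel at the highest limit.

    Samples over a fixed area scale with PPD squared (PPD applies per axis).
    """
    peak = max(limits.values())
    per_channel = [(ppd / peak) ** 2 for ppd in limits.values()]
    return sum(per_channel) / len(per_channel)


print(f"{relative_sample_cost(FOVEAL_LIMITS_PPD):.3f}")
```

With these numbers, storing each channel at its own limit needs about 74% of the samples required to store all three at 94 PPD. Most of the saving comes from the yellow-violet channel, which needs only about a third of the achromatic sample count.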

So, for XR hardware engineers, the team’s findings point to a new target for true retinal resolution. For a more in-depth look, you can read the full paper in Nature.

My Take

You’ll be hard-pressed to find accurate PPD figures for every headset, since some manufacturers prefer to tout pixels per inch (PPI) while others focus on raw resolution numbers. Even so, few come close to 60 PPD, let alone the revised retinal resolution suggested above.

According to data from XR spec comparison site VRCompare, consumer headsets like Quest 3, Pico 4, and Bigscreen Beyond 2 tend to have a peak PPD, measured at the most pixel-dense point at dead center, of around 22–25.

Prosumer and enterprise headsets fare slightly better, but only just. Based on estimates from available data, Apple Vision Pro and Samsung Galaxy XR boast a peak PPD of between 32 and 36.

Headsets like Shiftall MeganeX Superlight “8K” and Pimax Dream Air have around 35-40 peak PPD. On the top end of the range is Varjo, which claims its XR-4 ($8,000) enterprise headset can achieve 51 peak PPD through an aspheric lens.

Then there are prototypes like Meta’s ‘Butterscotch’ varifocal headset, which the company showed off in 2023 and which is said to reach 56 PPD (it isn’t confirmed whether that figure is average or peak).
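To put the headset figures in perspective, here is a quick back-of-envelope Python sketch. It takes the peak PPD numbers cited in this article, using the upper end of each range and treating Butterscotch’s unconfirmed 56 PPD as a peak value. It then estimates how many times more pixels each would need, over the same field of view, to reach 60 or 94 PPD. It assumes PPD scales linearly with pixel count along each axis, so total pixel count scales with the square. Real optics, lens distortion, and rendering costs complicate this picture.

```python
RETINAL_TARGETS_PPD = (60.0, 94.0)

# Peak PPD figures cited in the article; ranges reduced to their upper end.
PEAK_PPD = {
    "Quest 3 / Pico 4 / Bigscreen Beyond 2": 25.0,
    "Apple Vision Pro / Samsung Galaxy XR": 36.0,
    "MeganeX Superlight 8K / Pimax Dream Air": 40.0,
    "Varjo XR-4": 51.0,
    "Meta Butterscotch (prototype, unconfirmed peak)": 56.0,
}


def pixel_multiplier(current_ppd: float, target_ppd: float) -> float:
    """Factor by which pixel count must grow, over the same FOV, to raise
    PPD from current_ppd to target_ppd. PPD is per axis; pixels cover an area."""
    return (target_ppd / current_ppd) ** 2


for name, ppd in PEAK_PPD.items():
    factors = ", ".join(
        f"{pixel_multiplier(ppd, t):.1f}x for {t:.0f} PPD" for t in RETINAL_TARGETS_PPD
    )
    print(f"{name}: {ppd:.0f} PPD -> {factors}")
```

Even the Varjo XR-4’s claimed 51 peak PPD would need roughly 3.4 times as many pixels over the same field of view to reach 94 PPD under these assumptions.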

Still, reaching ‘perfect’ visuals involves a lot more than PPD, peak or otherwise. Optical artifacts, refresh rate, subpixel layout, binocular overlap, and eye box size can all sour even the best displays. One thing is certain, though: there’s still plenty of room to grow on the spec sheet before any manufacturer can confidently call its displays retinal.