Amid other VR-related announcements from Google at today’s I/O 2015 conference comes ‘Jump’, a VR camera that will follow Cardboard’s path with an open design. Google is also revealing the ‘Assembler’, which the company says can combine multiple video feeds into a “seamless” 3D scene. YouTube will support VR playback of Jump videos starting this summer.

‘Jump’ is what Google is calling its foray into VR video. The company has created a VR camera design with 16 viewpoints; it will release the design to the public and encourage third parties to build ‘Jump’ cameras that capture 3D 360-degree footage for virtual reality playback. This mirrors the approach Google took with its Cardboard VR viewer: rather than selling the device itself, the company released the plans, allowing third parties to manufacture and sell it.

Google, like others, says it has experimented extensively to determine the ideal camera placement for recording VR video. The 16-camera rig forms a fairly large ring, with no camera appearing to cover the area directly above or below. Any top or bottom gaps may be filled in computationally, since directly above the camera there will often be nothing but sky or ceiling, while directly below will usually be the ground or floor. We’ve seen this approach used elsewhere, especially to remove a tripod holding the rig from below.
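To make the ring geometry concrete, here is a minimal sketch that assumes the 16 cameras are evenly spaced around a level ring and all face outward. The radius (0.14 m) and the 120-degree vertical field of view are hypothetical values chosen for illustration, not published Jump specifications; the point is simply that even spacing puts the cameras 22.5 degrees apart, and that level, outward-facing cameras leave a cap directly above and below unless their vertical field of view reaches 180 degrees.

```python
import math

def ring_layout(num_cameras: int, radius_m: float):
    """Return (yaw_deg, x, y) for cameras evenly spaced on a level ring.

    Assumption: each camera sits on the ring and points outward along its yaw.
    """
    step = 360.0 / num_cameras  # angular spacing between neighbouring cameras
    layout = []
    for i in range(num_cameras):
        yaw = i * step
        rad = math.radians(yaw)
        layout.append((yaw, radius_m * math.cos(rad), radius_m * math.sin(rad)))
    return layout

def uncovered_polar_cap_deg(vertical_fov_deg: float) -> float:
    """Angle from straight up (or straight down) that no level camera sees.

    A level camera covers elevations from -fov/2 to +fov/2, so the gap
    toward each pole is 90 - fov/2 degrees (never negative).
    """
    return max(0.0, 90.0 - vertical_fov_deg / 2.0)

if __name__ == "__main__":
    cams = ring_layout(16, radius_m=0.14)  # hypothetical radius
    print(cams[1][0])  # 22.5
    print(uncovered_polar_cap_deg(120.0))  # 30.0 (hypothetical 120-degree vertical FOV)
```

Under these assumptions, a 30-degree cap above and below the rig would have to be filled in computationally or left to sky, ceiling, and floor.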

One problem with existing VR cameras is ‘stitching’ errors, which create visible seams where the various video streams are merged into a single 360-degree view. Google says it has a solution.

The ‘Assembler’ is Google’s stitching service, which the company says uses computer vision and “3D alignment” to create a scene with no stitching seams (a claim we’ll have to see to believe). According to Google, this is achieved by analyzing the scene in 3D and adapting the stitching to match. The system outputs a scene that’s “depth corrected stereo in all directions,” and the company says the high-resolution output is “the equivalent of five 4k TVs playing at once.”
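Google’s comparison is a pixel count rather than a format specification. As a rough worked example, assuming each “4k TV” is a consumer UHD panel (3840 × 2160) and that the comparison refers to pixels per frame (both assumptions, since Google did not say), the total works out to roughly 41.5 megapixels:

```python
# Assumption: "4K TV" means consumer UHD, 3840 x 2160 pixels.
# DCI 4K (4096 x 2160) would give a somewhat larger total.
UHD_4K = (3840, 2160)

def pixels(width: int, height: int) -> int:
    """Pixel count of a single frame at the given resolution."""
    return width * height

def equivalent_pixels(num_screens: int, resolution: tuple = UHD_4K) -> int:
    """Total pixels shown at once by num_screens displays of one resolution."""
    return num_screens * pixels(*resolution)

if __name__ == "__main__":
    total = equivalent_pixels(5)
    print(total)  # 41472000
    print(f"{total / 1e6:.1f} megapixels")  # 41.5 megapixels
```

This says nothing about frame rate or how those pixels are split between the two stereo eyes; it only translates the marketing comparison into a single number.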

The Assembler service will be made available to “creators” this summer, though it isn’t clear whether the company plans to charge for it, which is a possibility given the computing power the process requires. It also isn’t clear whether the Assembler will stitch only footage recorded with cameras that follow the Jump design, or whether it is a universal stitching method for any multi-viewpoint VR camera. The former may be the case, as the company notes that “The size of the [Jump] rig and the arrangement of the cameras are optimized to work with the Jump assembler.”

In addition to the Assembler, Google is bringing Jump VR video to YouTube. The company recently added 360-degree video support to the platform, and says that this summer YouTube will let users “experience immersive video from your smartphone,” presumably through Google’s Cardboard VR viewer. What that means for other platforms, such as the Oculus Rift, Gear VR, and HTC Vive, is still unclear.

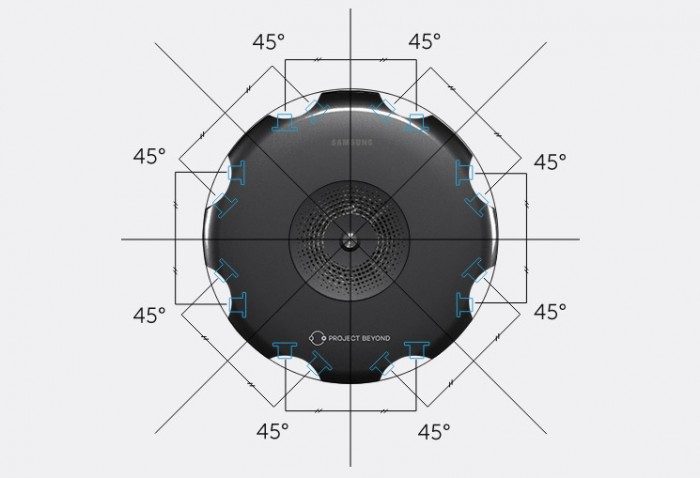

Samsung is also working on a 3D VR camera, which it calls Project Beyond. It uses a different lens layout (including a camera that covers the top of the scene), and we’re sure both companies will battle over whose layout is best.

Creators interested in working with Google’s Jump VR camera can hop in line using this form.