Google has released to researchers and developers its own mobile device-based hand tracking method using machine learning, something Google researchers call a “new approach to hand perception.”

First unveiled at CVPR 2019 back in June, Google’s on-device, real-time hand tracking method is now available for developers to explore—implemented in MediaPipe, an open source cross-platform framework for developers looking to build processing pipelines to handle perceptual data, like video and audio.

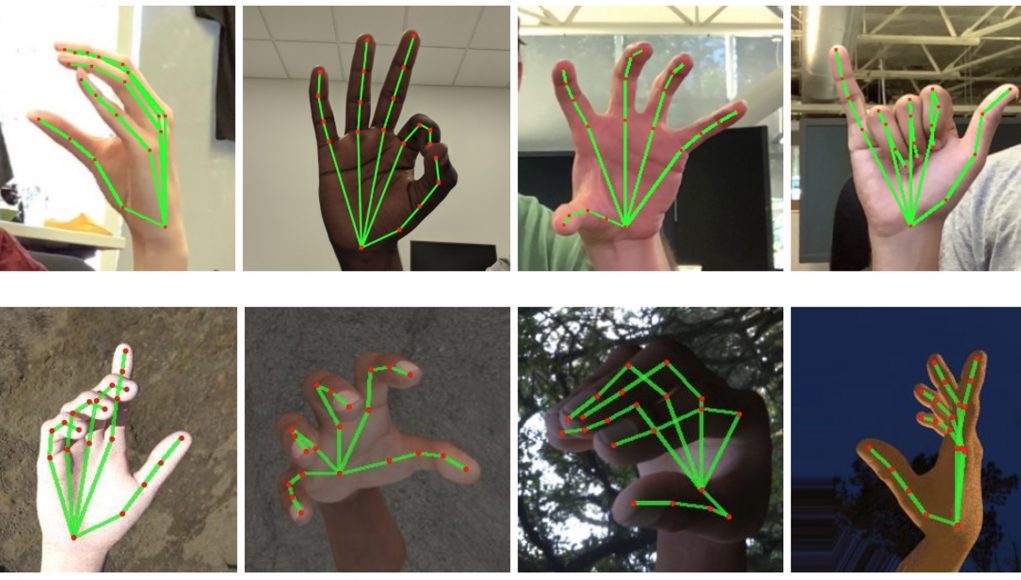

The approach is said to provide high-fidelity hand and finger tracking via machine learning, which can infer 21 3D ‘keypoints’ of a hand from just a single frame.

“Whereas current state-of-the-art approaches rely primarily on powerful desktop environments for inference, our method achieves real-time performance on a mobile phone, and even scales to multiple hands,” the researchers say in a blog post.

Google Research hopes its hand-tracking methods will spark in the community “creative use cases, stimulating new applications and new research avenues.”

The researchers explain that there are three primary systems at play in their hand tracking method: a palm detector model (called BlazePalm), a ‘hand landmark’ model that returns high-fidelity 3D hand keypoints, and a gesture recognizer that classifies keypoint configurations into a discrete set of gestures.

Here are a few salient bits, boiled down from the full blog post:

- The researchers claim the BlazePalm technique achieves an average precision of 95.7% in palm detection.

- The model learns a consistent internal hand pose representation and is robust even to partially visible hands and self-occlusions.

- The existing pipeline supports counting gestures from multiple cultures, e.g. American, European, and Chinese, and various hand signs including “Thumb up”, closed fist, “OK”, “Rock”, and “Spiderman”.

- Google is open sourcing its hand tracking and gesture recognition pipeline in the MediaPipe framework, accompanied by the relevant end-to-end usage scenario and source code.
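To make the last stage of that pipeline a bit more concrete, here is a minimal Python sketch of how a gesture recognizer might turn 21 3D keypoints into one of the named hand signs. It is an illustration only, not Google's implementation and not the MediaPipe API: the keypoint ordering (wrist first, then four joints per finger), the 60-degree bend threshold, the function names, and the finger-to-gesture table are all assumptions made for this example.

```python
import math

# Assumed keypoint layout (not stated in the article): index 0 is the wrist,
# followed by four joints per finger, ordered from base to tip.
FINGERS = {
    "thumb": (1, 2, 3, 4),
    "index": (5, 6, 7, 8),
    "middle": (9, 10, 11, 12),
    "ring": (13, 14, 15, 16),
    "pinky": (17, 18, 19, 20),
}

# Hypothetical mapping from the set of extended fingers to a gesture label.
GESTURES = {
    frozenset(): "closed fist",
    frozenset({"thumb"}): "Thumb up",
    frozenset({"index", "pinky"}): "Rock",
    frozenset({"thumb", "index", "pinky"}): "Spiderman",
}


def bend_degrees(a, b, c):
    """Angle in degrees between bone a->b and bone b->c (0 means straight)."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - b[i] for i in range(3)]
    norm = math.dist(a, b) * math.dist(b, c)
    if norm == 0:
        return 0.0
    cos = sum(x * y for x, y in zip(u, v)) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def extended_fingers(keypoints, max_bend=60.0):
    """Return the names of fingers whose total joint bend is below max_bend."""
    if len(keypoints) != 21:
        raise ValueError("expected 21 (x, y, z) keypoints")
    extended = set()
    for name, (j0, j1, j2, j3) in FINGERS.items():
        total = (bend_degrees(keypoints[j0], keypoints[j1], keypoints[j2])
                 + bend_degrees(keypoints[j1], keypoints[j2], keypoints[j3]))
        if total < max_bend:
            extended.add(name)
    return extended


def classify_gesture(keypoints):
    """Map a single frame's keypoints to a gesture label, or 'unknown'."""
    return GESTURES.get(frozenset(extended_fingers(keypoints)), "unknown")
```

In a real pipeline the keypoints would come from the hand landmark model for each frame; a lookup table like this only covers static poses, which is why dynamic gestures would need something more than a per-frame classifier.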

In the future, the researchers say, Google Research plans on continuing its hand tracking work with more robust and stable tracking, and also hopes to increase the number of gestures it can reliably detect. Moreover, they hope to support dynamic gestures, which could be a boon for machine learning-based sign language translation and fluid hand gesture controls.

Not only that, but having more reliable on-device hand tracking is a necessity for AR headsets moving forward; as long as headsets rely on outward-facing cameras to visualize the world, understanding that world will continue to be a problem for machine learning to address.