While Microsoft’s ‘Mixed Reality’ VR headsets are due to launch with some impressively low minimum hardware requirements that will be met by integrated graphics, the big players presently in the market have a much higher ‘VR Ready’ bar which involves a beefy GPU for high-end gaming applications. Intel says they expect their integrated CPU graphics will eventually achieve the performance required to meet that high-end bar, though even their new ultra high-end Core i9 isn’t there yet.

‘VR Ready’ is a colloquial term that refers primarily to the recommended hardware specifications for VR, supplied by both Oculus and HTC. While their recommendations cover a range of facets, arguably the most important part is the need for a powerful GPU, a big (and expensive) graphics processing component.

Both companies agree on the same set of GPUs that they consider good enough to meet their recommended specifications: an AMD Radeon R9 290, an Nvidia GTX 970, or higher. These are mid-range GPUs that can handle the high-powered rendering tasks required of VR headsets.

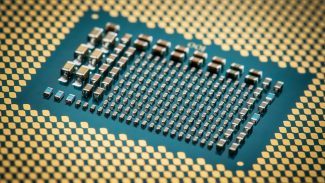

In the early days of consumer computing, computers only had CPUs (the main logic processor of the computer), but as 3D graphics came to the fore, the GPU became a more common addition to the computer’s hardware, acting as a specialized processor for the calculations needed to render 3D graphics.

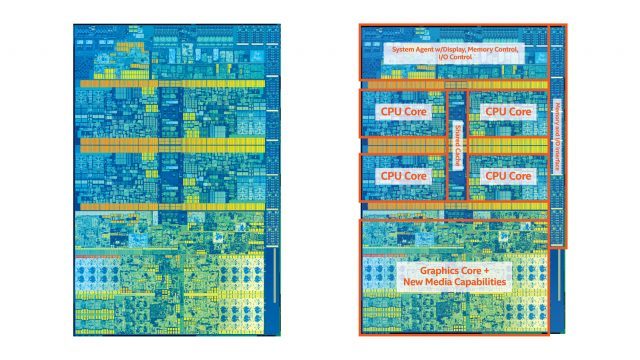

For people not into gaming or 3D workflows, however, a separate GPU isn’t strictly necessary, as CPUs can do enough graphics processing to get by. If you hear anyone talking about ‘integrated’ graphics, this is what they mean: using the CPU’s own built-in graphics processing capabilities rather than a separate (AKA ‘discrete’) GPU.

In the last 15 years, however, the GPU has become an increasingly important part of the modern computer, driven by growing demand for graphics processing from gaming and other heavy 3D workloads that a GPU can accelerate.

That’s lit a fire under CPU-maker Intel, which has, in the last 10 years or so, decided that their integrated CPU graphics need to step up their game in order to keep up with the world’s growing demand for graphics processing power.

So the company introduced ‘Intel HD Graphics’, which would add more graphics processing performance to the company’s CPUs. Since the introduction of HD Graphics, Intel’s integrated CPU graphics have gone (as far as gaming is concerned) from pretty much a joke to being powerful enough for modern low-end gaming. In fact, about 16% of Steam users at present use Intel’s integrated graphics. For consumers who only want to do some light gaming and other light graphical work, that’s a big plus because it means they can eschew the cost and bulk of a dedicated GPU.

And while Intel has actually made pretty impressive strides in their integrated CPU graphics, the current level of performance—even in the new Intel Core i9, an ultra high-end CPU priced at $2,000—is still far from ‘VR Ready’.

But Intel plans to continue growing the graphics processing capabilities of their CPUs, and expects to one day meet the same VR Ready spec that Oculus, HTC, and others recommend for high-end VR gaming.

Frank Soqui, Intel’s General Manager of Virtual Reality and Gaming, confirmed as much speaking with Road to VR this week.

“Integrated graphics will support high-end VR… and not just mainstream [like the Windows Mixed Reality spec]. Our goal is to continue to push performance on CPU and graphics [to achieve] the same high-end content you’d see on Oculus and HTC.”

It’s a bold claim, if for no other reason than how little chip area a CPU can devote to graphics compared to a dedicated GPU. But it reinforces Intel’s commitment to not just keep pace with dedicated GPUs as they advance, but to close the graphics performance gap between integrated graphics and dedicated GPUs.

Soqui didn’t offer any sort of timeline for when this might happen, and the way he talked about it made me feel like it wouldn’t be in the near future. But if Intel succeeds in this venture it would surely be a major boon for the VR industry because the majority of modern computers in the world lack dedicated GPUs and instead rely on integrated graphics. Integrated graphics capable of high-end VR would mean a much larger addressable market for VR hardware and a cheaper initial buy-in too.