Lytro, the formerly consumer-facing light field camera company that recently pivoted to high-end production tools, has announced light field rendering software for VR that aims to free developers from the current limitations of real-time rendering. The company calls it ‘Volume Tracer’.

Light field cameras are often hailed as the next necessary technology for bridging the gap between real-time rendered experiences and 360 video, two VR content types that for now act as bookends on the spectrum of immersive to less-than-immersive virtual media. Professional-level light field cameras, like Lytro’s Immerge prototype, aren’t yet in common use, but light fields don’t have to be captured with large, expensive physical cameras.

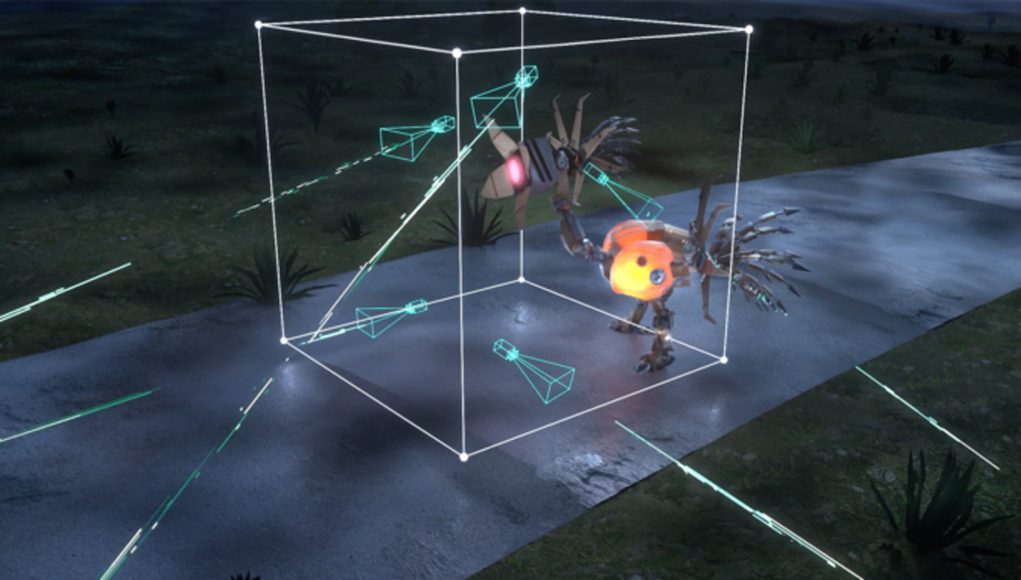

The company’s newest software-only solution, Volume Tracer, places multiple virtual cameras within a view volume of an existing 3D scene that might otherwise be rendered in real time. Developers who create real-time rendered VR experiences constantly fight to hit the 90 fps required for comfortable play (a budget of roughly 11 ms per frame), and they have to do so within a tight envelope: both Oculus and HTC agree on a recommended GPU spec of NVIDIA GTX 1060 or AMD Radeon RX 480. That makes the appeal of light fields, which save on graphics power at playback, pretty apparent.

Lytro breaks it down on its website, saying “each individual pixel in these 2D renders provide sample information for tracing the light rays in the scene, enabling a complete Light Field volume for high fidelity, immersive playback.”
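To make the idea of virtual cameras sampling light rays a little more concrete, here is a minimal Python sketch. It is not Lytro's implementation, which hasn't been published; it simply assumes a box-shaped view volume, a regular grid of pinhole cameras looking down the negative Z axis, and hypothetical helper names (`camera_positions`, `pixel_ray`) invented for illustration.

```python
import itertools
import math


def camera_positions(center, size, counts):
    """Evenly spaced camera positions inside an axis-aligned box (assumed view volume)."""
    axes = []
    for c, s, n in zip(center, size, counts):
        if n == 1:
            axes.append([c])
        else:
            step = s / (n - 1)
            axes.append([c - s / 2 + i * step for i in range(n)])
    # Cartesian product of the per-axis coordinates gives the camera grid.
    return list(itertools.product(*axes))


def pixel_ray(origin, px, py, width, height, fov_deg=90.0):
    """Ray (origin, unit direction) through the center of pixel (px, py).

    Assumes a simple pinhole camera looking down -Z; fov_deg is the vertical field of view.
    """
    half = math.tan(math.radians(fov_deg) / 2)
    aspect = width / height
    x = (2 * (px + 0.5) / width - 1) * half * aspect
    y = (1 - 2 * (py + 0.5) / height) * half
    length = math.sqrt(x * x + y * y + 1)
    return origin, (x / length, y / length, -1 / length)


# A seated-scale volume: 1 m wide, 0.5 m tall, 1 m deep, centered at head height.
cams = camera_positions(center=(0.0, 1.2, 0.0), size=(1.0, 0.5, 1.0), counts=(3, 2, 3))
origin, direction = pixel_ray(cams[0], px=0, py=0, width=4, height=4)
```

Each rendered pixel from each camera then corresponds to one ray sample of this kind. How Lytro actually chooses camera placement, and how it reconstructs views between samples at playback, is not described in the announcement.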

According to Lytro, content created with Volume Tracer provides view-dependent illumination, including specular highlights, reflections, and refractions, and is scalable to any volume of space, from seated to room-scale sizes. It also presents a compelling case for developers looking to eke out as much visual detail as possible, since it hooks into industry-standard 3D modeling and rendering tools like Maya, 3DS Max, Nuke, Houdini, V-Ray, Arnold, Maxwell, and Renderman.

Real-time playback with positional tracking is also possible on Oculus Rift and HTC Vive at up to 90 fps.

One Morning, an animated short directed by former Pixar animator Rodrigo Blaas that tells the story of a brief encounter with a robot bird, was built on the Nimble Collective, rendered in Maxwell, and then brought to life in VR with Lytro Volume Tracer.

“What Lytro is doing with its tech is bringing something you haven’t seen before; it hasn’t been possible. Once you put the headset on and experience it, you don’t want to go back. It’s a new reality,” said Blaas.

Volume Tracer doesn’t seem to be available for download yet, but keep an eye on Lytro’s site and sign up for the company’s newsletter for more info.