Lytro have today revealed plans which take the company’s expertise in ‘still’ light-field photography into the realm of VR film. The company have developed a scalable end-to-end solution for shooting, processing, editing and rendering next-generation 360 footage that allows a viewer to move around the captured scene in real time.

Lytro’s technology, it could be argued, has been waiting for the rest of the world to catch up for nigh on a decade.

The company was founded in 2006 by then-CEO Ren Ng and launched its first digital camera in 2012. The device allowed the user to refocus captured images after they’d been shot; this was a digital camera which harnessed the power of light-field technology. Last year, the company launched its Illum series of cameras, aimed at the professional photography market.

Thanks to a healthy $50M investment, Lytro have now developed a full end-to-end suite of systems, services and hardware, fuelled by VR’s recent revival. Immerge is the name of the solution and it promises professional-grade, high resolution, high frame rate stereoscopic 3D 360 video capture with no stitching required.

As it turns out, light-fields are perfect for virtual reality cinema. So it seems that, after a decade extolling the virtues of the technology, the rest of the world is finally catching up with Lytro’s vision.

Introduction to Light-fields

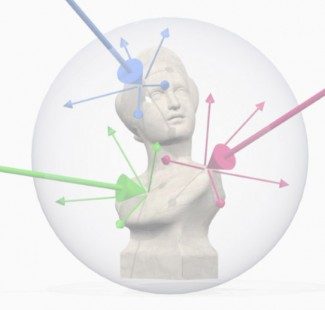

Light-field photography differs from traditional photography in that it captures much more information about the light passing through its volume (i.e. the lens or sensor). Whereas a standard digital camera will capture light as it hits the sensor, statically and entirely in two dimensions, a light-field camera also captures data about which direction the light came from and from what distance.

The practical upshot of this is that, as a light-field camera captures information on all light passing into its volume (the size of the camera sensor itself), once captured you can refocus to any depth within that scene (within certain limits). Make the camera’s volume large enough, and you have enough information about that scene to allow for positional tracking in the view; that is, you can manipulate your view within the captured scene left or right, up or down, allowing you to ‘peek’ behind objects in the scene.
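For those who find code easier to follow than prose, here is a minimal sketch of the refocusing idea using the textbook ‘shift-and-add’ approach. It is not Lytro’s actual processing, and every name in it (`make_light_field`, `refocus`, the toy one-dimensional scene) is a hypothetical stand-in. A single bright point is recorded from several viewpoints; shifting each view in proportion to its viewpoint and averaging brings the point into sharp focus only when the shift matches its depth.

```python
def make_light_field(num_views, width, x0, disparity):
    """Toy 1D light field (hypothetical): one bright point seen from several viewpoints.

    In view u the point lands at pixel x0 + disparity * u, so 'disparity'
    stands in for the point's depth.
    """
    views = []
    for u in range(num_views):
        row = [0.0] * width
        row[x0 + disparity * u] = 1.0
        views.append(row)
    return views


def refocus(views, shift):
    """Shift-and-add refocus: realign each view by shift * u, then average."""
    width = len(views[0])
    out = [0.0] * width
    for u, row in enumerate(views):
        for x in range(width):
            src = x + shift * u
            if 0 <= src < width:
                out[x] += row[src]
    return [value / len(views) for value in out]


if __name__ == "__main__":
    views = make_light_field(num_views=5, width=32, x0=10, disparity=2)
    in_focus = refocus(views, shift=2)      # shift matches the point's disparity
    out_of_focus = refocus(views, shift=0)  # no realignment, point is smeared
    print(max(in_focus), max(out_of_focus))
```

When the shift matches the disparity, all five views pile up on the same pixel; with any other shift the energy is spread across several pixels, which is what an out-of-focus blur looks like in this toy model.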

If you’re not quite with me, I don’t blame you. This technology is and will remain essentially ‘magic’ to most who have it explained to them (me included). I suspect however (as with all virtual reality things) its potential will be immediately apparent to everyone who sees the results.

Cinema-Grade 360 Degree Light-field Video

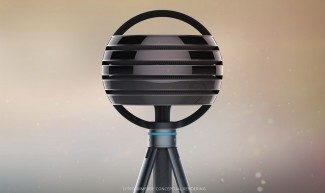

As if ‘static’ light-field capture wasn’t already incredible enough, Lytro have now announced they’re taking a huge evolutionary leap. They’ve developed a camera capable of capturing light-fields in motion, in stereoscopic 3D and in 360 degrees. Lytro’s new Immerge camera has stackable light-field sensor arrays that can pull in light within its half-metre horizontal volume, from every angle, at a quick enough rate to render high resolution, high frame rate data which can then be used to create immersive video. With input from suitable hardware (i.e. a positionally tracked VR headset) the data allows the user six degrees of freedom of movement within that cinematic experience. This has the potential to create not only a much more immersive viewing experience but also to increase user comfort when compared with static, linear 360 movies viewed in VR.

However, the benefits when applied to virtual reality extend beyond positional tracking. Because Immerge captures light as data across the camera’s volume, that light is not bound by the constraints of any lenses focusing that light, as would be the case in a traditional rig. This means that things like field of view and IPD are freely configurable per-user and VR headset, all from one set of captured data. Considering the difficulties presented by existing multi-camera 360 arrays (fixed lenses, fixed FOV, fixed IPD in stereoscopy), those benefits alone are potentially huge for VR video as a medium.
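To illustrate why a configurable IPD matters, here is a small sketch under my own assumptions (the function name and coordinate convention are hypothetical, not anything Lytro has described). With a light-field, the two eye viewpoints are simply positions chosen at playback time, so the same captured data can serve users with different eye spacing.

```python
def eye_positions(head, right, ipd_m):
    """Return (left_eye, right_eye) positions in metres.

    head  -- (x, y, z) centre point between the eyes
    right -- unit vector pointing to the viewer's right
    ipd_m -- interpupillary distance in metres, chosen per user
    """
    half = ipd_m / 2.0
    left_eye = tuple(h - half * r for h, r in zip(head, right))
    right_eye = tuple(h + half * r for h, r in zip(head, right))
    return left_eye, right_eye


if __name__ == "__main__":
    head = (0.0, 0.0, 0.0)
    right = (1.0, 0.0, 0.0)
    # Two hypothetical users with different IPDs, same captured scene.
    print(eye_positions(head, right, ipd_m=0.058))
    print(eye_positions(head, right, ipd_m=0.070))
```

A fixed two-lens stereo rig bakes one eye spacing into the footage; here the spacing is just a number supplied when the view is rendered.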

The Pipeline

Immerge isn’t just the camera however. It describes the suite of tools and hardware that make up a pipeline developed by Lytro to allow creators to capture and utilise the enormous amounts of detailed information the new camera is capable of capturing.

This high rate of data capture is actually Immerge’s Achilles heel. To deal with this, Lytro have developed the Immerge Server, a powerful, fibre-attached storage array capable of storing enough data for an hour’s worth of ‘filming’ before offloading that data elsewhere. The server comprises a dense array of fast storage and processing power to preview renders of the image data stored on it. Thanks to high-bandwidth PCI-E over fibre connectivity, the server can be situated around 150 metres from the camera – important when it’s capturing all angles of a film set.

Directors can control the entire process using tablet software which communicates wirelessly with the server, allowing them to preview footage and control camera actions from remote vantage points.

Once data is offloaded from the server, the true benefits of Immerge’s light-field data capture system really come into play. Because Immerge captures three-dimensional data, ‘footage’ can be placed into VFX packages like Nuke with CGI elements accurately placed within the scenes alongside the real-time photography.

For ‘standard’ editing, Lytro are working on plugins for industry favourite packages like Final Cut and Adobe Premiere. The plugins will allow editors to render the captured data and retain the flexibility of use light-fields provide.

Experiencing the Results

All of this is well and good, but none of this impressive technology is much use if no-one’s able to experience the results.

So, the final link in Lytro’s Immerge pipeline is a player capable of streaming compressed versions of the edited 360 degree light-field footage from the cloud. Initially targeting high-end (i.e. desktop-class) VR headsets launching next year, the ‘interactive Light Field video playback engine’ is no mere ‘player’. Using predictive algorithms, the local application will buffer certain amounts of light-field data to cope with the viewer’s choice of head movements. Only areas within view will be rendered, plus whatever extra is required to deal with quick head movements where necessary.

Of course, this won’t help when the user does something entirely unexpected, like pull a sudden 360. In this case there will be a lower definition spherical view presented to the viewer, allowing the streamed data to catch up in order to render a full resolution light-field presentation once again.
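Lytro haven’t detailed how the playback engine makes these decisions, so the following is only a sketch of the general idea under my own assumptions: the scene is split into a hypothetical 12 sectors, head motion is predicted by simple linear extrapolation of yaw, and all names and parameters are invented for illustration. It shows buffering the current and predicted view plus a margin, then falling back to a low-definition view when the viewer looks somewhere that isn’t buffered.

```python
import math

SECTORS = 12  # assumption: 360 degrees split into 30-degree sectors


def sectors_in_view(yaw_deg, fov_deg):
    """Indices of the sectors overlapped by a view centred on yaw_deg."""
    width = 360.0 / SECTORS
    first = math.floor((yaw_deg - fov_deg / 2.0) / width)
    last = math.floor((yaw_deg + fov_deg / 2.0) / width)
    return {i % SECTORS for i in range(first, last + 1)}


def sectors_to_buffer(yaw_deg, yaw_rate_deg_s, fov_deg, lookahead_s, margin_deg):
    """Sectors worth fetching: the current view and the predicted view, each padded."""
    predicted_yaw = yaw_deg + yaw_rate_deg_s * lookahead_s  # linear extrapolation
    padded_fov = fov_deg + 2.0 * margin_deg
    return sectors_in_view(yaw_deg, padded_fov) | sectors_in_view(predicted_yaw, padded_fov)


def quality_for_view(yaw_deg, fov_deg, buffered):
    """'full' if every visible sector is buffered, otherwise the low-definition fallback."""
    return "full" if sectors_in_view(yaw_deg, fov_deg) <= buffered else "low"


if __name__ == "__main__":
    buffered = sectors_to_buffer(yaw_deg=0.0, yaw_rate_deg_s=60.0,
                                 fov_deg=90.0, lookahead_s=0.5, margin_deg=15.0)
    print(quality_for_view(20.0, 90.0, buffered))   # small head turn
    print(quality_for_view(180.0, 90.0, buffered))  # sudden about-face
```

A real player would presumably predict pitch, roll and position as well, and weigh the margin against available bandwidth; the sketch keeps to yaw alone to stay readable.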

These headsets are positionally tracked of course, so viewers will in theory be able to utilise six degrees of freedom of movement within the cinematic experience, as long as they stay within the confines of the original camera’s volume. This means that, for seated experiences at least, the size of Immerge’s camera arrays should provide enough volume for parallax movement by most users.
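As a rough illustration of that constraint, here is a sketch which assumes the capture volume is a sphere half a metre across, centred on the camera position. The true shape of Immerge’s volume hasn’t been specified, and the function name is hypothetical.

```python
import math


def inside_capture_volume(head_offset_m, diameter_m=0.5):
    """True if the head is within an assumed spherical capture volume.

    head_offset_m -- (x, y, z) offset in metres from the original camera centre
    diameter_m    -- assumed diameter of the capture volume (half a metre here)
    """
    distance = math.sqrt(sum(c * c for c in head_offset_m))
    return distance <= diameter_m / 2.0


if __name__ == "__main__":
    print(inside_capture_volume((0.1, 0.0, 0.1)))  # leaning slightly in a chair
    print(inside_capture_volume((0.3, 0.0, 0.0)))  # leaning well out to one side
```

A player might use a check like this to decide when to fade or clamp the view as the viewer drifts towards the edge of the captured data.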

Lytro openly admit that there is still work to be done at this end of the pipeline, but Lytro CEO Jason Rosenthal assured me that the viewer would be scalable enough to run on low-power hardware like Gear VR for example.

Network bandwidth and latency are issues of course when streaming from the web over variable data rates, so it’s likely there will need to be a healthy buffer of pre-loaded data on any device presenting Immerge footage.

The Practicalities and the Cost

All of this cutting edge hardware and software comes at a cost of course, and Lytro are the first to admit acquiring the equipment detailed above won’t be cheap. So the company intends to provide suites of equipment, from camera to server, configured to suit budgets on a rental basis.

The Immerge camera is actually designed with this in mind, it turns out. Each of the horizontal sensor array ‘slices’ is removable, reducing weight at the expense of fidelity and camera volume. So as well as reducing heft, removing slices also reduces cost. That’s the theory at least.

Of course, Lytro aren’t talking hard numbers just yet. For the moment though, it’s likely this tech will remain outside the reach of micro-budget film-makers.

When?

Lytro’s CEO Jason Rosenthal assured me that the company are looking to reach market (that is, to allow people to view 360 light-field video on their first generation headsets) in the first half of 2016. The company are shooting test footage right now and they have content partners (detailed below) in line to start shooting with their equipment.

In the End, It’s All About the Content

Yes it is, and Lytro are acutely aware of this. Luckily, some of the best pioneers in immersive cinema are on-board. And it seems that, mostly due to frustrations and limitations of current 360 camera technology, it wasn’t too hard to persuade them.

Lytro have asked some of the best in the field to create content using the Immerge platform. Felix & Paul, Vrse and WEVR will get early access to this technology to create new films with light-field technology. For the likes of Anthony Batt, EVP at WEVR, it’s about time.

“Creatively we’re going through the exercise of making a lot of immersive VR with live action video and CG and compositing both together,” says Batt. “We’re finding all the pain points. Camera systems are a pain point, post production is a pain point because it is all linear video, distribution and playback is also a pain point. So getting your content onto a system is persnickety at best. We’re still stumbling on crude tools. We’re stuck on old tools for a new medium.”

No content is available to watch as of yet unfortunately, but I was told that this will change soon.

The Future

Just imagine if one of your favourite movies had been shot using light-field technology. Imagine watching Star Wars over and over again, viewing it from a different angle, such that every subsequent viewing was different from the last. Being able to move around a movie scene is not just potentially nausea diminishing, it’s a way to feel like you’re in the scene set with the actors, that old 3D movie advertising trope made reality.

“VR is the next wave in cinematic content, and immersive storytellers have been seeking technology that allows them to fully realize their creative vision,” said Jason Rosenthal, CEO of Lytro. “We believe the power of Light Field will help VR creators deliver on the promise of this new medium. Lytro Immerge is an end-to-end Light Field solution that will provide all the hardware, software and services required to capture, process, edit and playback professional grade cinematic content.”

There’s no doubt that 360 light-field photography answers many of the gripes currently felt by film-makers trying to create for VR. Its sheer flexibility means it’s also a good fit for expanding the scope of VR cinema, easing potentially very tricky VFX compositing processes. Not to mention the benefits to the viewer, with potentially universal FOV and IPD across all users and hardware.

However, despite light-field’s obvious departure from traditional film-making, the delivery of the resulting content remains challenging. If the solution is to be cloud rendered and streamed, then you’re going to need some pretty hefty bandwidth to enjoy a high quality experience. But with local buffering of data and some clever predictive rendering, it seems that these challenges may be surmountable too.

Nevertheless, if Lytro can pull off everything they’ve talked about, the breadth and technical capabilities of the Immerge system could be enough to place them as the front-runners in the race to provide a truly workable VR video pipeline.