The Meta 2 development kit, made available for pre-order by the company today, isn’t perfect. But then again, neither was the Rift DK1, and it didn’t need to be. It only needed to be good enough that, with just a little imagination, people could extrapolate and see what VR could be.

Without an inexpensive and widely available development kit like the DK1, VR would not be where it is today. There are lots of great companies and incredible products now in the VR space, but there is no arguing that Oculus catalyzed the re-emergence of consumer VR, or that the Rift DK1 was the opening act of a play that is on its way to a grand climax.

Augmented reality, on the other hand, hasn’t yet seen a device like the Rift DK1—an imperfect, but widely available and low cost piece of hardware that makes an effortless jumping-off point for the imagination.

Meta’s first development kit, the Meta 1, was not that device. It was too rudimentary; it still asked for too much faith from the uninitiated. But Meta 2 could change all of that.

New Display Tech

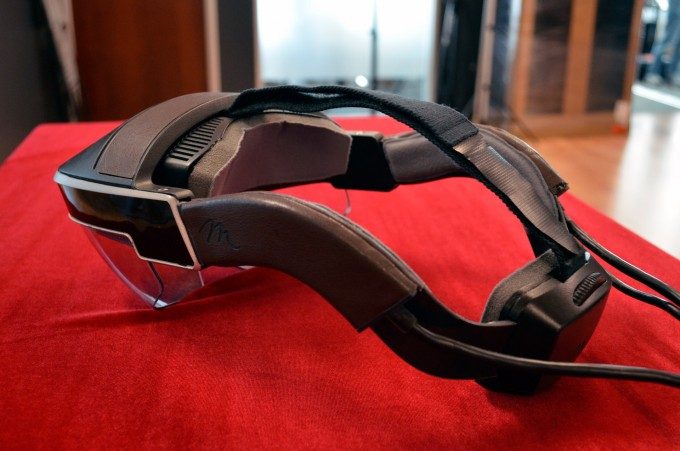

Meta 2’s biggest change is its drastically different display technology, which yields a hugely improved field of view and resolution compared to its predecessor. The unit now sports a 2560×1440 (1280×1440 per eye) resolution and a 90 degree diagonal field of view, compared to the original’s 960×540 per-eye resolution and paltry 36 degree field of view.

The new display tech uses a smartphone display mounted above a plastic shield, which serves as the reflective medium that bounces light from the display into your eyes. On the inside, the shield is concave, shaped to aim one half of the smartphone’s display into your left eye and the other half into your right. These concave segments have a faintly silvered coating that reflects light arriving from the smartphone display above while still letting light from the outside world pass through to your eyes. The result is effectively a transparent display that doesn’t darkly tint the outside world.

Meta has honed this display system quite well and the result is a reasonably sharp and bright AR image that appears to float out in front of you. The improvement in the field of view from Meta 1 to Meta 2 is night and day. It makes a huge difference to immersion and usability.

Those of you with us in the Rift DK1 days will recall that one of the device’s smart choices was to saddle up with mobile device displays, effectively piggybacking on the benefits of a massive industry which was independently driving the production of affordable, high performance, high resolution displays. Meta has also made this call and it will likely pay dividends compared to more fringe proprietary display tech like waveguides and tiny projectors that don’t get the ‘free R&D’ of healthy consumer electronics competition.

Hands-on Demo

I recently joined Meta at their offices in Redwood City, CA to see the Meta 2 development kit firsthand. They took me into a fairly dim room and fitted the device onto my head. For a development kit, the fit is reasonably good; most of the weight sits atop your head, unlike many VR headsets, which squeeze against the back of your head and your face for grip. The plastic shield in front of your eyes doesn’t actually touch your face at all.

It should be noted that Meta 2 is a tethered device which requires the horsepower of a computer to display everything I was about to see.

Meta walked me through several short demos, showing things like a miniature Earth with a satellite orbiting it floating on the table in front of me, an augmented reality TV screen, and a multi-monitor setup, all projected out into my reality right there before me. When I held my hand up in front of the AR projections, I saw reasonably good occlusion: the objects would disappear behind my hand, as if they were really behind it. The occlusion was good enough to show me where things are headed.

In the multi-monitor AR segment of the demo, I was able to reach my hand out into each monitor and make a fist as a grabbing gesture, allowing me to rearrange the monitors as desired. On one of the monitors I saw an e-commerce website mocked up in a web browser, and when I touched the picture of a shoe on the AR display, a 3D model of the shoe floated out into the space in front of me.

With the hand tracking built into Meta 2, I was able to reach out and use the fist gesture to move the shoe about, somewhat haphazardly due to poor hand tracking, and also use two fists in unison as a sort of pinch-zoom to make the shoe larger and smaller. The hand-tracking performance I experienced in this demo left much to be desired, but the company showed me some substantial hand-tracking improvements coming down the pipe, and said that the Meta 2 development kit would ship with those improvements built in. We plan to go into more detail on the newer hand tracking soon.

One ostensibly ‘big’ moment of the demo was when they showed a “holographic call” where I saw a Meta representative projected on the table in front of me, live from a nearby room. While neat, this sort of functionality presupposes that we all have a Kinect-like device set up in our homes somewhere, allowing the other user to see us in 3D as well. In this demo example, since I had no gesture camera pointed at me, the person I was seeing had no way to see me on the other end.

An Obvious Flaw (Didn’t Stop the Rift DK1 Either)

All of the demos I saw were fine and dandy and did a good job of showing some practical future uses of augmented reality. The new Meta 2 display technology sets a good minimum bar for what feels sharp and immersive enough to be useful in the AR context, but the headtracking performance was simply not up to par with the other aspects of the system.

If you want great AR, you need great headtracking at a bare minimum.

A major part of believing that something is really part of your reality is that when you look away and look back, it hasn’t moved at all. If there’s an augmented reality monitor floating above my desk, it lacks a certain amount of reality until it can appear to stay properly affixed in place.

In order to achieve that, you need to know precisely where the headset is in space and where it’s going. Meta 2 is doing on-board inside-out positional and rotational tracking with sensors on the headset. And while you couldn’t say it doesn’t work, it is far from a solved problem. If you turn your head about the scene with any reasonable speed, you’ll see the AR world become completely de-synced from the real world as the tracking latency simply fails to keep up.

Even under demo conditions and slow head movements, notice how bouncy the AR objects are in this clip.

Projected AR objects will fly off the table until you stop turning your head, at which point they’ll slide quickly back into position. The whole thing is jarring and means the brain has little time to build the AR object into its map of the real world, breaking immersion in a big way.
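To put rough numbers on that effect: if the rendered view lags the head by some latency while the head turns at a steady rate, a world-locked object is drawn offset by roughly the angle the head sweeps during that delay. The sketch below computes that lag angle and the corresponding apparent shift for an object at a given distance. It is a back-of-the-envelope model under stated assumptions (constant head speed, no pose prediction), and the head speeds, 50 ms latency, and 0.7 m distance in the usage loop are hypothetical values chosen for illustration, not measurements of Meta 2.

```python
import math


def angular_error_deg(head_speed_deg_s: float, latency_ms: float) -> float:
    """Angle by which a world-locked object lags behind while the head turns.

    Assumes the head rotates at a constant angular velocity for the whole
    latency window and that no pose prediction is applied (a simplification).
    """
    return head_speed_deg_s * (latency_ms / 1000.0)


def apparent_shift_m(distance_m: float, error_deg: float) -> float:
    """Straight-line (chord) distance between where an object should appear
    and where it is drawn, for an object distance_m away from the head."""
    return 2.0 * distance_m * math.sin(math.radians(error_deg) / 2.0)


if __name__ == "__main__":
    # Hypothetical inputs for illustration only, not measured Meta 2 figures.
    latency_ms = 50.0
    distance_m = 0.7  # roughly an object sitting on a desk in front of you
    for speed in (30.0, 100.0, 200.0):  # slow, moderate, and quick head turns
        err = angular_error_deg(speed, latency_ms)
        shift_cm = apparent_shift_m(distance_m, err) * 100.0
        print(f"{speed:5.0f} deg/s -> {err:4.1f} deg lag, {shift_cm:4.1f} cm shift")
```

The takeaway is that the error scales linearly with both head speed and latency, which is why objects look stable during slow movements but visibly fly off the table during quick turns, then snap back once the head stops.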

Granted, computer vision remains one of the most significant challenges facing augmented reality in general, not just Meta.

But, returning to the Rift DK1: Oculus knew they needed lower latency and, quite importantly, positional tracking. They didn’t have a solution when they shipped the DK1, so the unit simply went out the door with rotational tracking only and more latency than they would have preferred. Yet even without positional tracking altogether, the DK1 went on to play an important part in the resurgence of VR by doing enough things well enough, and by being that affordable, accessible jumping-off point for the imagination.

Meta 2 is in the same boat (albeit somewhat more expensive, though still under $1,000). It has a number of great starting points and a few major things that still need to be solved. But with any luck, it will be the jumping-off point the world needs to see a VR-like surge of interest and development in AR.

AR is Where VR Was in 2013

The careful reader of this article’s headline (Meta 2 Could Do for Augmented Reality What Rift DK1 Did for Virtual Reality) will spot a double entendre.

Many people right now think that the VR and AR development timelines are right on top of each other—and it’s hard to blame them because superficially the two are conceptually similar—but the reality is that AR is, at best, where VR was in 2013 (the year the DK1 launched). That is to say, it will likely be three more years until we see the ‘Rifts’ and ‘Vives’ of the AR world shipping to consumers.

Without such recognition, you might look at Meta’s teaser video and call it overhyped. But with it, you can see that it might just be spot on, albeit on a delayed timescale compared to where many people think AR is today.

Like Rift DK1 in 2013, Meta 2 isn’t perfect in 2016, but it could play an equally important role in the development of consumer augmented reality.