SamMobile has discovered a leaked feature on some Samsung smartphones that is expected to let them capture 3D photos and videos specifically for “Galaxy XR headsets.”

Late last year, Samsung revealed its forthcoming XR headset, codenamed ‘Project Moohan’ (Korean for ‘Infinite’), which is slated to compete with Apple Vision Pro sometime later this year.

The headset’s release date, price, and even its official name are all still a mystery. However, a recent feature leak uncovered by SamMobile’s Asif Iqbal Shaik reveals that Samsung smartphones could soon be able to capture 3D photos and video, just as the iPhone does for Vision Pro.

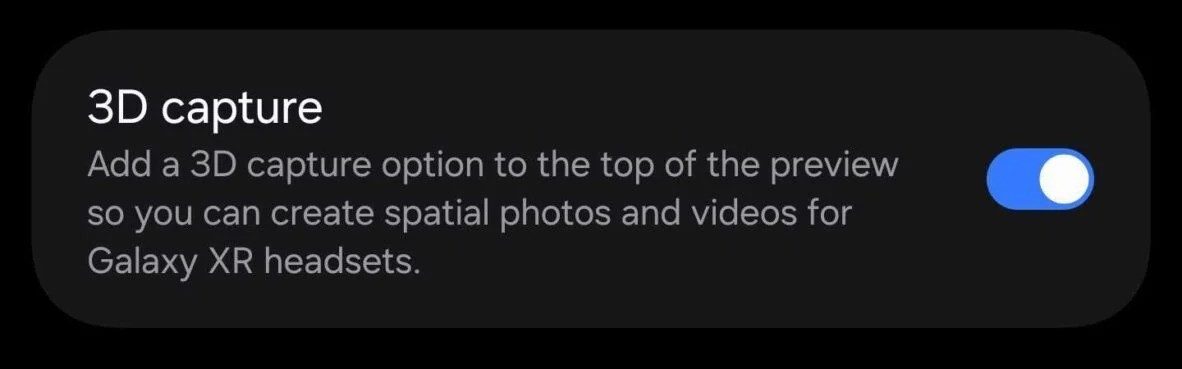

According to Shaik, the latest version (4.0.0.3) of the Camera Assistant app contains an option to capture specifically for “Galaxy XR headsets.” The option is hidden in the app update delivered to the Galaxy S25 FE; however, installing the same APK file on a Galaxy S25 Ultra reveals it, as seen above.

Speculation over the plural “headsets” aside, Samsung has stated on record multiple times since Project Moohan’s late 2024 unveiling that the mixed reality headset will release later this year. That makes the recent software slip an understandable one, as the company ostensibly seeks to match Vision Pro feature-for-feature on its competing smartphones when the headset arrives.

Slated to be the first XR headset to run Google’s Android XR operating system, Moohan could release sooner than you think. A recent report from Korea’s Newsworks claims the device will be featured at a Samsung product event on September 29th. Notably, Moohan was a no-show at Samsung’s Galaxy event earlier this month, which saw the unveiling of the Galaxy S25 FE, Galaxy Tab S11, and Galaxy Tab S11 Ultra.

Newsworks further suggests Moohan could launch first in South Korea on October 13th, priced somewhere between ₩2.5 million and ₩4 million (roughly $1,800 to $2,900 USD), with a global rollout set to follow.