On February 18th, Intel held a developer lab in Rocklin, CA (north of Sacramento) in the new ‘Hacker Lab’ space at Sierra College. The event aimed to teach developers the ins and outs of building with Intel’s latest RealSense 3D Camera, which supports interactive gesture input thanks to its 1080p color camera and depth sensor. Road to VR worked with Intel to find a local VR enthusiast to report from the event.

This guest article is written by Ally Grace Esparza (@allygrace), a screenwriter living in Sacramento, CA, where she teaches after-school engineering classes for K-5th graders. Her interest in VR began with The Matrix and Ready Player One, and has grown as the technology has become more accessible in recent years. Her research into virtual reality and artificial intelligence is primarily for a screenplay involving the technologies.

Upon arriving at the RealSense dev lab, you could sense the excitement as the organizers passed out the computers and cameras. The camera dev kit plugs into a computer, but Intel has recently started building RealSense directly into laptops and desktops alike. If these machines catch on, depth sensing and gesture input could become as common on computers as the webcam, giving VR and other applications a standard method of gesture control that goes beyond the abstract interfaces of the keyboard and mouse.

The day started with a short presentation highlighting the types of input that the camera can sense—hands, face, speech, and environment—as well as some of the features of the RealSense technology, to aid in the ideation process.

Developers were shown how the camera could be used to create a digital green screen, essentially inserting the user into a digital environment while keeping them tracked in 3D, which is great for immersive learning, working, and playing. The presenters stressed the importance of creating commands that come naturally and aren’t too intricate or taxing, focusing on ease of use and avoiding arm fatigue. They also showed how to create dynamic input by combining gesture, speech, facial, and depth analysis in gameplay.

Although a VR headset would block eye tracking, RealSense could potentially track mouth movements to map speech onto an avatar, provided its head-tracking algorithms are tuned for a user wearing a headset.

In education, the RealSense camera can help create interactive lessons and stories that bring 2D and 3D objects into the experience, or sense facial and emotional cues so the computer knows when to step in and help. While brainstorming a project for the camera, I thought about tracking these cues over time to determine when a student is struggling, what comes easily, and what the student enjoys doing. This would build a learning profile for each student, enabling more insightful and effective learning going forward.

Christopher Haupt of MobiRobo, a local independent game studio, was most excited about the educational opportunities RealSense affords, specifically letting children perform experiments that were previously too expensive or dangerous to bring into a classroom.

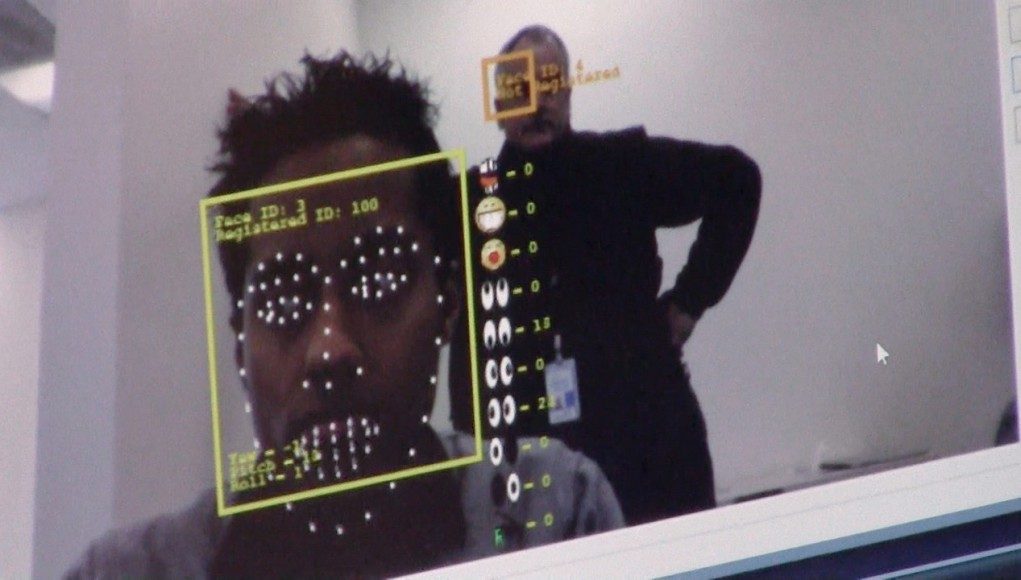

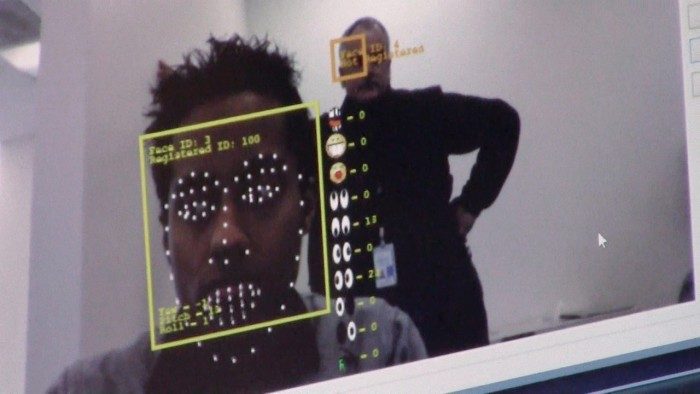

By focusing on 3D environments and detailed tracking of fingers and faces, RealSense places Intel at the forefront of accessible, advanced gesture and facial recognition technology. With 22 recognition points for the hands and 78 points of facial feature detection, it enables remarkably accurate facial and avatar mapping.

The camera can detect raised eyebrows not only as a sign of amazement but also as a command (to shoot lasers, for instance), or let you create plasma clouds with the wave of a hand. Letting games read our often subconscious expressions could enable characters and gameplay that react and transform according to our emotions.

The RealSense camera isn’t limited to education and entertainment, though. I overheard developers discussing a program that senses when a driver is becoming fatigued and switches on the radio or air conditioner to keep them alert. Others talked about using it as a key to your home or car that only unlocks for people you have allowed in. Or, my idea: a Facial Rec-Ignition that only starts your car for people you approve and sends the owner a picture of anyone else trying to start it, in an attempt to eliminate car theft or to stop your teenager from taking it without permission!

Throughout the day, prizes were awarded for ideas on how to use the RealSense camera. It started with an exploration of ideas, which were shared on Twitter. One of them was recognized for its potential to help the deaf/mute community:

With my RealSense gesture recognition I will help deaf/mute community use sign langauge to communicate over video chat. #RealSense

— German (@germano18) February 18, 2015

Another prize, an Intel-equipped tablet, went to a program called the ‘Intexticator’. Aimed at the parents of newly driving teens, it would sense a driver’s head movement relative to their phone and alert a third party that the individual was texting while driving.

The grand prize of an Intel-equipped laptop went to a video game that used the camera’s gesture recognition: your head movements control the character’s movement, widening and closing your arms makes him grow and shrink, and smiling (yes, smiling) shoots enemies.

All in all, RealSense’s gesture input feels like the future of how we’ll interact with computers, providing the next evolutionary step past today’s touchscreens.

For more information, you can visit the RealSense Resource Center or the Intel RealSense main page.

This article was sponsored by Intel and written by Ally Grace, an independent VR enthusiast who attended Intel’s RealSense Developer Lab event.