Eye-tracking—the ability to quickly and precisely measure the direction a user is looking while inside of a VR headset—is often talked about within the context of foveated rendering, and how it could reduce the performance requirements of XR headsets. And while foveated rendering is an exciting use-case for eye-tracking in AR and VR headsets, eye-tracking stands to bring much more to the table.

Updated – May 2nd, 2023

Eye-tracking has been talked about as a distant technology for XR for many years, but the hardware is finally becoming widely available to developers and customers. PSVR 2 and Quest Pro are the most visible examples of headsets with built-in eye-tracking, along with the likes of Varjo Aero, Vive Pro Eye and more.

With this momentum, in just a few years we could see eye-tracking become a standard part of consumer XR headsets. When that happens, there’s a wide range of features the tech can enable to drastically improve the experience.

Foveated Rendering

Let’s start with the one that many people are already familiar with. Foveated rendering aims to reduce the computational power required for displaying demanding AR and VR scenes. The name comes from the ‘fovea’, a small pit at the center of the human retina which is densely packed with photoreceptors. It’s the fovea which gives us high resolution vision at the center of our field of view; meanwhile our peripheral vision is actually very poor at picking up detail and color, and is better tuned for spotting motion and contrast. You can think of it like a camera which has a large sensor with just a few megapixels, and another smaller sensor in the middle with lots of megapixels.

The region of your vision in which you can see in high detail is actually much smaller than most think: just a few degrees across the center of your view. The difference in resolving power between the fovea and the rest of the retina is so drastic that without your fovea, you couldn’t make out the text on this page. You can see this easily for yourself: if you keep your eyes focused on this word and try to read just two sentences below, you’ll find it’s almost impossible to make out what the words say, even though you can see something resembling words. People seem to overestimate the foveal region of their vision because the brain does a lot of unconscious interpretation and prediction to build a model of how we believe the world to be.

Foveated rendering aims to exploit this quirk of our vision by rendering the virtual scene in high resolution only in the region that the fovea sees, while drastically cutting down the complexity of the scene in our peripheral vision where the detail can’t be resolved anyway. Doing so allows us to focus most of the processing power where it contributes most to detail, while saving processing resources elsewhere. That may not sound like a huge deal, but as the display resolution and field-of-view of XR headsets increase, the power needed to render complex scenes grows quickly.
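To get a rough feel for the savings, here is a minimal Python sketch that estimates how much shading work remains when resolution drops with angular distance from the gaze point. The three eccentricity tiers, the 100 degree field of view, the 2048 pixel panel, and the function names are all illustrative assumptions rather than values from any shipping headset. The sketch also treats pixels-per-degree as constant across the display, which real headset lenses do not.

```python
import math

def resolution_scale(eccentricity_deg):
    """Hypothetical per-axis resolution scale at an angle from the gaze point."""
    if eccentricity_deg < 10.0:   # assumed foveal region: full resolution
        return 1.0
    if eccentricity_deg < 25.0:   # assumed mid-periphery: half resolution
        return 0.5
    return 0.25                   # assumed far periphery: quarter resolution

def shaded_fraction(width_px, height_px, fov_h_deg, gaze_px, tile_px=32):
    """Estimate shading work relative to rendering every tile at full resolution."""
    px_per_deg = width_px / fov_h_deg  # simplification: uniform angular density
    work = 0.0
    tiles = 0
    for top in range(0, height_px, tile_px):
        for left in range(0, width_px, tile_px):
            # Angular distance from the gaze point to the tile center.
            cx = left + tile_px / 2
            cy = top + tile_px / 2
            ecc = math.hypot(cx - gaze_px[0], cy - gaze_px[1]) / px_per_deg
            scale = resolution_scale(ecc)
            work += scale * scale  # scaling both axes reduces pixel count quadratically
            tiles += 1
    return work / tiles

fraction = shaded_fraction(2048, 2048, 100.0, gaze_px=(1024, 1024))
print(f"Shading work vs. full resolution: {fraction:.0%}")
```

Because the scale applies to both axes, even modest reductions in the periphery remove a large share of the pixels that need shading, which is why the technique matters more as resolution and field-of-view grow.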

Eye-tracking of course comes into play because we need to know where the center of the user’s gaze is at all times, quickly and with high precision, in order to pull off foveated rendering. While it’s difficult to pull this off without the user noticing, it’s possible and has been demonstrated quite effectively on recent headsets like Quest Pro and PSVR 2.

Automatic User Detection & Adjustment

In addition to detecting movement, eye-tracking can also be used as a biometric identifier. That makes eye-tracking a great candidate for multiple user profiles across a single headset—when I put on the headset, the system can instantly identify me as a unique user and call up my customized environment, content library, game progress, and settings. When a friend puts on the headset, the system can load their preferences and saved data.

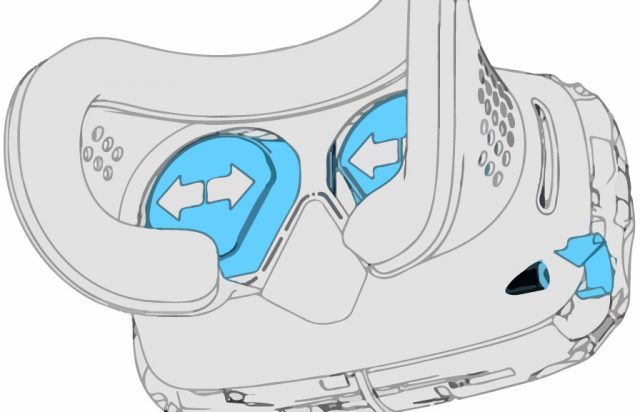

Eye-tracking can also be used to precisely measure IPD (the distance between one’s eyes). Knowing your IPD is important in XR because it’s required to move the lenses and displays into the optimal position for both comfort and visual quality. Unfortunately, many people understandably don’t know their IPD off the top of their head.

With eye-tracking, it would be easy to instantly measure each user’s IPD and then have the headset’s software assist the user in adjusting the headset’s IPD to match, or warn users that their IPD is outside the range supported by the headset.

In more advanced headsets, this process can be fully automatic: IPD can be measured invisibly, and a motorized IPD adjustment can move the lenses into the correct position without the user needing to be aware of any of it, as on the Varjo Aero, for example.
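The IPD check described above can be sketched in a few lines of Python. This assumes the eye-tracker reports each pupil center as a 3D position in millimeters in a shared headset coordinate frame. The supported range of 58 to 72 mm, the tolerance, and the function names are hypothetical and not taken from any particular headset.

```python
import math

def measure_ipd_mm(left_pupil_mm, right_pupil_mm):
    """Distance between the two tracked pupil centers, in millimeters."""
    return math.dist(left_pupil_mm, right_pupil_mm)

def ipd_guidance(ipd_mm, lens_spacing_mm, min_mm=58.0, max_mm=72.0, tolerance_mm=0.5):
    """Return a user-facing hint; the range and tolerance are assumed values."""
    if ipd_mm < min_mm or ipd_mm > max_mm:
        return (f"Your IPD ({ipd_mm:.1f} mm) is outside the supported "
                f"range of {min_mm:.0f} to {max_mm:.0f} mm.")
    delta = ipd_mm - lens_spacing_mm
    if abs(delta) <= tolerance_mm:
        return "Lens spacing matches your IPD."
    direction = "wider" if delta > 0 else "narrower"
    return f"Adjust the lenses {abs(delta):.1f} mm {direction}."

ipd = measure_ipd_mm((-32.0, 0.0, 0.0), (32.0, 0.0, 0.0))
print(ipd_guidance(ipd, lens_spacing_mm=60.0))
```

On a headset with motorized adjustment, the same `delta` value could drive the lens motors directly instead of being shown to the user.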

Varifocal Displays

The optical systems used in today’s VR headsets work pretty well but they’re actually rather simple and don’t support an important function of human vision: dynamic focus. This is because the display in XR headsets is always the same distance from our eyes, even when the stereoscopic depth suggests otherwise. This leads to an issue called vergence-accommodation conflict. If you want to learn a bit more in depth, check out our primer below:

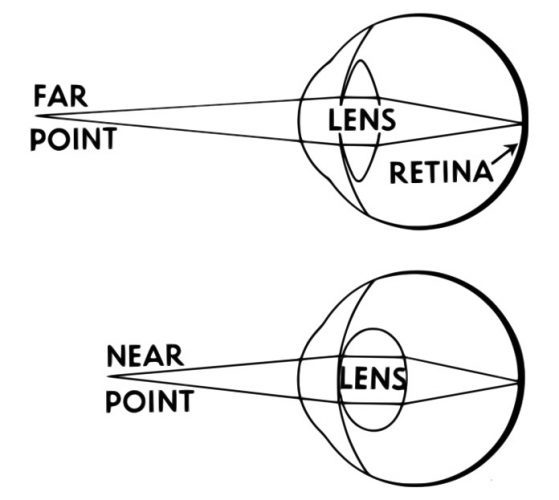

Accommodation

In the real world, to focus on a near object the lens of your eye bends to make the light from the object hit the right spot on your retina, giving you a sharp view of the object. For an object that’s further away, the light is traveling at different angles into your eye and the lens again must bend to ensure the light is focused onto your retina. This is why, if you close one eye and focus on your finger a few inches from your face, the world behind your finger is blurry. Conversely, if you focus on the world behind your finger, your finger becomes blurry. This is called accommodation.

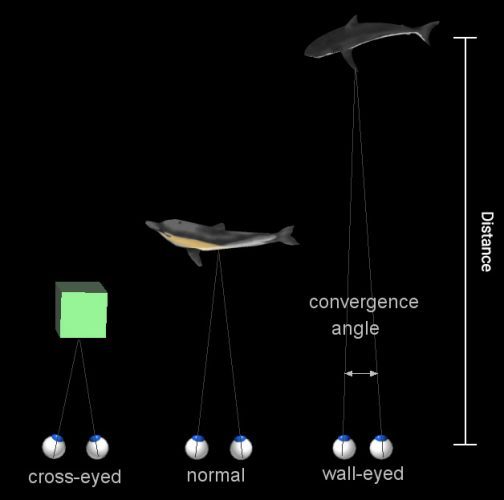

Vergence

Then there’s vergence, which is when each of your eyes rotates inward to ‘converge’ the separate views from each eye into one overlapping image. For very distant objects, your eyes are nearly parallel, because the distance between them is so small in comparison to the distance of the object (meaning each eye sees a nearly identical portion of the object). For very near objects, your eyes must rotate inward to bring each eye’s perspective into alignment. You can see this too with our little finger trick as above: this time, using both eyes, hold your finger a few inches from your face and look at it. Notice that you see double-images of objects far behind your finger. When you then focus on those objects behind your finger, now you see a double finger image.

The Conflict

With precise enough instruments, you could use either vergence or accommodation to know how far away an object is that a person is looking at. But the thing is, both accommodation and vergence happen in your eye together, automatically. And they don’t just happen at the same time—there’s a direct correlation between vergence and accommodation, such that for any given measurement of vergence, there’s a directly corresponding level of accommodation (and vice versa). Since you were a little baby, your brain and eyes have formed muscle memory to make these two things happen together, without thinking, anytime you look at anything.
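The coupling between the two can be made concrete with a little geometry. The Python sketch below assumes a simplified model: an object straight ahead, an IPD of 63 mm (an assumed typical value), vergence expressed as the angle between the two lines of sight, and accommodation expressed in diopters (the reciprocal of the distance in meters). The function names are illustrative.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight for an object straight ahead."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

def accommodation_diopters(distance_m):
    """Focus demand on the eye's lens: the reciprocal of distance in meters."""
    return 1.0 / distance_m

# Both values fall together as the object moves away, which is the
# one-to-one relationship the eyes and brain learn to rely on.
for d in (0.25, 0.5, 1.0, 2.0, 10.0):
    print(f"{d:5.2f} m  vergence {vergence_angle_deg(d):6.2f} deg  "
          f"accommodation {accommodation_diopters(d):5.2f} D")
```

In this model each distance maps to exactly one vergence angle and one accommodation value, so measuring either one is enough to recover the other.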

But when it comes to most of today’s AR and VR headsets, vergence and accommodation are out of sync due to inherent limitations of the optical design.

In a basic AR or VR headset, there’s a display (which is, let’s say, 3″ away from your eye) which shows the virtual scene, and a lens which focuses the light from the display onto your eye (just like the lens in your eye would normally focus the light from the world onto your retina). But since the display is a static distance from your eye, and the lens’ shape is static, the light coming from all objects shown on that display is coming from the same distance. So even if there’s a virtual mountain five miles away and a coffee cup on a table five inches away, the light from both objects enters the eye at the same angle (which means your accommodation—the bending of the lens in your eye—never changes).

That comes in conflict with vergence, which in such headsets is variable because we can show a different image to each eye. Being able to adjust the image independently for each eye, such that our eyes need to converge on objects at different depths, is essentially what gives today’s AR and VR headsets stereoscopy.
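The size of the mismatch can be estimated for the mountain and coffee cup example. The Python sketch below assumes the headset optics present the image at a single fixed focal distance of 1.3 m; that figure is a placeholder chosen for illustration, not a specification of any headset. The conflict is expressed in diopters as the gap between where the eyes converge and where they must focus.

```python
def demand_diopters(distance_m):
    """Optical demand of a distance, in diopters (1 / meters)."""
    return 1.0 / distance_m

def va_conflict_diopters(virtual_distance_m, focal_distance_m=1.3):
    """Gap between vergence depth (virtual object) and focus depth (fixed optics)."""
    return abs(demand_diopters(virtual_distance_m) - demand_diopters(focal_distance_m))

MOUNTAIN_M = 5 * 1609.344  # five miles in meters
CUP_M = 5 * 0.0254         # five inches in meters

print(f"Mountain: {va_conflict_diopters(MOUNTAIN_M):.2f} D of conflict")
print(f"Cup:      {va_conflict_diopters(CUP_M):.2f} D of conflict")
```

Under these assumptions the near object produces a far larger conflict than the distant one, which is consistent with close-up virtual objects being the harder case for fixed-focus optics.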

But the most realistic (and arguably, most comfortable) display we could create would eliminate the vergence-accommodation issue and let the two work in sync, just like we’re used to in the real world.

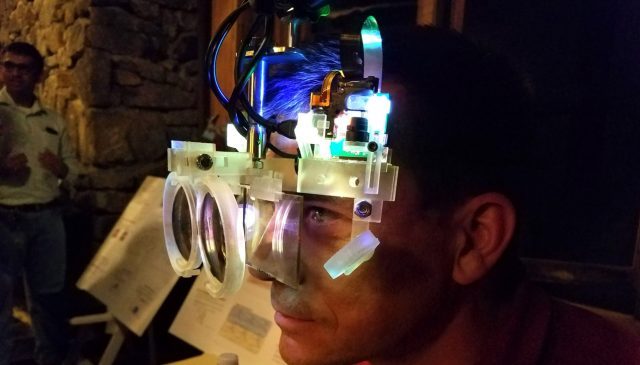

Varifocal displays (those which can dynamically alter their focal depth) are proposed as a solution to this problem. There are a number of approaches to varifocal displays, perhaps the simplest of which is an optical system where the display is physically moved back and forth from the lens in order to change focal depth on the fly.

Achieving such an actuated varifocal display requires eye-tracking because the system needs to know precisely where in the scene the user is looking. By tracing a path into the virtual scene from each of the user’s eyes, the system can find the point where those paths intersect, establishing the proper focal plane that the user is looking at. This information is then sent to the display to adjust accordingly, setting the focal depth to match the virtual distance from the user’s eye to the object.

A well implemented varifocal display could not only eliminate the vergence-accommodation conflict, but also allow users to focus on virtual objects much nearer to them than in existing headsets.

And well before we’re putting varifocal displays into XR headsets, eye-tracking could be used for simulated depth-of-field, which could approximate the blurring of objects outside of the focal plane of the user’s eyes.
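A simulated depth-of-field effect needs an estimate of how blurry each object should look given where the user is focused. The Python sketch below uses a common small-angle approximation in which the angular size of the blur is the pupil diameter multiplied by the defocus in diopters. The 4 mm pupil, the 20 pixels-per-degree display density, and the function names are assumptions for illustration.

```python
import math

def blur_angle_deg(object_distance_m, focus_distance_m, pupil_diameter_m=0.004):
    """Approximate angular blur diameter for an out-of-focus object."""
    defocus_diopters = abs(1.0 / object_distance_m - 1.0 / focus_distance_m)
    # Small-angle approximation: blur (radians) ~= aperture (m) * defocus (D).
    return math.degrees(pupil_diameter_m * defocus_diopters)

def blur_radius_px(object_distance_m, focus_distance_m, px_per_deg=20.0):
    """Blur radius in pixels for a display with the assumed angular density."""
    return 0.5 * blur_angle_deg(object_distance_m, focus_distance_m) * px_per_deg

# User is focused at 2 m (found via eye-tracking); blur grows for nearer objects.
for distance in (0.3, 1.0, 2.0, 10.0):
    print(f"{distance:5.1f} m -> blur radius {blur_radius_px(distance, 2.0):.2f} px")
```

The focus distance would come from the tracked gaze, and the resulting radius could feed a standard post-process blur.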

As of now, there’s no major headset on the market with varifocal capabilities, but there’s a growing body of research and development trying to figure out how to make the capability compact, reliable, and affordable.

Foveated Displays

While foveated rendering aims to better distribute rendering power between the part of our vision where we can see sharply and our low-detail peripheral vision, something similar can be achieved for the actual pixel count.

Rather than just changing the detail of the rendering on certain parts of the display vs. others, foveated displays are those which are physically moved (or in some cases “steered”) to stay in front of the user’s gaze no matter where they look.

Foveated displays open the door to achieving much higher resolution in AR and VR headsets without brute-forcing the problem by trying to cram pixels at higher resolution across our entire field-of-view. Doing so would not only be costly, but would also run into challenging power and size constraints as the number of pixels approaches retinal resolution. Instead, foveated displays would move a smaller, pixel-dense display to wherever the user is looking based on eye-tracking data. This approach could even lead to higher fields-of-view than could otherwise be achieved with a single flat display.

Varjo is one company working on a foveated display system. They use a typical display that covers a wide field of view (but isn’t very pixel dense), and then superimpose a microdisplay that’s much more pixel dense on top of it. The combination of the two means the user gets both a wide field of view for their peripheral vision, and a region of very high resolution for their foveal vision.
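Some back-of-the-envelope arithmetic shows why combining two displays is attractive. The Python sketch below compares a single panel at an assumed "retinal" density of 60 pixels per degree against a low-density background panel plus a small high-density inset. Every number here (the 100 degree field of view, the 15 and 60 pixels-per-degree densities, the 20 degree inset) is a hypothetical illustration and not a Varjo specification, and the calculation ignores lens distortion.

```python
def pixels_needed(fov_h_deg, fov_v_deg, px_per_deg):
    """Pixel count for a display covering a field of view at uniform density."""
    return round(fov_h_deg * px_per_deg) * round(fov_v_deg * px_per_deg)

# One panel at the assumed retinal density across the whole field of view.
uniform = pixels_needed(100, 100, 60)

# Foveated alternative: coarse wide panel plus a dense inset for the fovea.
background = pixels_needed(100, 100, 15)
inset = pixels_needed(20, 20, 60)
foveated = background + inset

print(f"Uniform panel:  {uniform:,} pixels per eye")
print(f"Foveated setup: {foveated:,} pixels per eye")
print(f"Reduction:      {uniform / foveated:.1f}x")
```

With these assumed figures the foveated arrangement needs roughly a tenth of the pixels, provided the dense region is actually where the user is looking, which is where eye-tracking comes in.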

Granted, this foveated display is still static (the high resolution area stays in the middle of the display) rather than dynamic, but the company has considered a number of methods for moving the display to ensure the high resolution area is always at the center of your gaze.