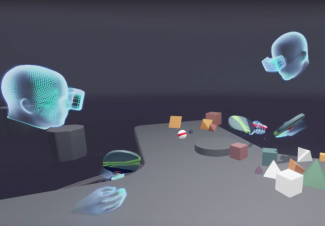

This experimental game not only lets two players, each immersed in VR via an HTC Vive headset, share the same virtual space; it also projects their real-world images into the application. And it’s all possible over an Internet connection.

The surface of possibilities afforded by single-player virtual reality experiences has barely been scratched, and yet there are developers already working on ways in which we can all share the same virtual space, even when we’re miles apart.

When Jasper Brekelmans and collaborator Jeroen de Mooij got access to a set of HTC Vive gear, they wanted to work towards an application that addressed their belief that “the Virtual Reality revolution needs a social element in order to really take off”. So they decided to build a multiplayer, cooperative virtual reality game which not only allowed physical space to be shared and mapped into VR, but also projected the players’ bodies in as well. The result is a cool-looking shared experience, only possible in VR.

The video above demonstrates the two developers working within the same physical space, with a point cloud of each person’s body, captured by a Microsoft Kinect for Windows depth camera, rendered at the correct position in VR. The benefit is that your in-game compatriot can see where you are even while blind to the real world, which is especially handy when you’re sharing the same physical space.

However, the real power of the technique comes when the two players are physically separated. The team purposefully built the system to be networkable, estimating that streaming each player’s point cloud data should be possible over an Internet connection with around 5Mb/s of bandwidth. Pretty impressive.
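To get a feel for what a 5Mb/s budget means for a point cloud stream, here is a rough back-of-the-envelope sketch. Only the 5Mb/s figure comes from the developers' estimate; the 30 frames per second rate, the 9 bytes per point (three 16-bit coordinates plus three colour bytes) and the function names are illustrative assumptions, and the sketch ignores any compression or protocol overhead the real system may use.

```python
# Rough bandwidth arithmetic for streaming an uncompressed point cloud.
# Only BANDWIDTH_BPS is taken from the article; everything else is assumed.

BANDWIDTH_BPS = 5_000_000  # ~5Mb/s, the developers' estimate
FPS = 30                   # assumption: point cloud frames sent per second
BYTES_PER_POINT = 9        # assumption: 3 x 16-bit position + 3 colour bytes


def points_per_frame(bits_per_second: int, fps: int, bytes_per_point: int) -> int:
    """Largest number of points per frame that fits in the bandwidth budget."""
    return bits_per_second // (8 * fps * bytes_per_point)


def required_bits_per_second(points: int, fps: int, bytes_per_point: int) -> int:
    """Bandwidth needed to send `points` points in every frame, uncompressed."""
    return points * bytes_per_point * 8 * fps


if __name__ == "__main__":
    budget = points_per_frame(BANDWIDTH_BPS, FPS, BYTES_PER_POINT)
    print(f"Points per frame within budget: {budget}")
    print(f"Bits per second for 10,000 points: "
          f"{required_bits_per_second(10_000, FPS, BYTES_PER_POINT)}")
```

Under these assumptions the budget works out to a couple of thousand points per frame per player, which suggests that any real system would lean on downsampling or compression to keep a recognisable body shape within that bandwidth.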

There is something bizarrely fun about simply messing around in virtual spaces, and adding another person to the mix, as demonstrated well by the recent Oculus Touch Toybox videos, turns everything up to 11. Geographically separated families sharing a multiplayer space to spend time together, for example, seems a particularly compelling possibility.

Whether this experiment will evolve into a full-blown project isn’t clear, but the idea is certainly one worth developing, in my opinion.