Co-founded by former CERN engineers who contributed to the ATLAS project at the Large Hadron Collider, CREAL3D is a Switzerland-based startup that’s created an impressive light-field display that’s unlike anything in an AR or VR headset on the market today.

At CES last week we saw and wrote about lots of cool stuff. But hidden in the less obvious places we found some pretty compelling bleeding-edge projects that might not be in this year’s upcoming headsets, but surely paint a promising picture for the next next-gen of AR and VR.

One of those projects wasn’t in CES’s AR/VR section at all. It was hiding in an unexpected place, one and a half miles away in an entirely different part of the conference, blending in as two nondescript boxes on a tiny table among a band of Swiss startups exhibiting at CES as part of the ‘Swiss Pavilion’.

It was there that I met Tomas Sluka and Tomáš Kubeš, former CERN engineers and co-founders of CREAL3D. They motioned to one of the boxes, each of which had an eyepiece to peer into. I stepped up, looked inside, and after one quick test I was immediately impressed—not with what I saw, but how I saw it. But it’ll take me a minute to explain why.

CREAL3D is building a light-field display. As near as I can tell, it’s the closest thing to a real light-field that I’ve had a chance to see with my own eyes.

Light-fields are significant to AR and VR because they’re a genuine representation of how light exists in the real world, and how we perceive it. Unfortunately they’re difficult to capture or generate, and arguably even harder to display.

Every AR and VR headset on the market today uses some tricks to try to make our eyes interpret what we’re seeing as if it’s actually there in front of us. Most headsets are using basic stereoscopy and that’s about it—the 3D effect gives a sense of depth to what’s otherwise a scene projected onto a flat plane at a fixed focal length.

Such headsets support vergence (the movement of both eyes to fuse two images into one image with depth), but not accommodation (the dynamic focus of each individual eye). That means that while your eyes are constantly changing their vergence, the accommodation is stuck in one place. Normally these two eye functions work unconsciously in sync, hence the so-called ‘vergence-accommodation conflict’ when they don’t.
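To put rough numbers on that conflict, here is a minimal Python sketch. It assumes a 63 mm interpupillary distance and a headset whose optics focus at a fixed 2 m; both figures are illustrative assumptions rather than the specs of any particular headset, and the function names are made up for this example.

```python
import math

# Assumed values for illustration only (not taken from any specific headset).
ASSUMED_IPD_M = 0.063            # typical adult interpupillary distance
ASSUMED_FIXED_FOCUS_M = 2.0      # distance at which the headset optics focus


def vergence_angle_deg(distance_m, ipd_m=ASSUMED_IPD_M):
    """Angle between the two eyes' lines of sight when both fixate a point
    straight ahead at distance_m."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def accommodation_demand_diopters(distance_m):
    """Focus demand on a single eye, in diopters (1 / metres)."""
    return 1.0 / distance_m


def va_conflict_diopters(virtual_distance_m, focal_distance_m=ASSUMED_FIXED_FOCUS_M):
    """Mismatch between where the eyes converge (the virtual object) and
    where they must focus (the display's fixed focal distance)."""
    return abs(accommodation_demand_diopters(virtual_distance_m)
               - accommodation_demand_diopters(focal_distance_m))


if __name__ == "__main__":
    for d in (0.25, 0.5, 1.0, 2.0, 10.0):
        print(f"object at {d:5.2f} m: vergence {vergence_angle_deg(d):5.2f} deg, "
              f"conflict {va_conflict_diopters(d):4.2f} D")
```

Under these assumptions, a virtual object rendered half a meter away asks the eyes to converge as if it were at 0.5 m while they still focus at 2 m, a mismatch of 1.5 diopters. An object rendered at the display’s focal distance produces no conflict at all.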

More simply put, almost all headsets on the market today are displaying imagery that’s an imperfect representation of how we see the real world.

On more advanced headsets, ‘varifocal’ approaches dynamically shift the focal length based on where you’re looking (with eye-tracking). Magic Leap, for instance, supports two focal lengths and jumps between them as needed. Oculus’ Half Dome prototype is also varifocal and, from what we know so far, seems to support a wide, continuous range of focal lengths. Even so, these varifocal approaches still have some inherent issues that arise because they aren’t actually displaying light-fields.

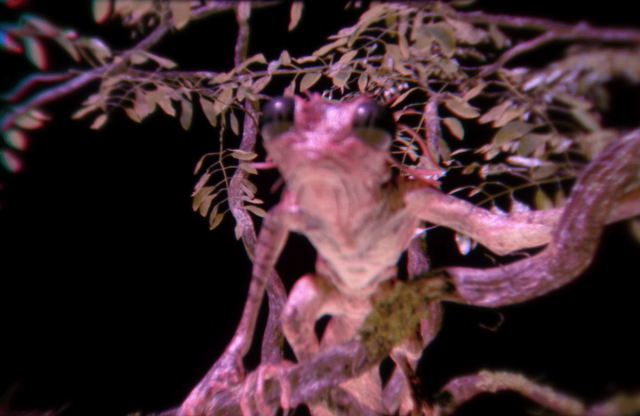

So, back to the quick test I did when I looked through the CREAL3D lens: inside I saw a little frog on a branch very close to my eye, and behind it was a tree. After looking at the frog, I focused on the tree which came into sharp focus while the frog became blurry. Then I looked back at the frog and saw a beautiful, natural blur blossom over the tree.

Above is raw, through-the-lens footage of the CREAL3D light-field display in which you can see the camera focusing on different parts of the image. (CREAL3D credits the 3D asset to Daniel Bystedt.)

Why is this impressive? Well, I knew they weren’t using eye-tracking, so I knew what I was seeing wasn’t a typical varifocal system. And I was looking through a single lens, so I knew what I was seeing wasn’t mere vergence. This was accommodation at work (the dynamic focus of each individual eye).

The only explanation for being able to properly accommodate between two objects with a single eye (and without eye-tracking) is that I was looking at a real light-field—or at least something very close to one.

That beautiful blur I saw was the part of the scene my eye wasn’t focused on; the eye can only bring one plane into focus at a time. You can see the same thing right now: close one eye, hold a finger up a few inches from your eye and focus on it. Now focus on something far behind your finger and watch as your finger becomes blurry.

This happens because the light from your finger and the light from the more distant objects are entering your eye at different angles. When I looked into CREAL3D’s display, I saw the same thing, for the same reason, except I was looking at a computer-generated image.
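The size of that blur can be estimated with a standard small-angle approximation from optics: the angular diameter of the blur is roughly the pupil diameter multiplied by the difference, in diopters, between where the eye is focused and where the object sits. The sketch below assumes a 4 mm pupil and treats ‘a few inches’ as 0.1 m. Those numbers and the function name are illustrative assumptions, and this says nothing about how CREAL3D’s display produces its blur.

```python
import math

ASSUMED_PUPIL_DIAMETER_M = 0.004  # assumed 4 mm pupil, a typical indoor value


def defocus_blur_deg(object_distance_m, focus_distance_m,
                     pupil_diameter_m=ASSUMED_PUPIL_DIAMETER_M):
    """Approximate angular diameter (degrees) of the blur for an object at
    object_distance_m while the eye is focused at focus_distance_m.

    Small-angle approximation:
        blur [rad] ~= pupil diameter [m] * |1/object - 1/focus| [diopters]
    """
    defocus_diopters = abs(1.0 / object_distance_m - 1.0 / focus_distance_m)
    return math.degrees(pupil_diameter_m * defocus_diopters)


if __name__ == "__main__":
    # Finger held about 0.1 m away while the eye focuses on something 3 m away.
    print(f"finger blur: {defocus_blur_deg(0.1, 3.0):.2f} degrees")
    # An object at the focus distance is not blurred at all.
    print(f"in-focus blur: {defocus_blur_deg(3.0, 3.0):.2f} degrees")
```

With these assumed values the finger’s blur comes out at a little over two degrees of visual angle, which is why it looks so obviously soft next to a sharply focused background.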

A little experiment with the display really drove this point home. Holding my smartphone up to the lens, I could tap on the frog and my camera would bring it into focus. I could also tap the tree and the focus would switch to the tree while the frog became blurry. As far as my smartphone’s camera was concerned… these were ‘real’ objects at ‘real’ focal depths.

That’s the long way of saying (sorry, light-fields can be confusing) that light-fields are the ideal way to display virtual or augmented imagery, because they inherently support all of the ‘features’ of natural human vision. And it appears that CREAL3D’s display does much the same.

But these are huge boxes sitting on a desk. Could this tech even fit into a headset? And how does it work anyway? Founders Sluka and Kubeš weren’t willing to offer much detail on their approach, but I learned as much as I could about the capabilities (and limitations) of the system.

The ‘how’ part is the least clear at this point. Sluka would only tell me that they’re using a projector, modulating the light in some way, and that the image is not a hologram, nor are they using a microlens array. The company believes this to be a novel approach, and that their synthetic light-field is closer to an analog light-field than any other they’re aware of.

Sluka tells me that the system supports “hundreds of depth-planes from zero to infinity,” with a logarithmic distribution (a higher density of planes closer to the eye, and a lower density farther away). He said that it’s also possible to achieve a depth-plane ‘behind’ the eye, meaning that the system can compensate for a user’s eyeglass prescription.
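Sluka didn’t say how those planes are actually placed, so the following is only one plausible reading of a “logarithmic distribution”: planes spaced evenly in the logarithm of distance, so that each plane is a constant factor farther away than the last. The near and far limits (0.1 m and 100 m), the count of 200, and the function name are all assumptions chosen for illustration; a literal ‘zero to infinity’ range can’t be log-spaced, so finite limits stand in for it here.

```python
def log_spaced_depth_planes(near_m, far_m, count):
    """Return `count` distances (metres) from near_m to far_m, each a constant
    factor farther than the previous one, so planes are densest near the eye."""
    if count < 2:
        raise ValueError("count must be at least 2")
    if not 0 < near_m < far_m:
        raise ValueError("require 0 < near_m < far_m")
    ratio = (far_m / near_m) ** (1.0 / (count - 1))
    return [near_m * ratio ** i for i in range(count)]


if __name__ == "__main__":
    # Hypothetical configuration: 200 planes between 10 cm and 100 m.
    planes = log_spaced_depth_planes(0.1, 100.0, 200)
    within_one_metre = sum(1 for d in planes if d <= 1.0)
    print(f"{within_one_metre} of {len(planes)} planes lie within 1 m of the eye")
    print(f"gap after nearest plane:   {planes[1] - planes[0]:.4f} m")
    print(f"gap before farthest plane: {planes[-1] - planes[-2]:.4f} m")
```

The point of a layout like this is that the eye is far more sensitive to depth differences up close than far away, so spending most of the planes near the viewer puts the resolution where it is noticed.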

The pair also told me that they believe the tech can be readily shrunk to fit into AR and VR headsets, and that the bulky devices shown at CES were just a proof of concept. The company expects that they could have their light-field displays ready for VR headsets this year, and shrunk all the way down to glasses-sized AR headsets by 2021.

As for limitations, the display currently only supports 200 levels per color (RGB). Increasing the field of view and the eyebox will also be a challenge because of the need to expand the scope of the light-field, though the team expects they can achieve a 100 degree field of view for VR headsets and a 60–90 degree field of view for AR headsets. I suspect that generating synthetic light-fields in real-time at high framerates will also be a computational challenge, though Sluka didn’t go into detail about the rendering process.
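For a sense of what 200 levels per color means, the arithmetic below compares it with the 256 levels per channel of a common 8-bit display. The comparison target is my own choice for illustration, and the function names are made up for this example.

```python
import math


def total_colors(levels_per_channel, channels=3):
    """Number of distinct colors when each channel has the given level count."""
    return levels_per_channel ** channels


def effective_bits(levels_per_channel):
    """Equivalent bit depth per channel for a given number of levels."""
    return math.log2(levels_per_channel)


if __name__ == "__main__":
    for levels in (200, 256):
        print(f"{levels} levels/channel: {total_colors(levels):,} colors, "
              f"{effective_bits(levels):.2f} bits per channel")
```

That works out to 8,000,000 colors at 200 levels per channel versus 16,777,216 at 256, or a bit under 8 bits per channel.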

It’s exciting, but early for CREAL3D. The company is a young startup with 10 members so far, and there’s still much to prove in terms of feasibility, performance, and scalability of the company’s approach to light-field displays.

Sluka holds a PhD in Science Engineering from the Technical University of Liberec in the Czech Republic. He says he’s a multidisciplinary engineer, and he has the published works to prove it. The CREAL3D team counts a handful of other PhDs among its ranks, including several from Intel’s shuttered Vaunt project.

Sluka told me that the company has raised around $1 million in the last year, and is in the process of raising a $5 million round to further growth and development.