Lytro’s Immerge light-field camera is meant for professional high-end VR productions. It may be a beast of a rig, but it’s capable of capturing some of the best-looking volumetric video that I’ve laid eyes on yet. The company has revealed a major update to the camera, the Immerge 2.0, which, through a few smart tweaks, makes for much more efficient production and higher-quality output.

Light-field specialist Lytro, which picked up a $60 million Series D investment earlier this year, is making impressive strides in its light-field capture and playback technology. The company is approaching light-fields from both the live-action and synthetic ends; last month Lytro announced Volume Tracer, software that generates light-fields from pre-rendered CG content, enabling ultra-high fidelity VR imagery that retains immersive 6DOF viewing.

Immerge 2.0

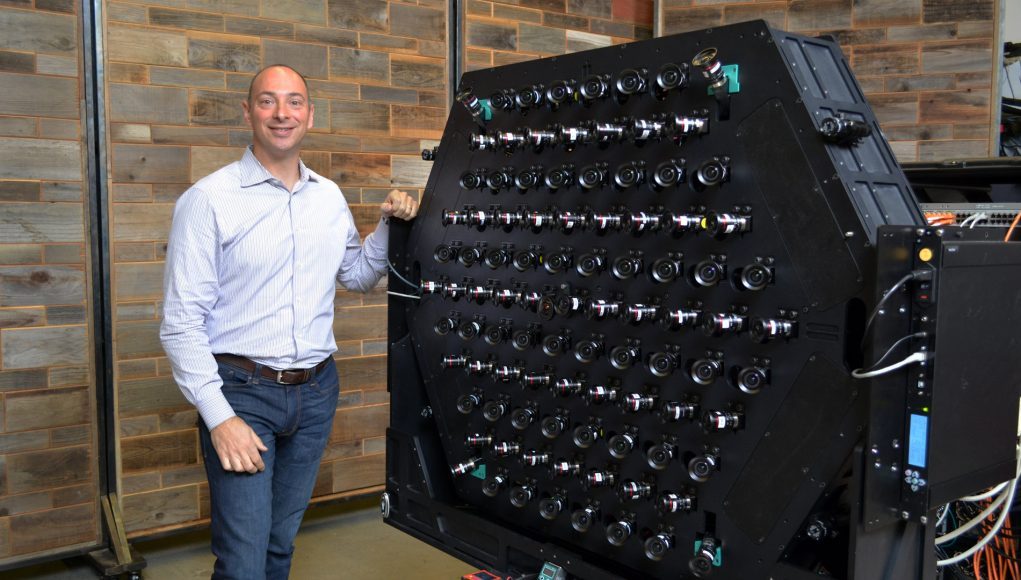

On the live-action end, the company has been building a light-field camera called Immerge. Designed for high-end productions, the camera is actually a huge array of individual lenses that all work in unison to capture light-fields of the real world.

During a recent visit to the company’s Silicon Valley office, Lytro exclusively revealed to Road to VR the latest iteration of the camera, which it’s calling Immerge 2.0. The form factor is largely the same as before (an array of lenses all working together to capture the scene from many simultaneous viewpoints), but you’ll note an important difference if you look closely: the Immerge 2.0 has alternating rows of cameras pointed off-axis in opposite directions.

With the change to the camera angles, and tweaks to the underlying software, the lenses on Immerge 2.0 effectively act as one giant camera that has a wider field of view than any of the individual lenses, now 120 degrees (compared to 90 degrees on the Immerge 1.0).

In practice, this can make a big difference to the camera’s bottom line: a wider field of view allows the camera to capture more of the scene at once, which means fewer rotations of the camera are needed to capture a complete 360-degree shot (now as few as three spins, instead of five), and it provides larger areas for actors to perform. A new automatic calibration process further speeds things up.
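As a rough illustration of that arithmetic, the short Python sketch below estimates how many camera positions are needed to cover a full circle for a given field of view. The function name `min_rotations` and the `overlap_deg` stitching margin are assumptions made for this example; they are not figures or tools from Lytro.

```python
import math


def min_rotations(fov_deg: float, overlap_deg: float = 0.0) -> int:
    """Fewest camera positions needed to cover a full 360 degrees.

    overlap_deg is a hypothetical margin shared between neighbouring
    wedges (for example, for stitching); it is not a Lytro figure.
    """
    effective = fov_deg - overlap_deg
    if effective <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    return math.ceil(360.0 / effective)


print(min_rotations(120))                 # Immerge 2.0 field of view, no overlap
print(min_rotations(90))                  # Immerge 1.0 field of view, no overlap
print(min_rotations(90, overlap_deg=10))  # Immerge 1.0 with an assumed 10-degree margin
```

With no overlap, a 120-degree wedge covers the circle in three positions, which lines up with the "as few as three" figure. A 90-degree wedge would need at least four, so the five spins quoted for the first Immerge would be consistent with some overlap between wedges, though Lytro hasn't said how much it actually uses.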

All of this means increased production efficiency, faster iteration time, and more creative flexibility: all the right notes to hit if the goal is to one day make live-action light-field capture easy enough to achieve widespread traction in professional VR content production.

Ever Increasing Quality

Lytro has also been refining its software stack, which allows the company to pull increasingly high-quality imagery from the light-field data. I saw a remastered version of the Hallelujah experience that I had seen earlier this year, this time outputting 5K per-eye (up from 3.5K) and employing a new anti-aliasing-like technique. Looking at the old and new versions side-by-side revealed a much cleaner outline around the main character, sharper textures, and greater stereoscopy in distant details that were previously muddled (especially in thin objects like ropes and bars).

What’s more, Lytro says it’s ready to bump the quality up to 10K per-eye, but is waiting for headsets that can take advantage of such pixel density. One interesting aspect of all of this is that many of the quality-enhancing changes that Lytro has made to its software can be applied to light-field data captured prior to the changes, which suggests a certain amount of future-proofing for the company’s light-field captures.

– – — – –

Lytro appears to be making steady progress on both live-action and synthetic light-field capture and playback technologies, but one thing that’s continuously irked those following the company’s story is that none of its light-field content has been available to the public, at least not in a proper volumetric video format. The company promises that this will soon be remedied, and has teased that a new piece of content is in the works and slated for a public Q1 release across all classes of immersive headsets. With a bit of luck, it shouldn’t be too much longer until you can check out what the Immerge 2.0 camera can do through your own VR headset.