Researchers at Meta Reality Labs have created a prototype VR headset with a custom-built accelerator chip designed to handle the AI processing needed to render the company’s photorealistic Codec Avatars on a standalone headset.

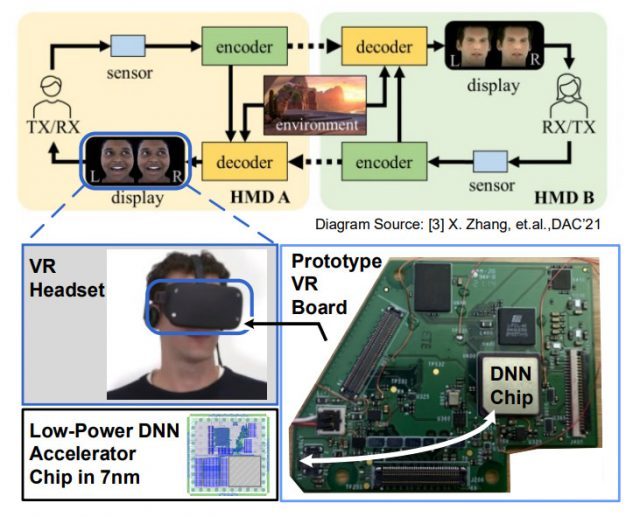

Since long before the company changed its name, Meta has been working on its Codec Avatars project, which aims to make nearly photorealistic avatars in VR a reality. Using a combination of on-device sensors (like eye-tracking and mouth-tracking) and AI processing, the system animates a detailed recreation of the user in a realistic way, in real-time.

Or at least that’s how it works when you’ve got high-end PC hardware.

Early versions of the company’s Codec Avatars research were backed by the power of an NVIDIA Titan X GPU, which dwarfs the power available in something like Meta’s latest Quest 2 headset.

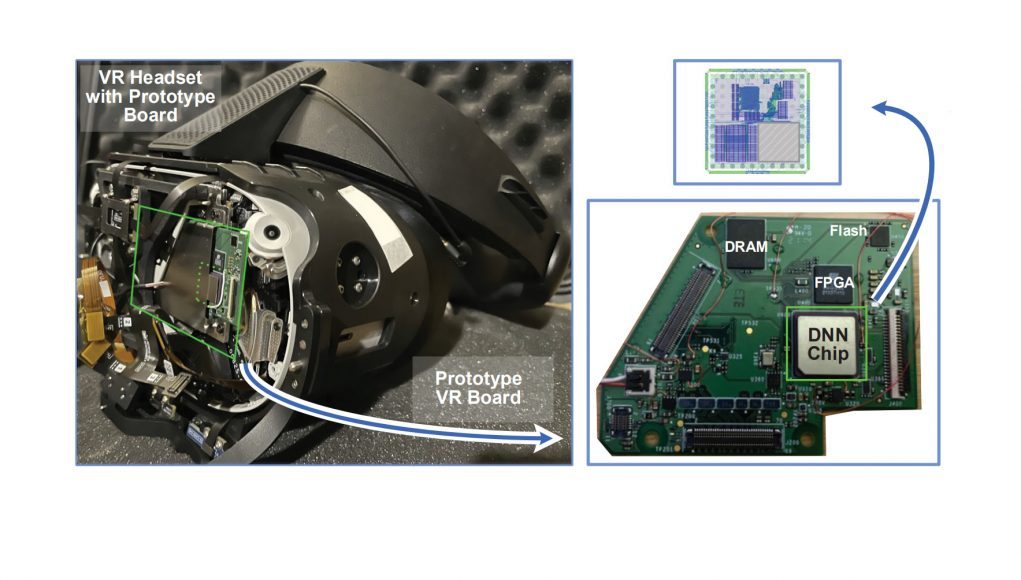

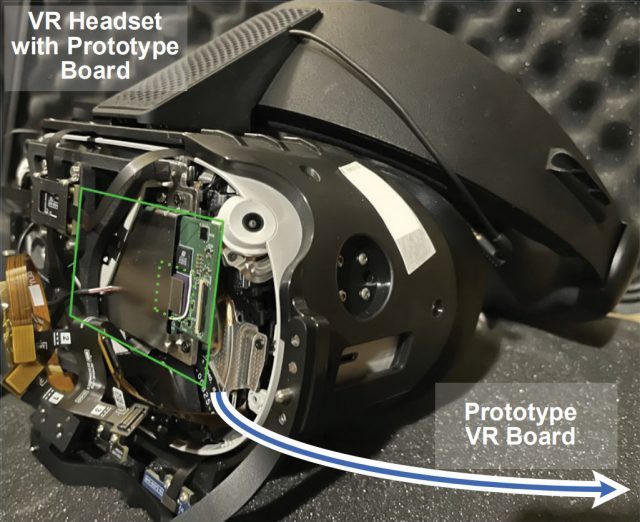

But the company has moved on to figuring out how to make Codec Avatars possible on low-powered standalone headsets, as evidenced by a paper published alongside last month’s 2022 IEEE CICC conference. In the paper, Meta reveals it created a custom chip built with a 7nm process to function as an accelerator specifically for Codec Avatars.

Specially Made

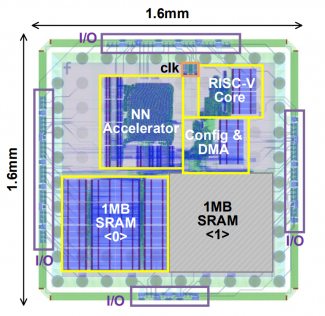

According to the researchers, the chip is far from off the shelf. The group designed it with an essential part of the Codec Avatars processing pipeline in mind—specifically, analyzing the incoming eye-tracking images and generating the data needed for the Codec Avatars model. The chip’s footprint is a mere 1.6mm².

“The test-chip, fabricated in 7nm technology node, features a Neural Network (NN) accelerator consisting of a 1024 Multiply-Accumulate (MAC) array, 2MB on-chip SRAM, and a 32bit RISC-V CPU,” the researchers write.

In turn, they also rebuilt part of the Codec Avatars AI model to take advantage of the chip’s specific architecture.

“By re-architecting the Convolutional [neural network] based eye gaze extraction model and tailoring it for the hardware, the entire model fits on the chip to mitigate system-level energy and latency cost of off-chip memory accesses,” the Reality Labs researchers write. “By efficiently accelerating the convolution operation at the circuit-level, the presented prototype [chip] achieves 30 frames per second performance with low-power consumption at low form factors.”
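To get a feel for what "the entire model fits on the chip" implies, here is a rough back-of-the-envelope sketch. Only the 2MB SRAM, the 1024-MAC array, and the 30 frames per second figure come from the paper quotes; the layer shapes, the 8-bit weights, and the assumption of perfect MAC utilization are hypothetical and chosen purely for illustration. It also ignores the activations, which would need to share the same SRAM.

```python
from dataclasses import dataclass

SRAM_BYTES = 2 * 1024 * 1024  # 2MB on-chip SRAM (from the paper quote)
MAC_UNITS = 1024              # size of the MAC array (from the paper quote)
TARGET_FPS = 30               # frame rate reported in the paper quote


@dataclass
class ConvLayer:
    """One convolution layer; all shapes used below are hypothetical."""
    in_ch: int
    out_ch: int
    kernel: int
    out_h: int
    out_w: int

    def weights(self) -> int:
        # Number of weights in the layer (biases ignored).
        return self.in_ch * self.out_ch * self.kernel * self.kernel

    def macs(self) -> int:
        # Every output position applies every weight once.
        return self.weights() * self.out_h * self.out_w


# Hypothetical eye-gaze CNN, NOT the architecture from the paper.
LAYERS = [
    ConvLayer(1, 16, 3, 64, 64),
    ConvLayer(16, 32, 3, 32, 32),
    ConvLayer(32, 64, 3, 16, 16),
    ConvLayer(64, 64, 3, 8, 8),
]


def weight_bytes(layers, bits_per_weight=8):
    """Storage needed for the weights alone, rounded up to whole bytes."""
    total_bits = sum(layer.weights() for layer in layers) * bits_per_weight
    return (total_bits + 7) // 8


def fits_on_chip(layers, bits_per_weight=8, sram_bytes=SRAM_BYTES):
    """True if the weights alone fit in SRAM (activations not counted)."""
    return weight_bytes(layers, bits_per_weight) <= sram_bytes


def min_clock_hz(layers, fps=TARGET_FPS, mac_units=MAC_UNITS):
    """Lowest clock that hits the frame rate if every MAC unit is busy every cycle."""
    macs_per_frame = sum(layer.macs() for layer in layers)
    return macs_per_frame * fps / mac_units


if __name__ == "__main__":
    print("weight bytes:", weight_bytes(LAYERS))
    print("fits in SRAM:", fits_on_chip(LAYERS))
    print("min clock (Hz):", min_clock_hz(LAYERS))
```

The point of the exercise is the shape of the trade-off rather than the specific numbers: a model small enough to live entirely in on-chip memory never has to pay the energy and latency cost of going off-chip, which is the benefit the researchers describe.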

By accelerating an intensive part of the Codec Avatars workload, the chip not only speeds up the process but also reduces the power consumed and the heat generated. It’s able to do this more efficiently than a general-purpose CPU thanks to the chip’s custom design, which in turn informed the rearchitected software design of the eye-tracking component of Codec Avatars.

One Part of a Pipeline

But the headset’s general-purpose CPU (in this case, Quest 2’s Snapdragon XR2 chip) doesn’t get to take the day off. While the custom chip handles part of the Codec Avatars encoding process, the XR2 manages the decoding process and renders the actual visuals of the avatar.
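The division of labor can be pictured with a toy sketch like the one below. Everything in it is a stand-in: the function names, the `GazeCode` type, and the block-averaging "encoder" are hypothetical placeholders, not Meta's model or API. The assumption being illustrated is simply that the accelerator turns eye-tracking images into a small piece of data, and only that data is handed to the general-purpose chip for decoding and rendering.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class GazeCode:
    """Hypothetical compact output of the encoding stage."""
    values: List[float]


def encode_on_accelerator(eye_image: Sequence[Sequence[float]],
                          code_size: int = 4) -> GazeCode:
    """Placeholder for the on-chip CNN: average pixel blocks into a short vector.

    Pixels left over when the image size is not a multiple of code_size
    are dropped, which is fine for a toy example.
    """
    flat = [pixel for row in eye_image for pixel in row]
    if len(flat) < code_size:
        raise ValueError("image has fewer pixels than code_size")
    chunk = len(flat) // code_size
    values = [sum(flat[i * chunk:(i + 1) * chunk]) / chunk
              for i in range(code_size)]
    return GazeCode(values)


def decode_and_render_on_soc(code: GazeCode) -> dict:
    """Placeholder for decoding and rendering on the general-purpose chip."""
    return {"stage": "rendered", "inputs_used": len(code.values)}


def run_frame(eye_image: Sequence[Sequence[float]]) -> dict:
    code = encode_on_accelerator(eye_image)   # would run on the custom chip
    return decode_and_render_on_soc(code)     # would run on the headset's SoC


if __name__ == "__main__":
    image = [[1.0] * 8 for _ in range(8)]     # dummy 8x8 eye-tracking image
    print(run_frame(image))
```

In a split like this, the heavy image analysis stays next to the sensor, and the main processor only ever sees a handful of numbers per frame.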

The work must have been quite multidisciplinary, as the paper credits 12 researchers, all from Meta’s Reality Labs: H. Ekin Sumbul, Tony F. Wu, Yuecheng Li, Syed Shakib Sarwar, William Koven, Eli Murphy-Trotzky, Xingxing Cai, Elnaz Ansari, Daniel H. Morris, Huichu Liu, Doyun Kim, and Edith Beigne.

It’s impressive that Meta’s Codec Avatars can run on a standalone headset, even if a specialty chip is required. But one thing we don’t know is how well the visual rendering of the avatars is handled. The underlying scans of the users are highly detailed and may be too complex to render on Quest 2 in full. It’s not clear how much the ‘photorealistic’ part of the Codec Avatars is preserved in this instance, even if all the underlying pieces are there to drive the animations.

– – — – –

The research represents a practical application of the new compute architecture that Reality Labs’ Chief Scientist, Michael Abrash, recently described as a necessary next step for making the sci-fi vision of XR a reality. He says that moving away from highly centralized processing to more distributed processing is critical for the power and performance demands of such headsets.

One can imagine a range of XR-specific functions that could benefit from chips specially designed to accelerate them. Spatial audio, for instance, is desirable in XR across the board for added immersion, but realistic sound simulation is computationally expensive (not to mention power hungry!). Positional-tracking and hand-tracking are a critical part of any XR experience—yet another place where designing the hardware and algorithms together could yield substantial benefits in speed and power.
