Researchers from Meta Reality Labs, Princeton University, and others have published a new paper detailing a method for achieving ultra-wide field-of-view holographic displays with retina resolution. The method drastically reduces the display resolution that would otherwise be needed to reach those specifications, potentially offering a shortcut to bringing holographic displays to XR headsets. Holographic displays are especially desirable in XR because they can display light fields, a more accurate representation of the light we see in the real world.

Reality Labs Research, Meta’s R&D group for XR and AI, has spent considerable time and effort exploring the applications of holography in XR headsets.

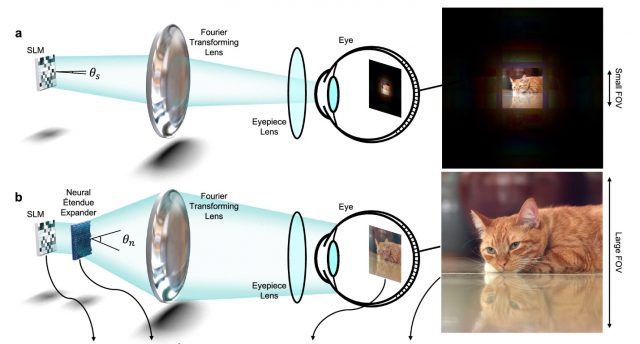

Among the many obstacles to making holographic displays viable in an XR headset is étendue, a measure of how widely light can be spread in a holographic system (roughly, the display's size multiplied by the range of angles it can send light into). Low étendue means a narrow field-of-view, and in this kind of system the only ways to increase étendue are to make the display larger or to accept lower image quality, neither of which is desirable in an XR headset.
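To make that trade-off concrete, here is a minimal sketch using the textbook paraxial model of a pixelated spatial light modulator (an assumption on our part; the paper's own optical model is more detailed). The wavelength, pixel pitches and the 7,680-pixel width are illustrative values, not figures from the paper. The maximum diffraction angle is set by the pixel pitch, and per-axis étendue (aperture width × angular spread) simplifies to pixel count × wavelength.

```python
import math

def max_half_angle_deg(wavelength_m, pitch_m):
    # The finest grating a pixelated SLM can display has a period of two pixels,
    # so by the grating equation sin(theta_max) = wavelength / (2 * pitch).
    return math.degrees(math.asin(wavelength_m / (2 * pitch_m)))

def etendue_per_axis(num_pixels, wavelength_m, pitch_m):
    # Paraxial 1D étendue: aperture width times the spread in sin(theta).
    width = num_pixels * pitch_m
    sine_spread = wavelength_m / pitch_m   # from -sin(theta_max) to +sin(theta_max)
    return width * sine_spread             # simplifies to num_pixels * wavelength

green = 532e-9                             # illustrative wavelength (assumption)
for pitch in (8e-6, 4e-6):                 # illustrative pixel pitches (assumption)
    fov = 2 * max_half_angle_deg(green, pitch)
    g = etendue_per_axis(7680, green, pitch)
    print(f"pitch {pitch * 1e6:.0f} um: full angle {fov:.2f} deg, etendue {g:.3e} m")
```

Halving the pixel pitch roughly doubles the diffraction angle but also halves the display's width, so the étendue stays the same; under this model only more pixels buy more étendue. At these illustrative pitches the full diffraction angle is only a few degrees.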

Researchers from Reality Labs Research, Princeton University, and King Abdullah University of Science & Technology published a new paper in the peer-reviewed journal *Nature Communications* titled *Neural étendue expander for ultra-wide-angle high-fidelity holographic display*.

The paper introduces a method to expand the étendue of a holographic display by up to 64 times. Doing so, the researchers say, creates a shortcut to an ultra-wide field-of-view holographic display that also achieves a retina resolution of 60 pixels per degree.

Spatial light modulators (SLMs) with higher resolution than any that exist today will still be needed, but the method cuts the required SLM resolution from billions of pixels to just tens of millions, the researchers say.

Given a theoretical SLM with a resolution of 7,680 × 4,320, the researchers say simulations of their étendue expansion method show it could achieve a display with a 126° horizontal field-of-view and a resolution of 60 pixels per degree (truly “retina resolution”) under ideal conditions.
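As a simple back-of-envelope check on these figures (our own arithmetic, not a calculation taken from the paper), a field of view multiplied by an angular resolution gives the number of distinct samples needed across it. How the paper maps SLM pixels to perceived resolution is not described here, so treat this only as an order-of-magnitude comparison.

```python
def angular_samples(fov_deg, pixels_per_degree):
    """Distinct samples needed across a field of view at a given angular resolution."""
    return round(fov_deg * pixels_per_degree)

horizontal = angular_samples(126, 60)   # 7,560
slm_width = 7680                        # horizontal pixels of the theoretical SLM
print(horizontal, horizontal <= slm_width)
```

The 7,560 samples needed horizontally are on the same order as the SLM's 7,680 horizontal pixels.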

No such SLM exists today, but creating a comparable display without étendue expansion would require an SLM with a resolution of 61,440 × 34,560, far beyond any current or near-future manufacturing capability.
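The two resolutions quoted above differ by a factor of 8 along each axis, which works out to 64× in total pixel count. That is consistent with the “up to 64 times” étendue expansion and with the drop from billions of pixels to tens of millions. The short calculation below just does that arithmetic; the variable names are illustrative.

```python
def pixel_count(width, height):
    return width * height

with_expander = pixel_count(7680, 4320)       # 33,177,600 pixels (tens of millions)
without_expander = pixel_count(61440, 34560)  # 2,123,366,400 pixels (billions)
print(f"{with_expander:,} vs {without_expander:,}")
print("per-axis factor:", 61440 // 7680, 34560 // 4320)         # 8 8
print("pixel-count factor:", without_expander // with_expander)  # 64
```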

Étendue expansion itself isn’t new, but the researchers say that existing methods expand étendue at significant cost to image quality, creating an inverse relationship between field-of-view and image quality.
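One way to see what an étendue expander does is a toy far-field model, sketched below under stated assumptions: single-wavelength light, Fourier (FFT) propagation, and a random phase mask standing in for a “naive” expander. The sizes `n` and `s` are hypothetical, and this is not the researchers' simulation. Each SLM pixel covers an `s × s` patch of finer expander cells. On its own, the blocky SLM pattern keeps most of its light within its own narrow angular range (the central `n × n` frequencies). Adding the random mask spreads the light over roughly `s × s` times the angular area.

```python
import numpy as np

def central_energy_fraction(phase, band):
    """Share of far-field energy that lands in the central band x band frequencies."""
    U = np.fft.fftshift(np.fft.fft2(np.exp(1j * phase), norm="ortho"))
    I = np.abs(U) ** 2
    c, h = phase.shape[0] // 2, band // 2
    return I[c - h:c + h, c - h:c + h].sum() / I.sum()

rng = np.random.default_rng(0)
n, s = 16, 8                                   # hypothetical: 16 x 16 SLM, 8 x 8 expander cells per SLM pixel
coarse = rng.uniform(0, 2 * np.pi, (n, n))     # arbitrary SLM phase pattern
slm = np.repeat(np.repeat(coarse, s, axis=0), s, axis=1)
expander = rng.uniform(0, 2 * np.pi, (n * s, n * s))   # random ("naive") expander

plain = central_energy_fraction(slm, n)               # most light stays in the SLM's own angular range
expanded = central_energy_fraction(slm + expander, n) # light spread over ~s*s times the angles
print(f"SLM alone: {plain:.2f}, with random expander: {expanded:.3f}")
```

With the random mask, light does reach the wider range of angles. However, the SLM still has only `n × n` controllable pixels to shape an `(n·s) × (n·s)` output, which offers one intuition for why naive expansion costs image quality.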

“The étendue expanded holograms produced with [our method] are the only holograms that showcase both ultra-wide-FOV and high-fidelity,” the paper claims.

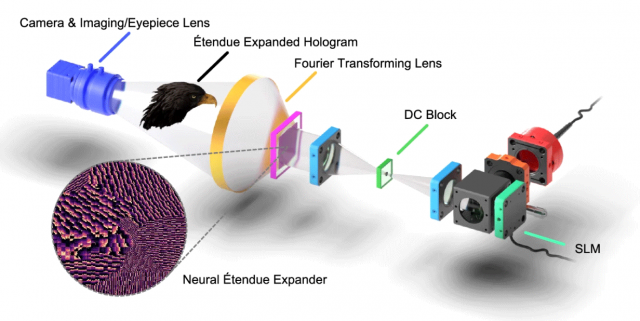

The researchers call their approach “neural étendue expansion.” Unlike existing naive methods, which don’t take into account what is being displayed, it is a ‘smart’ way of expanding étendue.

“Neural étendue expanders are learned from a natural image dataset and are jointly optimized with the SLM’s wavefront modulation. Akin to a shallow neural network, this new breed of optical elements allows us to tailor the wavefront modulation element to the display of natural images and maximize display quality perceivable by the human eye,” the paper explains.
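To illustrate what “jointly optimized” can mean, here is a toy sketch built on heavy simplifying assumptions: a single wavelength, far-field (Fourier) propagation via FFT, a plain intensity loss instead of a perceptual one, and random arrays standing in for the natural-image dataset. It is not the authors' code or model, and all names and sizes are hypothetical. A shared expander phase `psi` (with `s × s` finer cells per SLM pixel) plays the role of the network weights learned across the dataset. Each image gets its own SLM phase `phi`. Both are updated with hand-derived gradients and the Adam optimizer.

```python
import numpy as np

def upsample(phi, s):
    """Nearest-neighbour upsampling: each coarse SLM pixel covers s x s expander cells."""
    return np.repeat(np.repeat(phi, s, axis=-2), s, axis=-1)

def block_sum(x, s):
    """Adjoint of upsample: sum each s x s patch back onto its SLM pixel."""
    b, h, w = x.shape
    return x.reshape(b, h // s, s, w // s, s).sum(axis=(2, 4))

def loss_and_grads(phi, psi, targets, s):
    """phi: (B, n, n) per-image SLM phases; psi: (n*s, n*s) shared expander phase;
    targets: (B, n*s, n*s) intensities, each normalised to sum to (n*s)**2."""
    u = np.exp(1j * (upsample(phi, s) + psi))   # unit-amplitude field after the expander
    U = np.fft.fft2(u, norm="ortho")             # far-field (Fourier) propagation
    I = np.abs(U) ** 2
    m = targets.size
    loss = np.sum((I - targets) ** 2) / m
    dU = 2.0 * (I - targets) * U / m             # dL/d(conj U)
    du = np.fft.ifft2(dU, norm="ortho")          # adjoint of the unitary FFT
    g = 2.0 * np.real(np.conj(du) * 1j * u)      # chain rule through exp(i * phase)
    return loss, block_sum(g, s), g.sum(axis=0)  # per-image SLM grads, shared expander grad

def adam(p, g, state, lr=0.05, b1=0.9, b2=0.999, eps=1e-8):
    m, v, t = state
    t += 1
    m = b1 * m + (1 - b1) * g
    v = b2 * v + (1 - b2) * g * g
    step = lr * (m / (1 - b1 ** t)) / (np.sqrt(v / (1 - b2 ** t)) + eps)
    return p - step, (m, v, t)

def train(targets, s, steps=300, seed=0):
    rng = np.random.default_rng(seed)
    b, N, _ = targets.shape
    phi = rng.uniform(0, 2 * np.pi, (b, N // s, N // s))
    psi = rng.uniform(0, 2 * np.pi, (N, N))      # starts as a random ("naive") expander
    st_phi = (np.zeros_like(phi), np.zeros_like(phi), 0)
    st_psi = (np.zeros_like(psi), np.zeros_like(psi), 0)
    history = []
    for _ in range(steps):
        loss, g_phi, g_psi = loss_and_grads(phi, psi, targets, s)
        history.append(loss)
        phi, st_phi = adam(phi, g_phi, st_phi)   # each image's SLM pattern
        psi, st_psi = adam(psi, g_psi, st_psi)   # expander shared by all images
    return phi, psi, history

rng = np.random.default_rng(1)
N, s = 32, 4
targets = rng.random((4, N, N))                  # stand-in for a natural-image dataset
targets *= N * N / targets.sum(axis=(1, 2), keepdims=True)
phi, psi, history = train(targets, s)
print(f"loss: {history[0]:.3f} -> {history[-1]:.3f}")
```

In this toy model, `s = 4` expands the angular area 16×. An `s` of 8 per axis would correspond to a 64× expansion. After training, `psi` would be fixed in hardware, and only `phi` would be recomputed for each new image.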

The paper’s authors are Ethan Tseng, Grace Kuo, Seung-Hwan Baek, Nathan Matsuda, Andrew Maimone, Florian Schiffers, Praneeth Chakravarthula, Qiang Fu, Wolfgang Heidrich, Douglas Lanman and Felix Heide. They conclude the paper by saying they believe the method is more than a research step and could one day be put to practical use.

“[…] neural étendue expanders support multi-wavelength illumination for color holograms. The expanders also support 3D color holography and viewer pupil movement. We envision that future holographic displays may incorporate the described optical design approach into their construction, especially for VR/AR displays.”

While the work is exciting, the researchers say there is still much to explore with the method.

“Extending our work to utilize other types of emerging optics such as metasurfaces may prove to be a promising direction for future work, as diffraction angles can be greatly enlarged by nano-scale metasurface features and additional properties of light such as polarization can be modulated using meta-optics.”