According to Snap’s CEO Evan Spiegel, the company behind Snapchat has reached a “crucible moment” as it heads into 2026, one he says rests on the growth and performance of Spectacles, the company’s AR glasses, as well as AI, advertising and direct revenue streams.

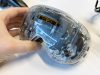

Snap announced in June it was working on the next iteration of its Spectacles AR glasses (aka ‘Specs’), which are expected to release to consumers sometime next year. Snap hasn’t revealed them yet, although the company says the new Specs will be smaller and lighter, feature see-through AR optics and include a built-in AI assistant.

Following the release of the fifth gen in 2024 to developers, next year will be “the most consequential year yet” in Snap’s 14-year history, Spiegel says, putting its forthcoming generation of Specs in the spotlight.

“After starting the year with considerable momentum, we stumbled in Q2, with ad revenue growth slowing to just 4% year-over-year,” Spiegel admits in his recent open letter. “Fortunately, the year isn’t over yet. We have an enormous opportunity to re-establish momentum and enter 2026 prepared for the most consequential year yet in the life of Snap Inc.”

Not only are Specs a key focus in the company’s growth, Spiegel thinks AR glasses, combined with AI, will drastically change the way people work, learn and play.

“The need for Specs has become urgent,” Spiegel says. “People spend over seven hours a day staring at screens. AI is transforming the way we work, shifting us from micromanaging files and apps to supervising agents. And the costs of manufacturing physical goods are skyrocketing.”

Those physical goods can be replaced with “photons, reducing waste while opening a vast new economy of digital goods,” Spiegel says, something the company hopes to tap into with Specs. And instead of replicating the smartphone experience in AR, Spiegel maintains the core of the device will rely on AI.

“Specs are not about cramming today’s phone apps into a pair of glasses. They represent a shift away from the app paradigm to an AI-first experience — personalized, contextual, and shared. Imagine pulling up last week’s document just by asking, streaming a movie on a giant, see-through, and private display that only you can see, or reviewing a 3D prototype at scale with your teammate standing next to you. Imagine your kids learning biology from a virtual cadaver, or your friends playing chess around a real table with a virtual board.”

As many of Snap’s competitors have done with their own devices, Spiegel characterizes Specs as “an enormous business opportunity,” noting that the AR device can replace multiple physical screens and that the operating system itself will be “personalized with context and memory,” which he says will compound in value over time.

Meanwhile, Snap competitors Meta, Google, Samsung, and Apple are jockeying for position as they develop their own XR devices—the umbrella term for everything from mixed reality headsets, like Meta Quest 3 or Apple Vision Pro, to smart glasses like Ray-Ban Meta or Google’s forthcoming Android XR glasses, to full-AR glasses, such as Meta’s Orion prototype, which notably hopes to deliver many of the same features promised by the sixth gen Specs.

And as the company enters 2026, Spiegel says Snap is looking to organize differently, calling for “startup energy at Snap scale” by setting up a sort of internal accelerator of five to seven teams composed of 10- to 15-person squads. “Weekly demo days, 90-day mission cycles, and a culture of fast failure will keep us moving,” he says.

It’s a bold strategy, especially as the company looks to straddle the expected ‘smartphone-to-AR’ computing paradigm shift, with Spiegel noting that “Specs are how we move beyond the limits of smartphones, beyond red-ocean competition, and into a once-in-a-generation transformation towards human-centered computing.”

You can read Snap CEO Evan Spiegel’s full open letter here, which includes more on AI and the company’s strategies for growth, engagement and ultimately how it’s seeking to generate more revenue.