Meta Connect 2023 has wrapped up, bringing with it a deluge of info from one of the XR industry’s biggest players. Here’s a look at the biggest announcements from Connect 2023, but more importantly, what it all means for the future of XR.

Last week marked the 10th annual Connect conference, and the first Connect conference after the Covid pandemic to have an in-person component. The event originally began as Oculus Connect in 2014. Having been around for every Connect conference, it’s amazing when I look around at just how much has changed and how quickly it all flew by. For those of you who have been reading and following along for just as long—I’m glad you’re still on this journey with us!

So here we are after 10 Connects. What were the big announcements and what does it all mean?

Meta Quest 3

Obviously, the single biggest announcement is the reveal and rapid release of Meta’s latest headset, Quest 3. You can check out the full announcement details and specs here and my hands-on preview with the headset here. The short and skinny is that Quest 3 is a big hardware improvement over Quest 2 (but still being held back by its software) and it will launch on October 10th starting at $500.

Quest 3 marks the complete dissolution of Oculus—the VR startup that Facebook bought back in 2014 to jump-start its entrance into XR. It’s the company’s first headset to launch following Facebook’s big rebrand to Meta, leaving behind no trace of the original and very well-regarded Oculus brand.

Apples and Oranges

On stage at Connect, Meta CEO Mark Zuckerberg called Quest 3 the “first mainstream mixed reality headset.” By “mainstream” I take it he meant ‘accessible to the mainstream’, given its price point. This was clearly in purposeful contrast to Apple’s upcoming Vision Pro which, to his point, is significantly less accessible given its $3,500 price tag. Though he didn’t mention Apple by name, his comments about accessibility, ‘no battery pack’, and ‘no tether’ were clearly aimed at Vision Pro.

Mixed Marketing

Meta is working hard to market Quest 3’s mixed reality capabilities, but for all the potential the feature has, there is no killer app for the technology. And yes, having the tech out there is critical to creating more opportunity for such a killer app to be created, but Meta is essentially treating its developers and customers as beta testers of this technology. It’s the same ‘market it and they will come’ approach that didn’t seem to pan out too well for Quest Pro.

Personally I worry about the newfangled feature being pushed so heavily by Meta that it will distract the body of VR developers who would otherwise better serve an existing customer base that’s largely starving for high-quality VR content.

Regardless of whether or not there’s a killer app for Quest 3’s improved mixed reality capabilities, there’s no doubt that the tech could be a major boon to the headset’s overall UX, which is in substantial need of a radical overhaul. I truly hope the company has mixed reality passthrough turned on as the default mode, so when people put on the headset they don’t feel immediately blind and disconnected from reality—or need to feel around to find their controllers. A gentle transition in and out of fully immersive experiences is a good idea, and one that’s well served with a high quality passthrough view.

Apple, on the other hand, has already established passthrough mixed reality as the default when putting on the headset, and for now even imagines it’s the mode users will spend most of their time in. Apple has baked this in from the ground up, but Meta still has a long way to go to perfect it in its headsets.

Augments vs. Volumes

Several Connect announcements also showed us how Meta is already responding to the threat of Apple’s XR headset, despite the vast price difference between the offerings.

For one, Meta announced ‘Augments’, which are applets developers will be able to build that users can place in permanently anchored positions in their home in mixed reality. For instance, you could place a virtual clock on your wall and always see it there, or a virtual chessboard on your coffee table.

This is of course very similar to Apple’s concept of ‘Volumes’, and while neither Apple nor Meta invented the idea of MR applets that live indefinitely in the space around you, it’s clear that the looming Vision Pro is forcing Meta to tighten its focus on this capability.

Meta says developers will be able to begin building ‘Augments’ on the Quest platform sometime next year, but it isn’t clear if that will happen before or after Apple launches Vision Pro.

Microgestures

Augments aren’t the only way that Meta showed at Connect that it’s responding to Apple. The company also announced that it’s working on a system for detecting ‘microgestures’ for hand-tracking input (planned for initial release to developers next year), which look awfully similar to the subtle pinching gestures that are primarily used to control Vision Pro.

Again, neither Apple nor Meta can take credit for inventing this ‘microgesture’ input modality. Just like Apple, Meta has been researching this stuff for years, but there’s no doubt the sudden urgency to get the tech into the hands of developers is related to what Apple is soon bringing to market.

A Leg Up for Developers

Meta’s legless avatars have been the butt of many a joke. The company had avoided the issue of showing anyone’s legs because they are very difficult to track with an inside-out headset like Quest, and doing a simple estimation can result in stilted and awkward leg movements.

But now the company is finally adding leg estimation to its avatar models, and giving developers access to the same tech to incorporate it into their games and apps.

And it looks like the company isn’t just succumbing to the pressure of the legless avatar memes by spitting out the same kind of third-party leg IK solutions that are being used in many existing VR titles. Meta is calling its solution ‘generative legs’, and says the system leans on tracking of the user’s upper body to estimate plausibly realistic leg movements. A demo at Connect shows things looking pretty good.

It remains to be seen how flexible the system is (for instance, how will it look if a player is bowling or skiing?).

Meta says the system can replicate common leg movements like “standing, walking, jumping, and more,” but also notes that there are limitations. Because the legs aren’t actually being tracked (just estimated) the generative legs model won’t be able to replicate one-off movements, like raising your knee toward your chest or twisting your feet at different angles.

Virtually You

The addition of legs coincides with another coming improvement to Meta’s avatar modeling, which the company is calling inside-out body tracking (IOBT).

While Meta’s headsets have always tracked the player’s head and hands using the headset and controllers, the rest of the upper body (arms, shoulders, neck) was entirely estimated, using mathematical modeling to figure out what position each part should be in.

For the first time on Meta’s headsets, IOBT will actually track parts of the player’s upper body, allowing the company’s avatar model to incorporate more of the player’s real movements, rather than making guesses.

Specifically, Meta says its new system can use the headset’s cameras to track wrist, elbow, shoulder, and torso positions, leading to more natural and accurate avatar poses. The IOBT capability can work with both controller tracking and controller-free hand-tracking.

Both capabilities will be rolled into Meta’s ‘Movement SDK’. The company says ‘generative legs’ will be coming to Quest 2, 3, and Pro, but the IOBT capability might end up being exclusive to Quest 3 (and maybe Pro) given the different camera placements that seem aimed toward making IOBT possible.

Calm Before the Storm, or Calmer Waters in General?

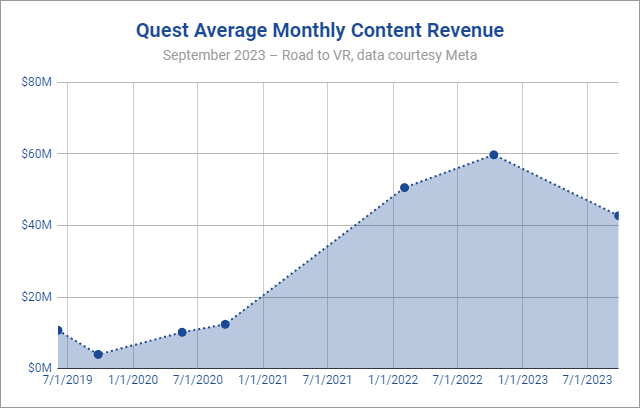

At Connect, Meta also shared the latest revenue milestone for the Quest store: more than $2 billion has been spent on games and apps. Assuming Meta’s standard 30% store cut, that means Meta has pocketed some $600 million from its store, while the remaining $1.4 billion has gone to developers.
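For anyone who wants to check the math, here is a minimal sketch of that split. The 30% platform fee is an assumption used for illustration (it is what makes the $600 million and $1.4 billion figures line up), and the function name `split_store_revenue` is made up for this example. The $2 billion input is treated as a floor, since Meta only said "more than".

```python
def split_store_revenue(gross_usd: int, platform_cut_percent: int = 30) -> tuple[int, int]:
    """Split gross store spending into (platform share, developer share).

    platform_cut_percent is an assumed flat fee; real payouts may vary
    by program or promotion.
    """
    # Integer arithmetic avoids floating-point rounding on dollar amounts.
    platform_share = gross_usd * platform_cut_percent // 100
    developer_share = gross_usd - platform_share
    return platform_share, developer_share


platform_share, developer_share = split_store_revenue(2_000_000_000)
print(f"Platform share:  ${platform_share:,}")
print(f"Developer share: ${developer_share:,}")
```

Because the reported total is "more than" $2 billion, both shares are lower bounds rather than exact figures.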

That’s certainly nothing to sneeze at, and while many developers are finding success on the Quest store, the figure amounts to a slowdown in revenue momentum over the last 12 months, one which many developers have told me they’d been feeling.

The reason for the slowdown is likely a combination of Quest 2’s age (now three years old), the rather early announcement of Quest 3, a library of content that’s not quite meeting users’ expectations, and a still-struggling retention rate driven by core UX issues.

Quest 3 is poised for a strong holiday season, but with its higher price point and missing killer app for the heavily marketed mixed reality feature, will it do as well as Quest 2’s breakout performance in 2021?