Until recently I hadn’t had a chance to see Mojo Vision’s latest smart contact lens for myself, and I’ll admit I expected the company was still years away from a working contact lens with more than a simple notification light or a handful of static pixels. Looking through the company’s latest prototype, I was impressed to find a far more capable device than I had expected.

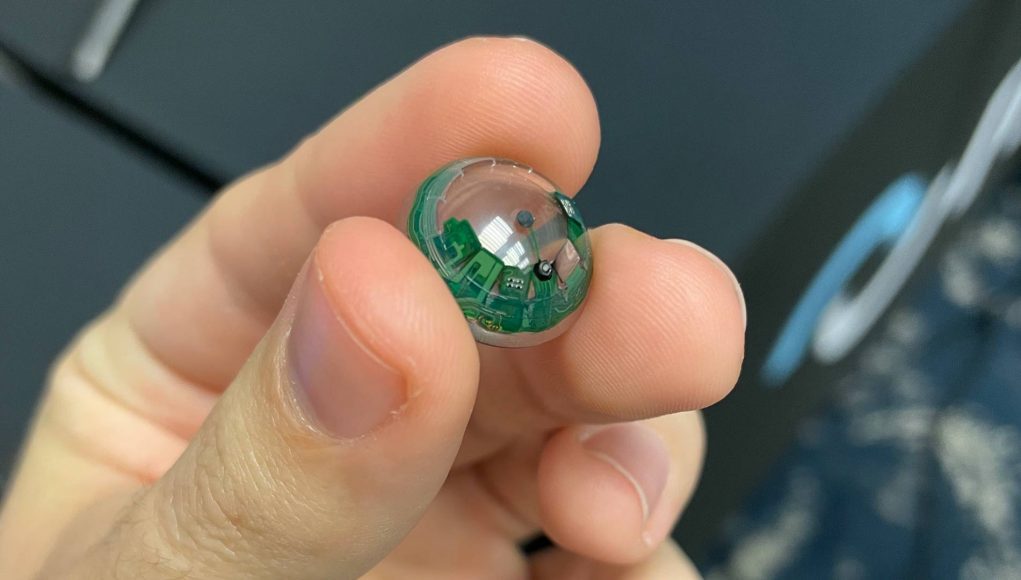

When I walked into Mojo Vision’s demo suite at AWE 2022 last month I was handed a hard contact lens that I assumed was a mockup of the tech the company hoped to eventually shrink and fit into the lens. But no… the company said this was a functional prototype, and everything inside the lens was real, working hardware.

The company tells me this latest prototype includes the “world’s smallest” MicroLED display (a minuscule 0.48mm, with just 1.8 microns between pixels), an ARM processor, a 5GHz radio, an IMU (with accelerometer, gyro, and magnetometer), “medical-grade micro-batteries,” and a power management circuit with wireless recharging components.

And while the Mojo Vision smart contact lens is still much thicker than your typical contact lens, last week the company demonstrated this prototype can work in an actual human eye, using Mojo Vision CEO Drew Perkins as the guinea pig.

It looks, well… fairly creepy when actually worn in the eye, but the company tells me that, in addition to making the lens thinner, it plans to cover the electronics with cosmetic irises so it looks more natural in the future.

At AWE I wasn’t able to put the contact lens in my own eye (Covid be damned). Instead, the company had mounted the lens on a tethered stick, which I held up to my eye to peer through.

When I did, I was surprised to see not just a handful of pixels but a full-blown graphical user interface with readable text and interface elements. It’s all monochrome green for now (taking advantage of the human eye’s greater sensitivity to green than to any other color), but the demo clearly shows that Mojo Vision’s ambitions are more than just a pipe dream.

Despite the physical display in the lens itself being opaque and directly in the middle of your eye, you can’t actually see it because it’s simply too small and too close. But you can see the image that it projects.

Unlike every HMD that exists today, Mojo Vision’s smart contact lens moves with your eye. That means the display, despite its very small 15° field-of-view, follows your vision as you look around. And it’s always sharp no matter where you look because it’s always over your fovea (the center part of the retina that sees the most detail). In essence, it’s like having ‘built-in’ foveated rendering. A limited FoV remains a bottleneck for many use-cases, but having the display actually move with your eye alleviates the limitation at least somewhat.

But what about input? Mojo Vision has also been hard at work figuring out how users will interact with the device. As I wasn’t able to put the lens into my own eye, the company instead put me in a VR headset with eye-tracking to emulate what it would be like to use the smart contact lens itself. Inside the headset I saw roughly the same interface I had seen through the demo contact lens, but now I could interact with the device using my eyes.

The current implementation doesn’t constrain the entire interface to the small field-of-view. Instead, your gaze acts as a sort of ‘spotlight’ which reveals a larger interface as you move your eyes around. You can interact with parts of the interface by hovering your gaze on a button to do things like show the current weather or recent text messages.
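Selecting a button by hovering your gaze on it is usually called dwell-based selection. As a rough illustration only, here is a minimal sketch of how such a dwell trigger can work, assuming gaze samples arrive as timestamped hits against named interface targets. The function name, the target names, and the 0.5-second threshold are all hypothetical; Mojo Vision hasn’t described its actual implementation.

```python
from typing import Iterable, List, Optional, Tuple

# Hypothetical dwell threshold; Mojo Vision has not published its real value.
DWELL_SECONDS = 0.5


def dwell_activations(
    samples: Iterable[Tuple[float, Optional[str]]],
    dwell_seconds: float = DWELL_SECONDS,
) -> List[Tuple[float, str]]:
    """Return (time, target) pairs for targets fixated long enough to fire.

    `samples` are (timestamp_in_seconds, target_id) gaze samples in time
    order; target_id is None when the gaze is not over any button.
    A target fires once per continuous fixation; looking away re-arms it.
    """
    activations: List[Tuple[float, str]] = []
    current: Optional[str] = None
    start = 0.0
    fired = False
    for t, target in samples:
        if target != current:
            # Gaze moved to a new target (or off all targets): restart the timer.
            current, start, fired = target, t, False
        elif target is not None and not fired and t - start >= dwell_seconds:
            # Gaze has rested on the same target long enough: activate it once.
            activations.append((t, target))
            fired = True
    return activations


# Usage: a 0.6 s look at "weather" fires; a brief 0.2 s glance at "messages" does not.
gaze = [(0.0, "weather"), (0.2, "weather"), (0.4, "weather"), (0.6, "weather"),
        (0.7, None), (0.8, "messages"), (1.0, "messages")]
print(dwell_activations(gaze))  # [(0.6, 'weather')]
```

The threshold is the key trade-off in this kind of design: too short and ordinary glances trigger buttons by accident, too long and the interface feels sluggish.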

It’s an interesting, hands-free approach to an HMD interface, though in my experience the eyes themselves are not a great conscious input device because most of our eye-movements are subconsciously controlled. With enough practice it’s possible that manually controlling your gaze for input will become as simple and seamless as using your finger to control a touchscreen; ultimately, another form of input might prove better, but that remains to be seen.

This interface and input approach is of course entirely dependent on high quality eye-tracking. Since I didn’t get to put the lens on for myself, I have no way to judge whether Mojo Vision’s eye-tracking is up to the task, but the company claims its eye-tracking is an “order of magnitude more precise than today’s leading [XR] optical eye-tracking systems.”

In theory it should work as well as they claim—after all, what’s a better way to measure the movement of your eyes than with something that’s physically attached to them? In practice, the device’s IMU is presumably just as susceptible to drift as any other, which could be problematic. There’s also the matter of extrapolating and separating the movement of the user’s head from sensor data that’s coming from an eye-mounted device.
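To make those two concerns concrete, here is a minimal one-axis (yaw-only) sketch, not Mojo Vision’s method. It assumes the lens integrates gyro readings into an angle, that the gyro has a small uncorrected bias (the 0.01°/s figure is purely illustrative), and that an independent head-orientation estimate is available from some other sensor, which is a hypothetical input here. Real systems track full 3-D orientation and fuse the accelerometer and magnetometer to correct drift.

```python
# Simplified 1-D (yaw-only) model; real systems track full 3-D orientation.


def integrate_gyro(rates_dps, dt, bias_dps=0.0):
    """Integrate angular-rate samples (deg/s) into an angle (deg).

    A constant, uncorrected bias adds bias_dps * dt at every step,
    so the angle error grows linearly with time: that is drift.
    """
    angle = 0.0
    for rate in rates_dps:
        angle += (rate + bias_dps) * dt
    return angle


def eye_in_head(eye_in_world_deg, head_in_world_deg):
    """Remove head rotation from an eye-mounted sensor's reading.

    An eye-mounted IMU senses head rotation and eye rotation together;
    subtracting an independent (hypothetical) head estimate leaves the
    eye's rotation relative to the head.
    """
    return eye_in_world_deg - head_in_world_deg


# Usage: a perfectly still eye sampled at 100 Hz for 60 s with an
# illustrative 0.01 deg/s bias still appears to rotate by about 0.6 degrees.
still = [0.0] * 6000
print(round(integrate_gyro(still, dt=0.01, bias_dps=0.01), 3))  # 0.6

# Eye points 25 deg right in the world while the head is turned 15 deg right.
print(eye_in_head(25.0, 15.0))  # 10.0
```

Even this toy model shows why both problems matter for gaze input: the drift term never stops accumulating on its own, and any error in the head estimate passes straight into the computed gaze direction.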

If the company’s eye-tracking is as precise (and accurate) as they claim, it would be a major win because it could enable the device to function as a genuine AR contact lens capable of immersive experiences, rather than just a smart contact lens for basic informational display. Mojo Vision does claim it expects its contact lens to be able to do immersive AR eventually, including stereoscopic rendering with one contact in each eye. In any case, AR won’t be properly viable on the device until a larger field-of-view is achieved, but it’s an exciting possibility.

So what’s the road map for actually getting this thing to market? Mojo Vision says it fully expects FDA approval will be necessary before it can sell the lens to anyone, which means that even once everything is functional from a tech and feature standpoint, the company will need to run clinical trials. As for when that might all be complete, the company told me “not in a year, but certainly [sooner than] five years.”