Oculus is calling its latest rendering tech ‘Stereo Shading Reprojection’, and says that for scenes which are heavy in pixel shading, the approach can save upwards of 20% of GPU rendering time. The company says the new VR rendering technique can be easily added to Unity-based projects, and that it “shouldn’t be hard to integrate with Unreal and other engines.”

The VR industry has a vested interest in finding ways to make VR rendering more efficient. For every bit of increased efficiency, the (currently quite high) bar for the hardware required to drive VR headsets gets lower and the hardware less expensive, making VR available to more people.

Following last year’s introduction of ‘Asynchronous Spacewarp’, another bit of rendering tech which allowed Oculus to establish a lower ‘Oculus Minimum Spec’, the company has now introduced Stereo Shading Reprojection, a technique which can reduce GPU load by 20% on certain scenes.

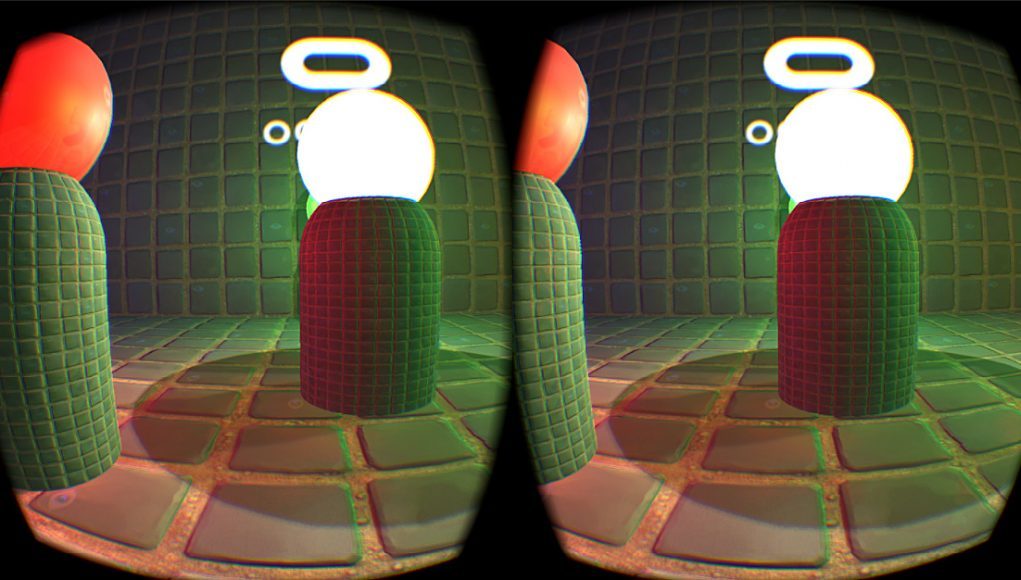

The basic idea behind SSR involves using similarities in the perspective of each eye’s view to eliminate redundant rendering work.

Because our eyes are separated by a small distance, each eye sees the world from a slightly different perspective. Though each view is different, there’s a lot of visual similarity from one to the next. SSR aims to capitalize on that similarity and avoid redundant rendering work: parts of the scene which have already been calculated for one eye can be used to infer what the scene should look like from the other eye’s similar perspective.

More technically, Oculus engineers Jian Zhang and Simon Green write on the Oculus developer blog that SSR uses “information from the depth buffer to reproject the first eye’s rendering result into the second eye’s framebuffer.” It’s a relatively simple idea, but as the pair points out, to work well for VR the solution needs to achieve the following:

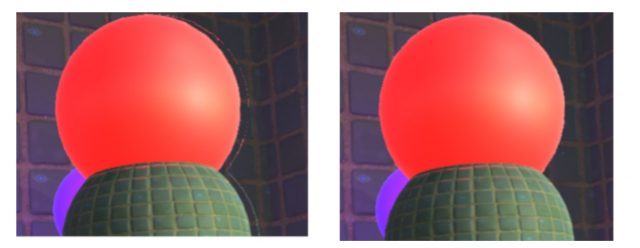

- It is still stereoscopically correct, i.e. the sense of depth should be identical to normal rendering

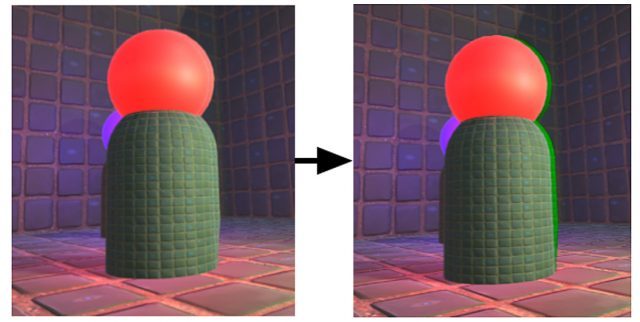

- It can recognize pixels not visible in the first eye but visible in the second eye due to slightly different points of view

- It has a backup solution for specular surfaces that don’t work well under reprojection

- It does not have obvious visual artifacts

And in order to be practical it should further:

- Be easy to integrate into your project

- Fit into the traditional rendering engine well, not interfering with other rendering like transparency passes or post effects

- Be easy to turn on and off dynamically, so you don’t have to use it when it isn’t a win

They share the basic procedure for Stereo Shading Reprojection rendering:

- Render left eye: save out the depth and color buffer before the transparency pass

- Render right eye: save out the right eye depth (after the right eye depth only pass)

- Reprojection pass: using the depth values, reproject the left eye color to the right eye’s position, and generate a pixel culling mask.

- Continue the right eye opaque and additive lighting passes, fill pixels in those areas which aren’t covered by the pixel culling mask.

- Reset the pixel culling mask and finish subsequent passes on the right eye.
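
As a rough illustration of the reprojection pass in the steps above, here is a minimal Python sketch for a single scanline. It is not Oculus’s shader code: the function name `reproject_scanline`, its parameters, the pinhole disparity formula, and the depth tolerance are all assumptions made for this example, which also assumes a rectified stereo pair with linear depth values.

```python
def reproject_scanline(left_color, left_depth, right_depth,
                       focal_px, eye_sep, depth_tol=0.01):
    """Hypothetical sketch: reuse left-eye colors for one right-eye scanline.

    Returns (right_color, needs_shading). needs_shading[x] is True where the
    right eye cannot reuse a left-eye result and must be shaded normally,
    i.e. the pixels left uncovered by the culling mask.
    """
    width = len(right_depth)
    right_color = [None] * width
    needs_shading = [True] * width

    for x_right, z in enumerate(right_depth):
        if z <= 0:
            continue  # no valid depth, leave for normal shading

        # Assumed pinhole model: horizontal disparity in pixels is f * b / z.
        disparity = focal_px * eye_sep / z
        # The same surface point appears further right in the left-eye image.
        x_left = int(round(x_right + disparity))

        if not 0 <= x_left < width:
            continue  # outside the left eye's view

        # If the depths disagree, the left eye saw a different surface there
        # (the point is occluded for the left eye), so do not reuse its color.
        if abs(left_depth[x_left] - z) > depth_tol * z:
            continue

        right_color[x_right] = left_color[x_left]
        needs_shading[x_right] = False

    return right_color, needs_shading


# Toy usage: a flat wall 10 units away, which gives a 2-pixel disparity.
colors, mask = reproject_scanline(
    left_color=list("abcdefgh"),
    left_depth=[10.0] * 8,
    right_depth=[10.0] * 8,
    focal_px=20.0,
    eye_sep=1.0,
)
```

In this toy case the flat wall produces a constant two-pixel shift, so only the two pixels at the right edge, which fall outside the left eye’s view, remain flagged for normal shading.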

The post on the Oculus developer blog further details how reprojection works in this case, how to detect and correct artifacts, and a number of limitations of the technique, including incompatibility with single-pass stereo rendering (a VR rendering technique which is especially good for CPU-bound scenes).

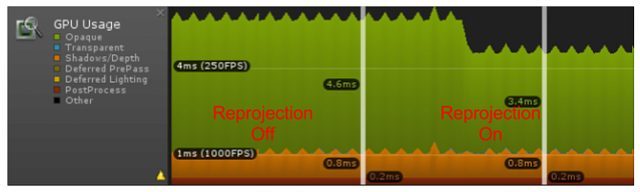

With SSR enabled, Oculus says their tests using an Nvidia GTX 970 GPU reveal savings of some 20% on rendering time, and that the technique is especially useful for scenes which are heavy in pixel shading (like those with dynamic lights).

As with all rendering performance gains, the extra headroom can be left free to make the scene easier to render on less powerful hardware, or it can be used to add more complex graphics and gameplay on the same target hardware.

Oculus says they plan to soon release Unity sample code demonstrating Stereo Shading Reprojection.