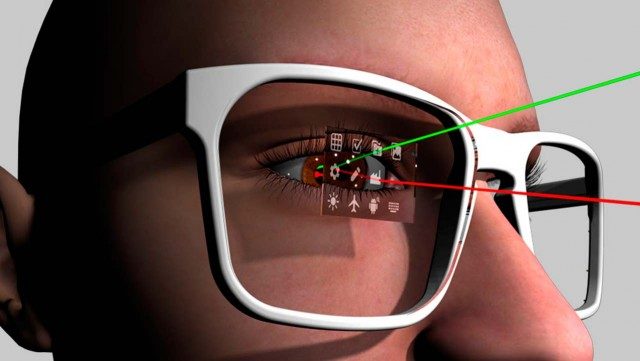

I had a chance to try a demo of Eyefluence, which has created a new model for eye interactions within virtual and augmented reality apps. Rather than relying on the usual eye interaction paradigm of dwelling your focus or explicitly winking to select, Eyefluence has developed a more comfortable selection system that triggers discrete actions through natural eye movements. At times it felt magical, as if the technology were almost reading my mind, while at other times it was clear that this is an early iteration of an emerging visual language that is still being developed and defined.

I spoke with Jim Marggraff, the CEO and founder of Eyefluence, at TechCrunch Disrupt last week, where we discussed the strengths and weaknesses of their eye interaction model, as well as some of the applications that were prototyped within the demo.

In 1968, Douglas Engelbart gave
