Virtual experiences through virtual, augmented, and mixed reality are a new frontier for computer graphics. This frontier state is radically different from modern game and film graphics. For those, decades of production expertise and stable technology have already realized the potential of graphics on 2D screens. This article describes comprehensive new systems optimized for virtual experiences we’re inventing at NVIDIA.

Guest Article by Dr. Morgan McGuire

Dr. Morgan McGuire is a scientist on the new experiences in AR and VR research team at NVIDIA. He’s contributed to the Skylanders, Call of Duty, Marvel Ultimate Alliance, and Titan Quest game series published by Activision and THQ. Morgan is the coauthor of The Graphics Codex and Computer Graphics: Principles & Practice. He holds faculty positions at the University of Waterloo and Williams College.

Update: Part 2 of this article is now available.

NVIDIA Research sites span the globe, with our scientists collaborating closely with local universities. We cover a wide domain of applications, including self-driving cars, robotics, and game and film graphics.

Our innovation on virtual experiences includes technologies that you’ve probably heard a bit about already, such as foveated rendering, varifocal optics, holography, and light fields. This article details our recent work on those, but most importantly reveals our vision for how they’ll work together to transform every interaction with computing and reality.

Our innovation on virtual experiences includes technologies that you’ve probably heard a bit about already, such as foveated rendering, varifocal optics, holography, and light fields. This article details our recent work on those, but most importantly reveals our vision for how they’ll work together to transform every interaction with computing and reality.

NVIDIA works hard to ensure that each generation of our GPUs are the best in the world. Our role in the research division is thinking beyond that product cycle of steady evolutionary improvement, in order to look for revolutionary change and new applications. We’re working to take virtual reality from an early adopter concept to a revolution for all of computing.

Research is Vision

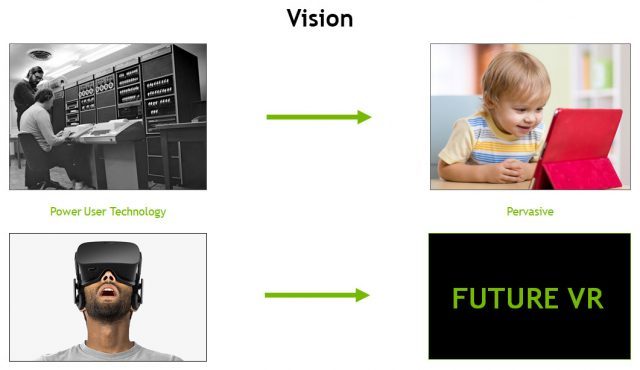

Our vision is that VR will be the interface to all computing. It will replace cell phone displays, computer monitors and keyboards, televisions and remotes, and automobile dashboards. To keep terminology simple, we use VR as shorthand for supporting all virtual experiences, whether or not you can also see the real world through the display.

We’re targeting the interface to all computing because our mission at NVIDIA is to create transformative technology. Technology is truly transformative only when it is in everyday use. It has to become a seamless and mostly transparent part of our lives to have real impact. The most important technologies are the ones we take for granted.

If we’re thinking about all computing and pervasive interfaces, what about VR for games? Today, games are an important VR application for early adopter power users. We already support them through products and are releasing new VR features with each GPU architecture. NVIDIA obviously values games highly and is ensuring that they will be fantastic in VR. However, the true potential of VR technology goes far beyond games, because games are only one part of computing. So, we started with VR games but that technology is now spreading with the scope of VR to work, social, fitness, healthcare, travel, science, education, and all other tasks for which computing now plays a role.

NVIDIA is in a unique position to contribute to the VR revolution. We’ve already transformed consumer computing once before having introduced the modern GPU in 1999, and with it high-performance computing for consumer applications. Today, not only your computer, but also your tablet, smartphone, automobile, and television now have GPUs in them. They provide a level of performance that once would have been considered a supercomputer only available to power users. As a result, we all enjoy a new level of productivity, convenience, and entertainment. Now we’re all power users, thanks to invisible and pervasive GPUs in our devices.

For VR to become a seamless part of our lives, the VR systems must become more comfortable, easy to use, affordable, and powerful. We’re inventing new headset technology that will replace modern VR’s bulky headsets with thin glasses driven by lasers and holograms. They’ll be as widespread as tablets, phones, and laptops, and even easier to operate. They’ll switch between AR/VR/MR modes instantly. And they’ll be powered by new GPUs and graphics software that will be almost unrecognizably different from today’s technology.

For VR to become a seamless part of our lives, the VR systems must become more comfortable, easy to use, affordable, and powerful. We’re inventing new headset technology that will replace modern VR’s bulky headsets with thin glasses driven by lasers and holograms. They’ll be as widespread as tablets, phones, and laptops, and even easier to operate. They’ll switch between AR/VR/MR modes instantly. And they’ll be powered by new GPUs and graphics software that will be almost unrecognizably different from today’s technology.

All of this innovation points to a new way of interacting with computers, and this will require not just a new devices or software but an entirely new system for VR. At NVIDIA, we’re inventing that system with cutting-edge tools, sensors, physics, AI, processors, algorithms, data structures, and displays.

Understanding the Pipeline

NVIDIA Research is very open about what we’re working on and sharing our results through scientific publications and open source code. In Part 2 of this article, I’m going to present a technical overview of some of our recent inventions. But first, to put them and our vision for future AR/VR systems in context, let’s examine how current film, game, and modern VR systems work.

Film Graphics Systems

Hollywood-blockbuster action films contain a mixture of footage of real objects and computer generated imagery (CGI) to create amazing visual effects. The CGI is so good now that Hollywood can make scenes that are entirely computer generated. During the beautifully choreographed introduction to Marvel’s Deadpool (2016), every object in the scene is rendered by a computer instead of filmed. Not just the explosions and bullets, but the buildings, vehicles, and people.

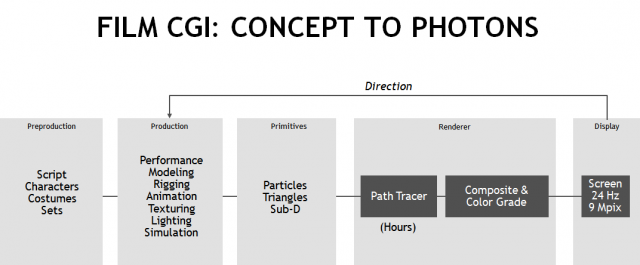

From a technical perspective, the film system for creating these images with high visual fidelity can be described by the following diagram:

From a technical perspective, the film system for creating these images with high visual fidelity can be described by the following diagram:

The diagram has many parts, from the authoring stages on the left, through the modeling primitives of particles, triangles, and curved subdivision surfaces, to the renderer. The renderer uses an algorithm called ‘path tracing’ that photo-realistically simulates light in the virtual scene.

The diagram has many parts, from the authoring stages on the left, through the modeling primitives of particles, triangles, and curved subdivision surfaces, to the renderer. The renderer uses an algorithm called ‘path tracing’ that photo-realistically simulates light in the virtual scene.

The rendering is also followed by manual post-processing of the 2D images for color and compositing. The whole process loops, as directors, editors, and artists iterate to modify the content based on visual feedback before it is shown to audiences. The image quality of film is our goal for VR realism.

Game Systems

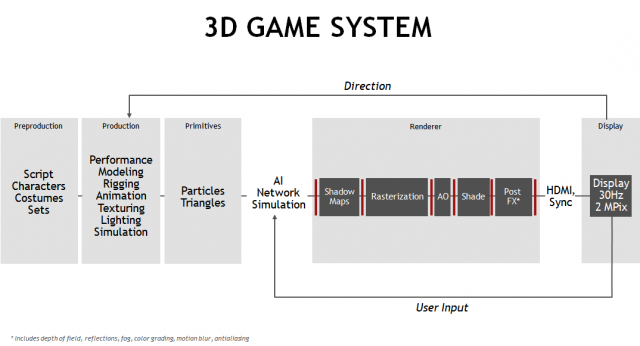

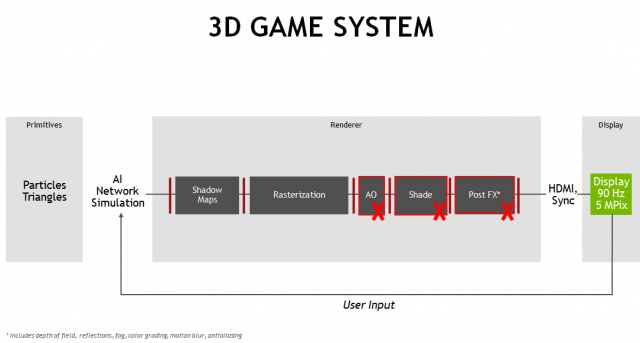

The film graphics system evolved into a similar system for 3D games. Games represent our target for VR interaction speed and flexibility, even for non-entertainment applications. The game graphics system looks like this diagram:

I’m specifically showing a deferred shading pipeline here. That’s what most PC games use because it delivers the highest image quality and throughput.

I’m specifically showing a deferred shading pipeline here. That’s what most PC games use because it delivers the highest image quality and throughput.

Like film, it begins with the authoring process and has the big art direction loop. Games add a crucial interaction loop for the player. When the player sees something on-screen, they react with a button press. That input then feeds into a later frame in the pipeline of graphics processing. This process introduces ‘latency’, which is the time it takes to update frames with new user input taken into account. For an action title to feel responsive, latency needs to be under 150ms in a traditional video game, so keeping it reasonably low is a challenge.

Unfortunately, there are many factors that can increase latency. For instance, games use a ‘rasterization’-based rendering algorithm instead of path tracing. The deferred-shading rasterization pipeline has a lot of stages, and each stage adds some latency. As with film, games also have a large 2D post-processing component, which is labelled ‘PostFX’ in the multi-stage pipeline referenced above. Like an assembly line, that long pipeline increases throughput and allows smooth framerates and high resolutions, but the increased complexity adds latency.

If you only look at the output, pixels are coming out of the assembly line quickly, which is why PC games have high frame rates. The catch is that the pixels spend a long time in the pipeline because it has so many stages. The red vertical lines in the diagram represent barrier synchronization points. They amplify the latency of the stages because at a barrier, the first pixel of the next stage can’t be processed until the last pixel of the previous stage is complete.

The game pipeline can deliver amazing visual experiences. With careful art direction, they approach film CGI or even live-action film quality on a top of the line GPU. For example, look at the video game Star Wars: Battlefront II (2017).

Still, the best frames from a Star Wars video game will be much more static than those from a Star Wars movie. That’s because game visual effects must be tuned for performance. This means that the lighting and geometry can’t change in the epic ways we see on the big screen. You’re probably familiar with relatively static gameplay environments that only switch to big set-piece explosions during cut scenes.

Still, the best frames from a Star Wars video game will be much more static than those from a Star Wars movie. That’s because game visual effects must be tuned for performance. This means that the lighting and geometry can’t change in the epic ways we see on the big screen. You’re probably familiar with relatively static gameplay environments that only switch to big set-piece explosions during cut scenes.

Modern Virtual Reality Systems

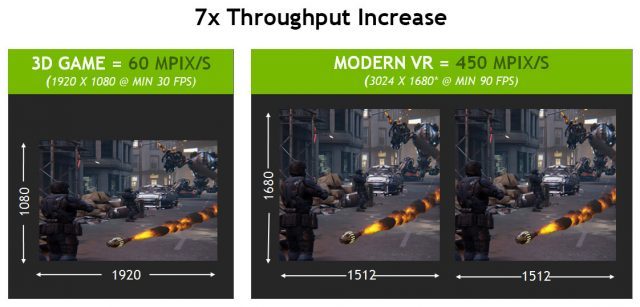

Now let’s see how film and games differ from modern VR. When developers migrate their game engines to VR, the first challenge they hit is the specification increase. There’s a jump in raw graphics power from 60 million pixels per second (MPix/s) in a game to 450 MPix/s for VR. And that’s just the beginning… these demands will quadruple that in the next year.

450 Mpix/second on an Oculus Rift or HTC Vive today is almost a seven times increase in the number of pixels per second compared to 1080p gaming at 30 FPS. This is a throughput increase because it changes the rate at which pixels move through the graphics system. That’s big, but the performance challenge is even greater. Recall how game interaction latency was around 100-150ms between a player input and pixels changing on the screen for a traditional game. For VR, we need not only a seven times throughput increase, but also a seven times reduction in the latency at the same time. How do today’s VR developers accomplish this? Let’s look at latency first.

450 Mpix/second on an Oculus Rift or HTC Vive today is almost a seven times increase in the number of pixels per second compared to 1080p gaming at 30 FPS. This is a throughput increase because it changes the rate at which pixels move through the graphics system. That’s big, but the performance challenge is even greater. Recall how game interaction latency was around 100-150ms between a player input and pixels changing on the screen for a traditional game. For VR, we need not only a seven times throughput increase, but also a seven times reduction in the latency at the same time. How do today’s VR developers accomplish this? Let’s look at latency first.

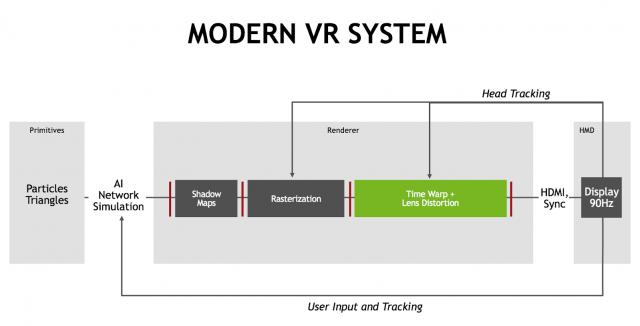

In the diagram below, latency is the time it takes data to move from the left to the right side of the system. More stages in the system give better throughput because they can work in parallel, but they also make the pipeline longer, so latency gets worse. To reduce latency, you need to eliminate boxes and red lines.

As you might expect, to reduce latency developers remove as many stages as they can, as shown in the modified diagram above. That means switching back to a ‘forward’ rendering pipeline where everything is done in one 3D pass over the scene instead of multiple 2D shading and PostFX passes. This reduces throughput, which is then conserved by significantly lowering image quality. Unfortunately, it still doesn’t give quite enough latency reduction.

As you might expect, to reduce latency developers remove as many stages as they can, as shown in the modified diagram above. That means switching back to a ‘forward’ rendering pipeline where everything is done in one 3D pass over the scene instead of multiple 2D shading and PostFX passes. This reduces throughput, which is then conserved by significantly lowering image quality. Unfortunately, it still doesn’t give quite enough latency reduction.

The key technology that helped close the latency gap in modern VR is called Time Warp. Under Time Warp, images shown on screen can be updated without a full trip through the graphics pipeline. Instead, the head tracking data are routed to a GPU stage that appears after rendering is complete. Because this stage is ‘closer’ to the display, it can warp the already-rendered image to match the latest head-tracked data, without taking a trip through the entire rendering pipeline. With some predictive techniques, this brings the perceived latency down from about 50ms to zero in the best case.

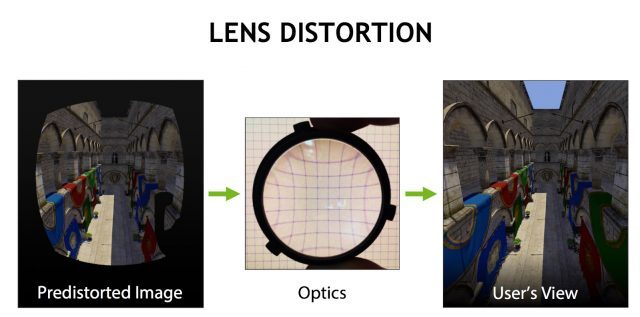

Another key enabling idea for modern VR hardware is Lens Distortion. A good camera’s optics contain at least five high quality glass lenses. Unfortunately, that’s heavy, large, and expensive, and you can’t strap the equivalent of two SLR cameras to your head.

This is why many head-mounted displays use a single inexpensive plastic lens per eye. These lenses are light and small, but low quality. To correct for the distortion and chromatic aberration from a simple lens, shaders pre-distort the images by the opposite amounts.

This is why many head-mounted displays use a single inexpensive plastic lens per eye. These lenses are light and small, but low quality. To correct for the distortion and chromatic aberration from a simple lens, shaders pre-distort the images by the opposite amounts.

NVIDIA GPU hardware and our VRWorks software accelerate the modern VR pipeline. The GeForce GTX 1080 and other Pascal architecture GPUs use a new feature called Simultaneous Multiprojection to render multiple views with increased throughput and reduced latency. This feature provides single-pass stereo so that both eyes render at the same time, along with lens-matched shading, which renders directly into the predistorted image and gives better performance and more sharpness. The GDDR5X memory in the 1080 provides 1.7x the bandwidth of the previous generation and hardware audio and physics help create a more accurate virtual world to increase immersion.

Reduced pipeline stages, Time Warp, Lens Distortion, and a powerful PC GPU comprise the Modern VR system.

Reduced pipeline stages, Time Warp, Lens Distortion, and a powerful PC GPU comprise the Modern VR system.

– – — – –

Now that we’ve established how film, games, and VR graphics work, in Part 2 of this article we look at the limits of human visual perception and show several of the methods we’re exploring to drive performance closer to them in VR systems of the future.