Ahead of SID Display Week, researchers have published details on Google and LG’s 18 Mpixel 4.3-in 1,443-ppi 120Hz OLED display made for wide field of view VR headsets, which was teased back in March.

According to the paper, the design uses a white OLED with a color filter structure for high-density pixelization, and an n‐type LTPS backplane for faster response times than mobile phone displays.

The researchers also developed a foveated pixel pipeline for the display which they say is “appropriate for virtual reality and augmented reality applications, especially mobile systems.” The researchers attached to the project are Google’s Carlin Vieri, Grace Lee, and Nikhil Balram, and LG’s Sang Hoon Jung, Joon Young Yang, Soo Young Yoon, and In Byeong Kang.

The paper maintains the human visual system (HVS) has a FOV of approximately 160 degrees horizontal by 150 degrees vertical, and an acuity of 60 pixels per degree (ppd), which translates to a resolution requirement of 9,600 × 9,000 pixels per eye. At least in the context of VR, a display with those exact specs would match up to a human’s natural ability to see.

The table below compares the panel as built with the upper bound set by the human visual system.

Human Visual System vs. Google/LG’s Display

| Specification | Upper bound | As built |
|---|---|---|
| Pixel count (h × v) | 9,600 × 9,000 | 4,800 × 3,840 |
| Acuity (ppd) | 60 | 40 |
| Pixels per inch (ppi) | 2,183 | 1,443 |
| Pixel pitch (µm) | 11.6 | 17.6 |
| FoV (°, h × v) | 160 × 150 | 120 × 96 |
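The figures in the table follow from simple arithmetic, which can be sketched in a few lines of Python. This is a rough check rather than the paper's method: it assumes pixel count is just field of view multiplied by pixels per degree (ignoring lens distortion), and the function names `pixels_for` and `ppi_from_pitch` are made up for illustration.

```python
MM_PER_INCH = 25.4

def pixels_for(fov_deg, ppd):
    """Pixels needed along one axis to cover fov_deg at ppd pixels per degree.

    Assumes a uniform angular resolution across the field of view.
    """
    return round(fov_deg * ppd)

def ppi_from_pitch(pitch_um):
    """Convert a pixel pitch in micrometres to pixels per inch."""
    return MM_PER_INCH * 1000 / pitch_um

# Upper bound from the human visual system: 160 x 150 degrees at 60 ppd.
hvs = (pixels_for(160, 60), pixels_for(150, 60))

# Panel as built: 120 x 96 degrees at 40 ppd.
panel = (pixels_for(120, 40), pixels_for(96, 40))

# Total pixel count of the panel, in megapixels.
panel_megapixels = panel[0] * panel[1] / 1e6

# Pixel density implied by the 17.6 um pitch.
panel_ppi = ppi_from_pitch(17.6)

print(hvs, panel, panel_megapixels, round(panel_ppi))
```

Under those assumptions the as-built numbers line up with the headline specs: 4,800 × 3,840 pixels is roughly 18 Mpixel, and a 17.6 µm pitch works out to about 1,443 ppi.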

To reduce motion blur, the 120Hz OLED is said to support short persistence illumination of up to 1.65 ms.
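To put that persistence figure in context, here is a minimal sketch relating it to the refresh rate. It assumes the panel is lit once per frame, so the duty cycle is simply persistence divided by frame period. The helper names are hypothetical.

```python
def frame_period_ms(refresh_hz):
    """Duration of one frame in milliseconds."""
    return 1000.0 / refresh_hz

def duty_cycle(persistence_ms, refresh_hz):
    """Fraction of each frame the panel is lit, assuming one pulse per frame."""
    return persistence_ms / frame_period_ms(refresh_hz)

period = frame_period_ms(120)   # about 8.33 ms per frame at 120 Hz
duty = duty_cycle(1.65, 120)    # about 0.198, i.e. just under 20%

print(f"{period:.2f} ms frame, {duty:.1%} duty")
```

On that assumption, 1.65 ms of illumination per 120 Hz frame comes to just under a 20% duty cycle, in line with the 20% duty figure quoted for brightness in the panel's spec table.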

To drive the display, the paper specifically mentions the need for foveated rendering, a technique that uses eye-tracking to position the highest resolution image directly at the eye’s most photoreceptor-dense region, the fovea. “Foveated rendering and transport are critical elements for implementation of standalone VR HMDs using this 4.3′′ OLED display,” the researchers say.

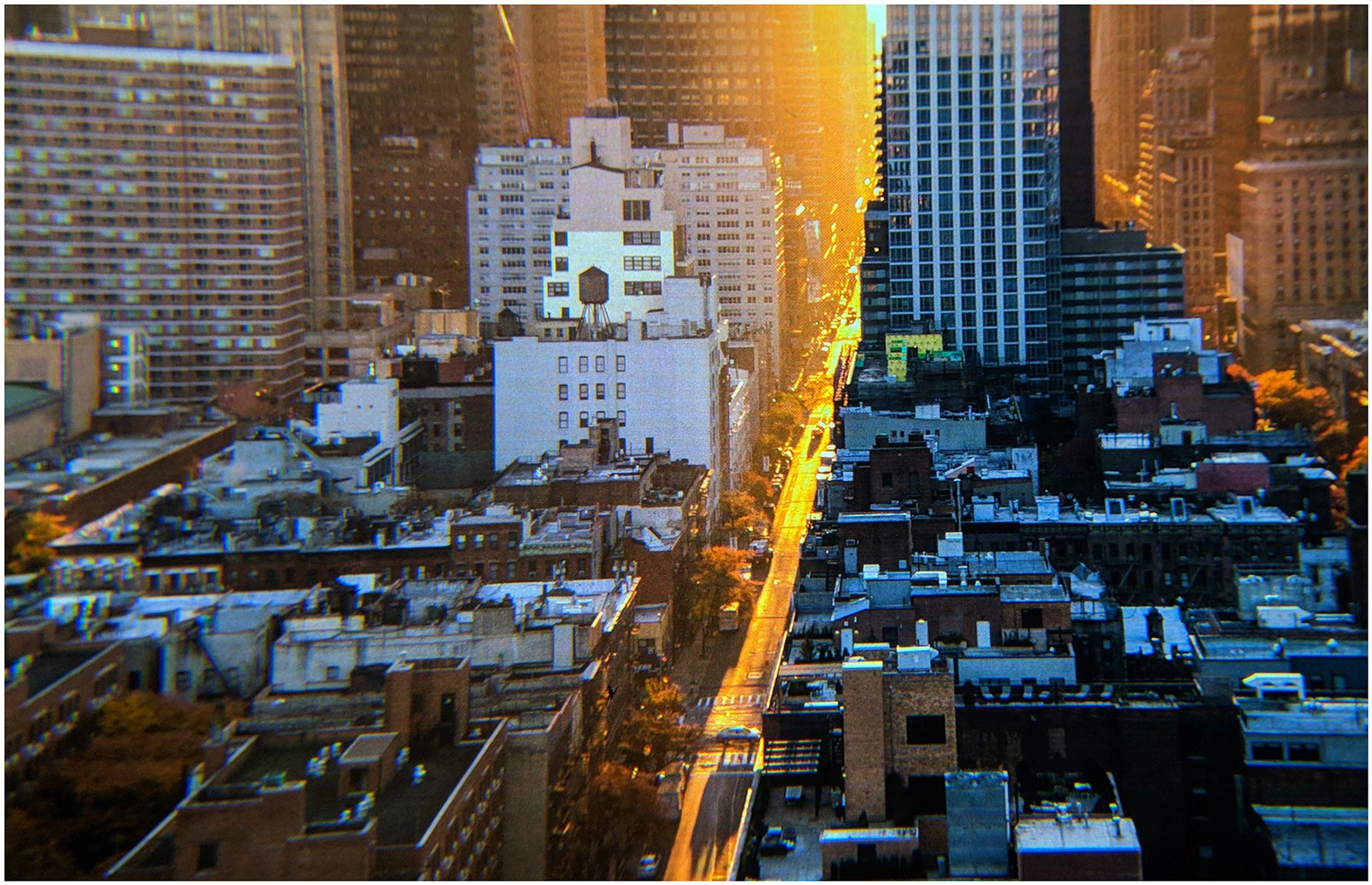

It’s difficult to convey a display’s acuity on a webpage viewed on a traditional monitor, which necessarily involves a modicum of image compression, but the paper does include a few pictures of the panel in action.

The researchers also photographed the panel with a VR optic to magnify what you might see if it were implemented in a headset. No distortion correction was applied to the displayed image, although the quality is “very good with no visible screen door effect even when viewed through high quality optics in a wide FoV HMD,” the paper maintains.

Considering Google and LG say this specific panel configuration, which requires foveated rendering, is especially appropriate for mobile systems, the future for mobile VR/AR may be very bright (and clear) indeed.

Both Japan-based JDI and China-based INT are also presenting VR display panels at SID Display Week: a 1,001 PPI LCD (JDI) and a 2,228 PPI AMOLED (INT).

We expect to hear more about Google and LG’s display at today’s SID Display Week talk, which takes place on May 22nd from 11:10 AM to 12:30 PM PT. We’ll update this article accordingly. In the meantime, check out the specs below:

Google & LG New VR Display Specs

| Attribute | Value |
|---|---|
| Size (diagonal) | 4.3″ |
| Subpixel count | 3840 × 2 (either RG or BG) × 4800 |
| Pixel pitch | 17.6 µm (1443 ppi) |
| Brightness | 150 cd/m² @ 20% duty |
| Contrast | >15,000:1 |
| Color depth | 10 bits |
| Viewing angle | 30° (H), 15° (V) |
| Refresh rate | 120 Hz |
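These specs also hint at why the researchers call foveated transport critical. The back-of-the-envelope sketch below estimates the uncompressed data rate for a single panel. It rests on assumptions the article does not confirm: that the 10-bit color depth applies per subpixel, that there is no blanking overhead or compression, and that a frame is sent either as full RGB (three values per pixel) or in the panel's native layout (two subpixels per pixel). The function name is hypothetical.

```python
def raw_gbit_per_s(width, height, values_per_pixel, bits_per_value, refresh_hz):
    """Uncompressed data rate in Gbit/s, ignoring blanking and protocol overhead."""
    bits_per_frame = width * height * values_per_pixel * bits_per_value
    return bits_per_frame * refresh_hz / 1e9

# Full RGB frames: 3 values per pixel (assumption).
rgb_rate = raw_gbit_per_s(4800, 3840, 3, 10, 120)

# Native subpixel layout: 2 subpixels per pixel (RG or BG).
native_rate = raw_gbit_per_s(4800, 3840, 2, 10, 120)

print(f"RGB: {rgb_rate:.1f} Gbit/s, native: {native_rate:.1f} Gbit/s")
```

Under these assumptions a single eye would need roughly 44 to 66 Gbit/s of raw bandwidth, which gives a sense of why sending full-resolution frames to a standalone headset is impractical without some form of foveation.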