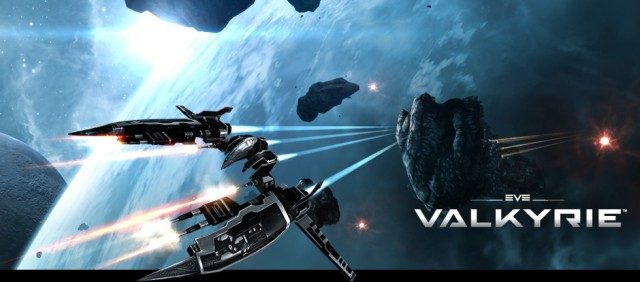

EVE: Valkyrie is a fast-paced, VR-exclusive space dogfighting game from CCP Games, the creators of EVE Online. As one of the first games to showcase what VR technology makes possible, EVE: Valkyrie has played a pivotal role in VR’s recent ‘coming of age’, helping to convince an initially sceptical gaming world that VR is the future of games. This groundbreaking title, developed at CCP’s Newcastle studio, has been widely acclaimed as one of VR’s ‘killer apps’.

EVE: Valkyrie creators Andy Robinson and Erich Cooper chart the 15-month history of EVE: Valkyrie, from a ‘20% time’ project for a small group of friends in Reykjavik, Iceland, to the multi-platform AAA game that has become the poster child for VR. Learn about the challenges we faced developing for rapidly changing platforms under more NDAs than you can shake a stick at, and what it took to showcase the game to tens of thousands of players at events such as E3, GDC, CES and Gamescom.

Speakers:

- Andy Robinson, Co-creator and artist on the original EVE-VR prototype, CCP

- Erich Cooper, Game Designer, CCP

Road to VR Editor Paul James is live blogging the talk from VRTGO UK 2014.